Deploying FastAPI Applications

Introduction

FastAPI is a modern, high-performance web framework for building APIs with Python 3.7+ based on standard Python type hints. It is built on top of Starlette for the web parts and Pydantic for the data parts. With FastAPI, you can start building an API with minimal setup: it provides a simple interface for routing, validation, and documentation of your API’s endpoints. Some of the key features of FastAPI include:

- fast execution speed,
- support for asynchronous programming,
- built-in support for data validation and serialization, and
- automatic generation of API documentation using the OpenAPI standard.

In this article, you will learn to deploy a FastAPI application on a private server. For demonstration, I will use an EC2 instance, but the steps can be replicated on any machine (on a private or public cloud).

Building the application

In order to deploy a FastAPI application, we first need to build one. For demo purposes, we will build a simple application with a single endpoint. The API will respond with the message {"result": "Welcome to FastAPI"} whenever a user sends a GET request.

To build the application, follow these steps:

Note: All of the code is available in the following repository: https://github.com/bhattbhuwan13/heroku-fastapi

Install FastAPI using the command: pip install "fastapi[all]". This installs FastAPI along with all of its optional dependencies, including uvicorn.

Create a new file, main.py, with the following contents:

from fastapi import FastAPI

# Instantiate the class
app = FastAPI()

# Define a GET method on the specified endpoint
@app.get("/")
def hello():
    return {"result": "Welcome to FastAPI"}

Freeze all the requirements using

pip freeze > requirements.txt

Run the application locally using

uvicorn main:app

After running this command, you should get {"result": "Welcome to FastAPI"} whenever you visit 127.0.0.1:8000.

The above steps are necessary to make sure that the application works locally. This prevents local errors from propagating to the server and allows you to focus on deployment. It is best to run the above commands while you are inside a virtual environment.
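The local check described above can also be scripted instead of done in a browser. The following is a minimal sketch using only the Python standard library; the helper names `is_expected_body` and `check` are illustrative and not part of the original repository, and the sketch assumes the application is running at uvicorn's default address.

```python
import json
import urllib.request

# The body the demo endpoint is expected to return.
EXPECTED = {"result": "Welcome to FastAPI"}


def is_expected_body(body: bytes) -> bool:
    """Return True if the raw response body decodes to the welcome JSON."""
    try:
        return json.loads(body) == EXPECTED
    except ValueError:
        # Covers invalid JSON as well as undecodable bytes.
        return False


def check(url: str, timeout: float = 5.0) -> bool:
    """Send a GET request to `url` and compare the body with EXPECTED."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status == 200 and is_expected_body(response.read())
```

With uvicorn running, calling `check("http://127.0.0.1:8000/")` should return True. If nothing is listening on that address, `urlopen` raises `urllib.error.URLError` rather than returning False.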

Deploying FastAPI to a private server

Now that the API is running locally, we can deploy it to production. To do so, you will need to launch an EC2 instance on AWS and then deploy the application on it.

To launch an EC2 instance, follow these steps:

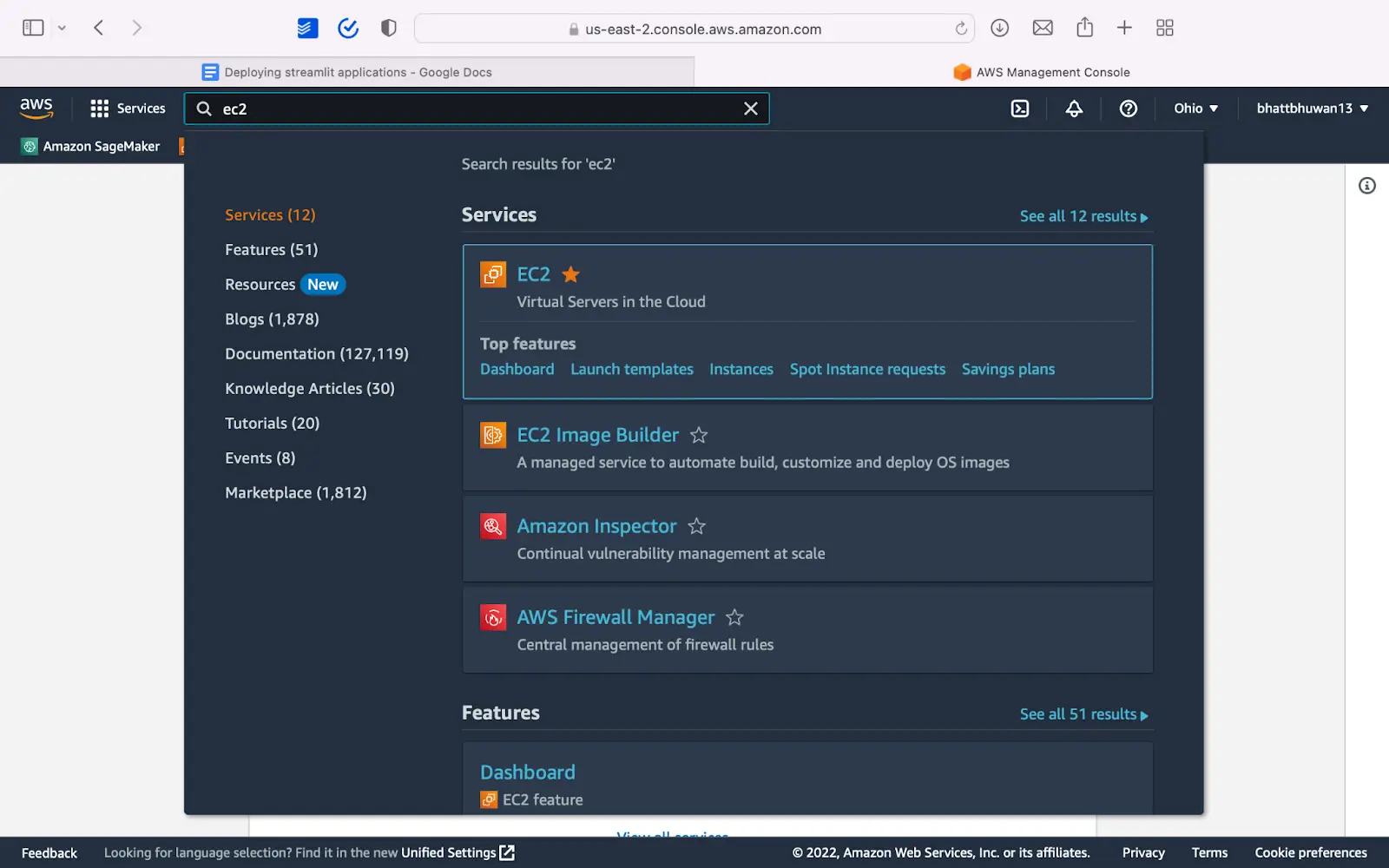

- Log in to AWS, find EC2 among the services, and click to open it.

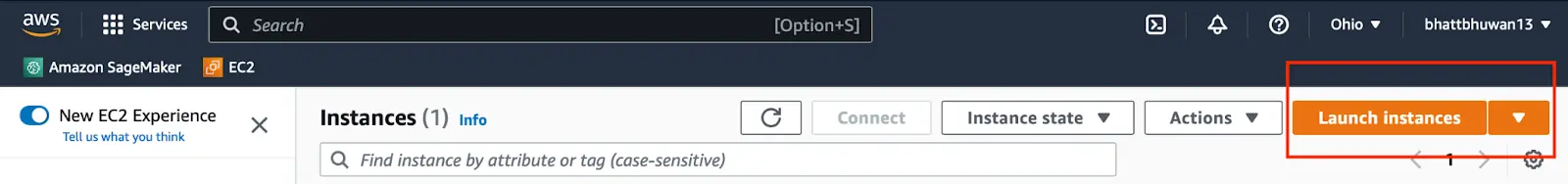

- Click on the Launch Instance button:

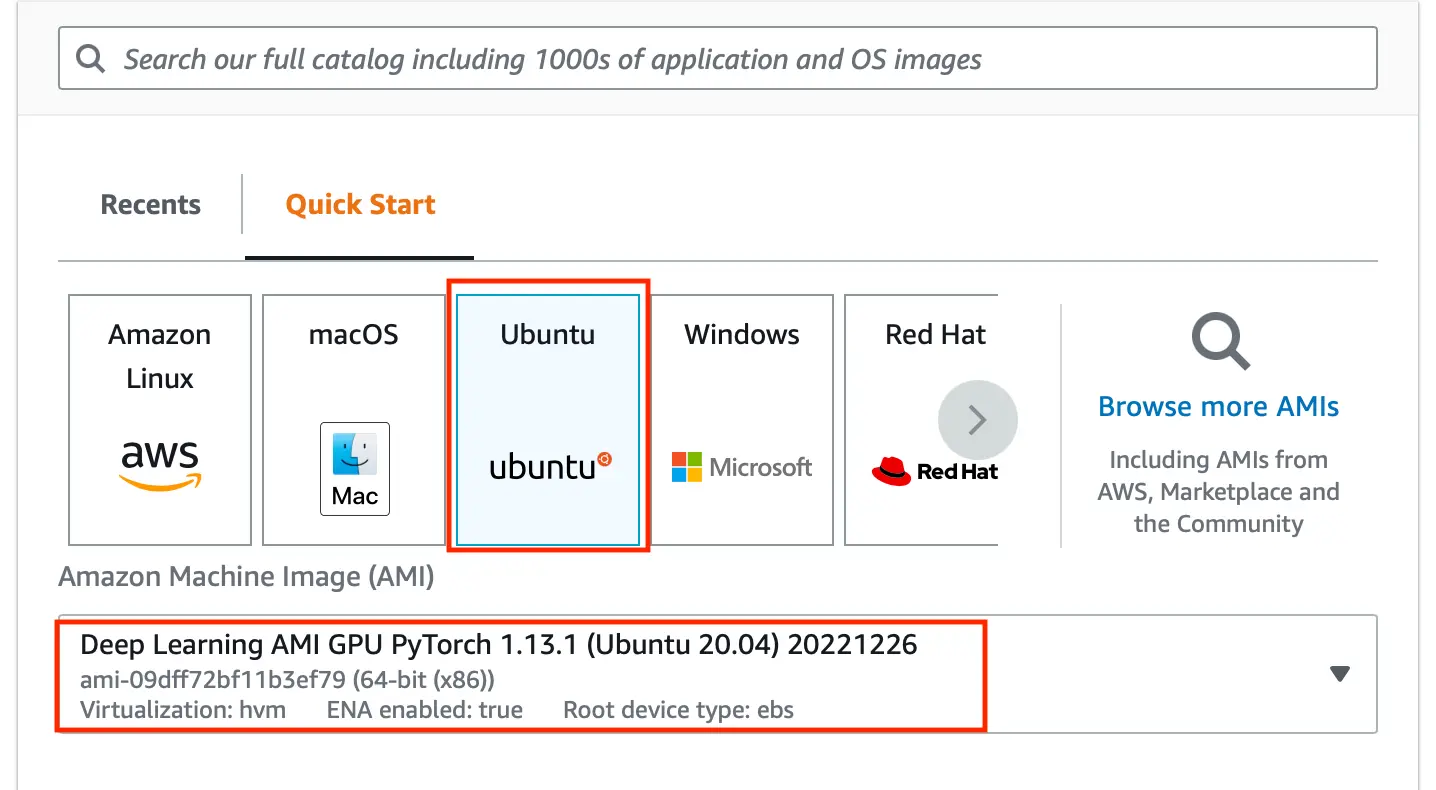

- You are free to use any instance and OS type, but for the sake of convenience, choose an Ubuntu instance and, for the AMI, choose a Deep Learning AMI. This comes with Docker preinstalled and saves us time and effort in later steps.

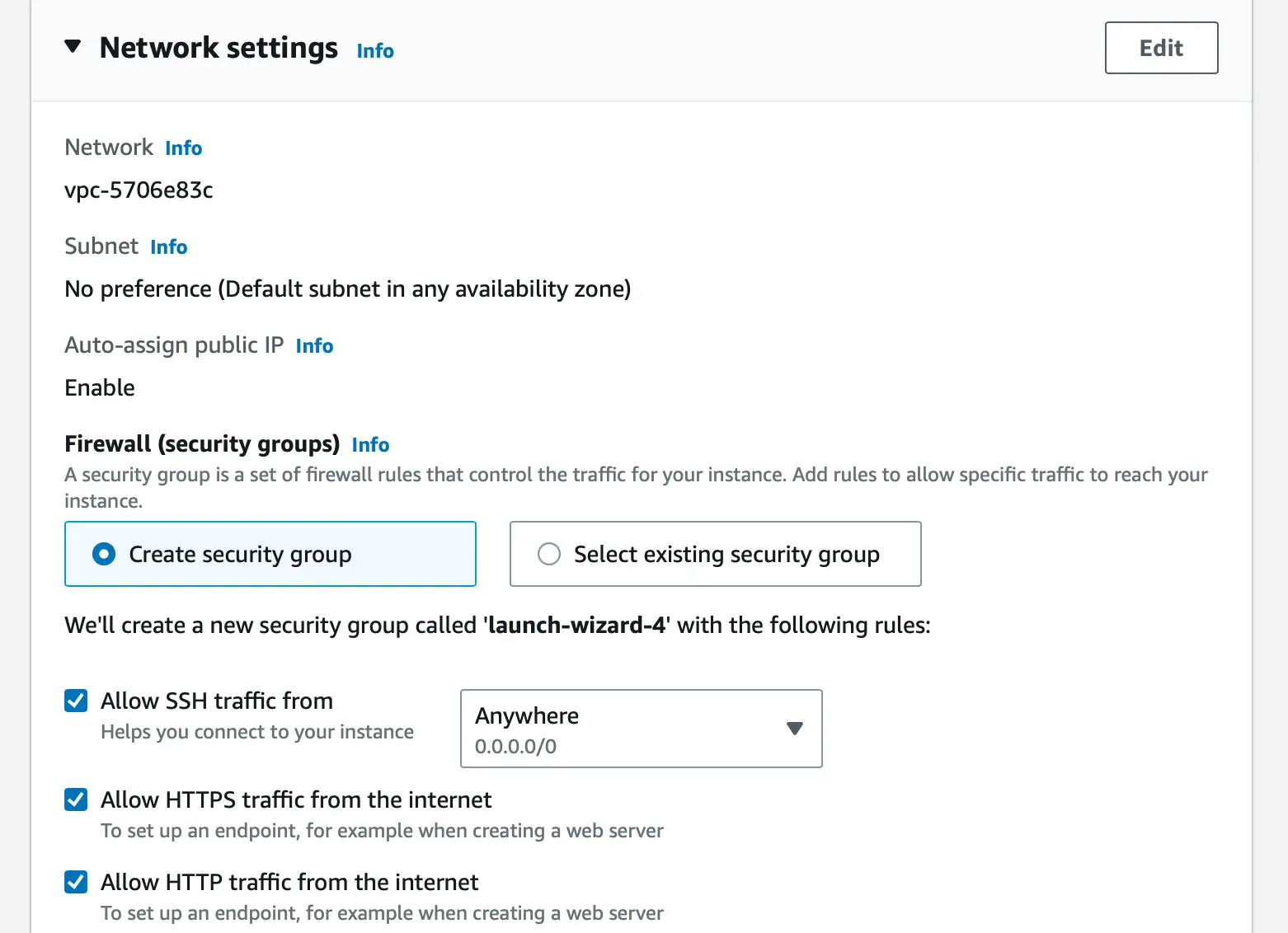

- This is important. No matter which cloud service you use, allow incoming connections on port 80 and, optionally, port 443. On AWS EC2, you can do this by checking the options to allow HTTP and HTTPS traffic under Network Settings. See the image below for reference.
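Once the instance is up, it can be useful to confirm from your own machine that port 80 is actually reachable. The sketch below is one way to do that with the standard library; `probe_port` is a hypothetical helper, and the interpretation of its results is a rule of thumb rather than a guarantee.

```python
import socket


def probe_port(host: str, port: int, timeout: float = 3.0) -> str:
    """Classify a TCP port as 'open', 'refused', or 'unreachable'."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        # The connection attempt reached the machine, but no process is listening.
        return "refused"
    except OSError:
        # Timeouts, DNS failures, and routing errors all end up here.
        return "unreachable"
```

For example, `probe_port("52.15.39.198", 80)` checks the demo server used in this article. Before nginx is installed, "refused" usually means the traffic is getting through and nothing is listening yet, while "unreachable" after a timeout often points to a missing inbound rule.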

Now that you have a server ready, you can deploy the application.

To deploy the application, follow these steps:

- Create a virtual environment and install all the dependencies in the requirements.txt file created earlier. To install the dependencies, use the following command:

pip install -r requirements.txt

Install nginx. On an Ubuntu-based instance, you can do so with the command "sudo apt install nginx".

Configure nginx by creating a new file, fastapi_nginx, in the /etc/nginx/sites-enabled/ directory. The file should contain the following contents:

server {
    listen 80;
    server_name 52.15.39.198;

    location / {
        proxy_pass http://127.0.0.1:8000;
    }
}

In the above configuration, replace 52.15.39.198 with the public IP of your server.
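If you deploy to more than one server, editing the IP by hand gets tedious. As a rough sketch, the same server block can be generated from Python; the template constant and the `render_nginx_config` function are illustrative names introduced here, and the default upstream simply mirrors the proxy_pass target shown above.

```python
# Doubled braces are literal braces in str.format templates.
NGINX_TEMPLATE = """\
server {{
    listen 80;
    server_name {server_name};

    location / {{
        proxy_pass http://{upstream};
    }}
}}
"""


def render_nginx_config(server_name: str, upstream: str = "127.0.0.1:8000") -> str:
    """Fill the server block with a public IP (or domain) and the app address."""
    return NGINX_TEMPLATE.format(server_name=server_name, upstream=upstream)


# Print the configuration for the demo server used in this article.
print(render_nginx_config("52.15.39.198"))
```

The printed text can be redirected into the fastapi_nginx file. Writing into /etc/nginx/ normally requires root privileges, so the sketch only prints the result.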

Restart nginx: sudo service nginx restart

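Finally, in the directory containing main.py and with the virtual environment activated, run the API using either of the following commands:

python3 -m uvicorn main:app &

gunicorn main:app --workers 4 --worker-class uvicorn.workers.UvicornWorker --bind 127.0.0.1:8000 &

Both commands serve the application on 127.0.0.1:8000, the address that nginx forwards requests to; nginx itself is already listening on port 80. Gunicorn is not installed by fastapi[all], so install it with pip install gunicorn before using the second command.

Note: In the second command, set the --workers parameter to a value equal to the number of CPU cores.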
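The worker count in the note above does not have to be looked up by hand. Here is a small sketch that builds the gunicorn command with the worker count taken from `os.cpu_count()`; the function name `gunicorn_command` is hypothetical, and the default bind address is an assumption chosen to match the proxy_pass target in the nginx configuration.

```python
import os
import shlex


def gunicorn_command(app: str = "main:app", bind: str = "127.0.0.1:8000", workers=None):
    """Build the gunicorn argument list, defaulting workers to the CPU count."""
    if workers is None:
        # os.cpu_count() can return None when the count cannot be determined.
        workers = os.cpu_count() or 1
    return [
        "gunicorn", app,
        "--workers", str(workers),
        "--worker-class", "uvicorn.workers.UvicornWorker",
        "--bind", bind,
    ]


# Show the command as a single shell-ready string.
print(shlex.join(gunicorn_command()))
```

The returned list can be passed directly to `subprocess.Popen` to start the server, which avoids quoting problems compared with building one long string.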

Finally, you can visit the public URL of your server to see the API in action. For this particular example, the public IP is 52.15.39.198.

One of the major advantages of putting nginx in front of the application is that nginx can handle a large number of concurrent connections and supports features like load balancing and SSL termination.

Conclusion

In this article, you learned to build a FastAPI application and deploy it on a private server. The same steps can be followed to deploy any Python-based web application or API.
