Multi-GPU TensorFlow on Saturn Cloud

TensorFlow is a popular, powerful framework for deep learning used by data scientists across industries. However, its efficacy can sometimes be hamstrung by a lack of compute resources. You might start by training on a CPU and, when that’s too slow for your needs, bump up to a GPU. But even that can be insufficient! When you’re sitting there for an hour, or two, or three waiting for your model to train, you’re losing time you could spend making progress. What then? TensorFlow makes it easy to move up to training on multiple GPUs on a single machine, and you’ll be amazed how much performance and speed you can add for a modest extra cost.

In this article, you’ll learn how to combine TensorFlow’s built-in multi-GPU support with the compute power Saturn Cloud makes available to scale up your deep learning! We’ll first train a model on a single GPU, then walk through exactly how to scale it up. After that, we’ll compare the results and see what the real benefits are. You’ll also learn how it works under the hood, so you know what to expect from multi-GPU training and how to optimize it for your own tasks.

The Project

For the examples that follow, we’ll use a dataset of 40,000+ bird images and train a ResNet50 classifier to identify which of 285 species each bird belongs to. Thanks to the Kaggle contributors for this dataset!

To really show off the advantages of adding more GPUs to a training task, you need enough data to keep all those GPUs busy. If you have a small dataset, you probably won’t need as much compute as this one does.

Unfamiliar with GPUs? Visit our docs for an overview before continuing.

(While we aren’t delving into cluster-distributed training in this guide, watch this space for future posts on that topic too!)

Training Parameters:

- 285 classes

- 40,930 training images (resized to 224x224)

- 1,425 validation images (resized to 224x224)

- 50 epochs

- batch size varies by technique; see below for details

Setup

I’m using the Kaggle dataset mentioned above, which already neatly separates training, test, and validation samples. Here’s how I build the Keras dataset objects from it. (The batch size will be customized, as you’ll see later.)

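```python
import tensorflow as tf

batchsize = 64  # varies by technique; see the sections below

train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    '/datasets/birds/285 birds/train',
    image_size=(224, 224),
    batch_size=batchsize,
).cache().shuffle(1000).prefetch(2)  # cache decoded batches, reshuffle batch order each epoch, prefetch last

# Assumed path for the validation split; adjust to match your copy of the dataset.
valid_ds = tf.keras.preprocessing.image_dataset_from_directory(
    '/datasets/birds/285 birds/valid',
    image_size=(224, 224),
    batch_size=batchsize,
).cache().prefetch(2)
```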

If you’d like to see more detailed plots and metrics for these model training runs, visit our companion Weights and Biases report! To see the full code for all the models in this article, visit us on GitHub.

Training on a Single GPU

First, let’s quickly go over the basics and train the model on one GPU. This approach will get the job done … eventually. Because we are using just one GPU, we also need to keep its memory limits in mind: our batch size can’t be too large, or we’ll hit an out-of-memory (OOM) error.

- Machine: V100-2XLarge (8 cores, 61 GB RAM, 1 GPU, 100 GiB disk)

- Batch size: 64 (640 steps per epoch as a result)

- Approximate time per epoch with validation: about 2 minutes (121 s)

- Total training time: 1 hour, 44 minutes, 38 seconds (6,278s)

(The first couple epochs tend to be slower, so time per epoch is taken from the middle of the run.)

Code

We’re using Keras to handle the model fitting, which abstracts away a bunch of the boring tasks.

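```python
import tensorflow as tf
from tensorflow import keras
from wandb.keras import WandbCallback  # requires wandb.init(...) before fitting

model = tf.keras.applications.ResNet50(
    include_top=True,
    weights=None,  # train from scratch (random initialization)
    classes=285,
)

optimizer = keras.optimizers.Adam(learning_rate=0.02)

model.compile(
    loss='sparse_categorical_crossentropy',  # integer labels from image_dataset_from_directory
    optimizer=optimizer,
    metrics=['accuracy'],
)

model.fit(
    train_ds,
    epochs=50,
    validation_data=valid_ds,
    callbacks=[WandbCallback()],  # This line allows Weights and Biases to track model performance!
)
```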

Training Multi-GPU

We’re going to spin up a bigger EC2 instance now, with multiple GPUs, so we can distribute the training work across them and have them all train the same model simultaneously. This speeds up training dramatically. We don’t achieve a perfectly linear speedup (4 GPUs are not fully 4x faster) because the GPUs spend some time communicating with each other, but we get close!

Notice that startup takes a little longer for a multi-GPU training run than for a single-GPU run, but that’s a one-time delay per run.

- Machine: V100-8XLarge (32 cores, 244 GB RAM, 4 GPUs, 100 GiB disk)

- Batch size: 64*4 (256) (160 steps per epoch as a result)

- Approximate time per epoch with validation: 37 s

- Total training time: 33 minutes 56 sec (2,036s)

Code

The code is nearly identical to our single-GPU approach. We just wrap the model definition and compilation in a MirroredStrategy scope, so that TensorFlow knows this model should be trained across all the GPUs on the machine.

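```python
import tensorflow as tf
from tensorflow import keras
from wandb.keras import WandbCallback  # requires wandb.init(...) before fitting

strategy = tf.distribute.MirroredStrategy()  # uses all visible GPUs by default
print('Replicas:', strategy.num_replicas_in_sync)

# Global batch size: 64 per GPU x number of GPUs (256 on a 4-GPU machine).
# Build train_ds and valid_ds (see Setup) with this value.
batchsize = 64 * strategy.num_replicas_in_sync

with strategy.scope():
    # Variables created inside the scope are mirrored onto every GPU.
    model = tf.keras.applications.ResNet50(
        include_top=True,
        weights=None,
        classes=285,
    )
    optimizer = keras.optimizers.Adam(learning_rate=0.02)
    model.compile(
        loss='sparse_categorical_crossentropy',
        optimizer=optimizer,
        metrics=['accuracy'],
    )

# fit() can run outside the scope; Keras splits each batch across the replicas.
model.fit(
    train_ds,
    epochs=50,
    validation_data=valid_ds,
    callbacks=[WandbCallback()],
)
```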

Larger Batches

But there’s more! It turns out that the more powerful machine has spare GPU memory we can take advantage of. Let’s double our batch size again!

- Batch size: 64*8 (512) (80 steps per epoch as a result)

- Approximate time per epoch with validation: 33 s

- Total training time: 30 minutes 49 sec (1,849s)

How does it work?

Even at the directly scaled batch size of 256 (64 per GPU), we’ve cut our runtime by more than 3x. That makes intuitive sense given that we went from one GPU to four, minus some communication latency between the GPUs. But how do these GPUs work together?
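To put a number on “more than 3x”, here is a small plain-Python sketch that computes speedup and scaling efficiency from the total training times reported above. The helper names (speedup, scaling_efficiency) are illustrative. “Scaling efficiency” here simply means speedup divided by the number of GPUs, so 1.0 would be perfectly linear.

```python
# Total wall-clock training times (seconds) from the runs above, startup included.
SINGLE_GPU_S = 6278
FOUR_GPU_BATCH_256_S = 2036
FOUR_GPU_BATCH_512_S = 1849
N_GPUS = 4


def speedup(baseline_s: float, parallel_s: float) -> float:
    """How many times faster the parallel run finished than the baseline."""
    return baseline_s / parallel_s


def scaling_efficiency(baseline_s: float, parallel_s: float, n_gpus: int) -> float:
    """Fraction of ideal linear speedup achieved (1.0 = n GPUs were n times faster)."""
    return speedup(baseline_s, parallel_s) / n_gpus


for label, seconds in [("4 GPUs, batch 256", FOUR_GPU_BATCH_256_S),
                       ("4 GPUs, batch 512", FOUR_GPU_BATCH_512_S)]:
    print(f"{label}: {speedup(SINGLE_GPU_S, seconds):.2f}x faster, "
          f"{scaling_efficiency(SINGLE_GPU_S, seconds, N_GPUS):.0%} of linear scaling")
```

For the 256-sample run this works out to about 3.08x, or roughly 77% of linear scaling. The 512-sample run comes out higher (about 3.40x), but it also changed the batch size. That figure therefore mixes the effect of more GPUs with the effect of fewer, larger steps.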

Let’s start with the single GPU’s procedure. A single GPU works through every batch in every epoch: 64 images per batch × 640 batches × 50 epochs. The GPU parallelizes the math within each batch, but the batches themselves are processed one after another on a single device. For each batch, it computes gradients from the images and updates the model’s weights, gradually improving its performance as it goes.

When we expand to 4 GPUs on the same machine, we get the chance to parallelize the learning. With TensorFlow’s MirroredStrategy, the data in each batch (sometimes called the “global batch”) is split across the GPUs into “worker batches”. Each GPU holds a copy of the model, called a “replica”. The replicas learn on different parts of each batch, then combine their gradients at the end of the step and all apply the same update. This keeps them synchronized, so the result at the end of training is one model that has learned from all the data.
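To make the synchronization step concrete, here is a toy, pure-Python sketch of synchronous data-parallel training. It is not TensorFlow’s implementation. The one-parameter linear model, the helper names (mse_grad, split, mirrored_step), and the plain average standing in for the GPUs’ all-reduce are all illustrative assumptions. What it does show is why the replicas never drift apart: they start from the same weights and apply the same averaged gradient.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a toy model y_hat = w * x


def mse_grad(w: float, batch: List[Sample]) -> float:
    """Gradient of mean squared error with respect to w for y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def split(global_batch: List[Sample], n_replicas: int) -> List[List[Sample]]:
    """Cut the global batch into equal per-replica ("worker") batches."""
    per_replica = len(global_batch) // n_replicas
    if per_replica * n_replicas != len(global_batch):
        raise ValueError("global batch must divide evenly across replicas")
    return [global_batch[i * per_replica:(i + 1) * per_replica] for i in range(n_replicas)]


def mirrored_step(weights: List[float], global_batch: List[Sample], lr: float) -> List[float]:
    """One synchronous step: each replica computes a gradient on its own shard,
    the gradients are averaged (standing in for all-reduce), and every replica
    applies the same averaged update."""
    shards = split(global_batch, len(weights))
    local_grads = [mse_grad(w, shard) for w, shard in zip(weights, shards)]
    avg_grad = sum(local_grads) / len(local_grads)
    return [w - lr * avg_grad for w in weights]


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # 8 samples; the true w is 3
replicas = [0.0] * 4                               # 4 identical model copies
for _ in range(50):
    replicas = mirrored_step(replicas, data, lr=0.01)
print(replicas)  # all four copies hold the same value, close to 3.0
```

Because each shard here has the same size, the average of the four per-replica gradients equals the gradient over the whole global batch. That is why splitting the batch doesn’t change what the model learns from it.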

Beyond increasing our global batch size to take full advantage of the extra processing power, we can train just like we did before!

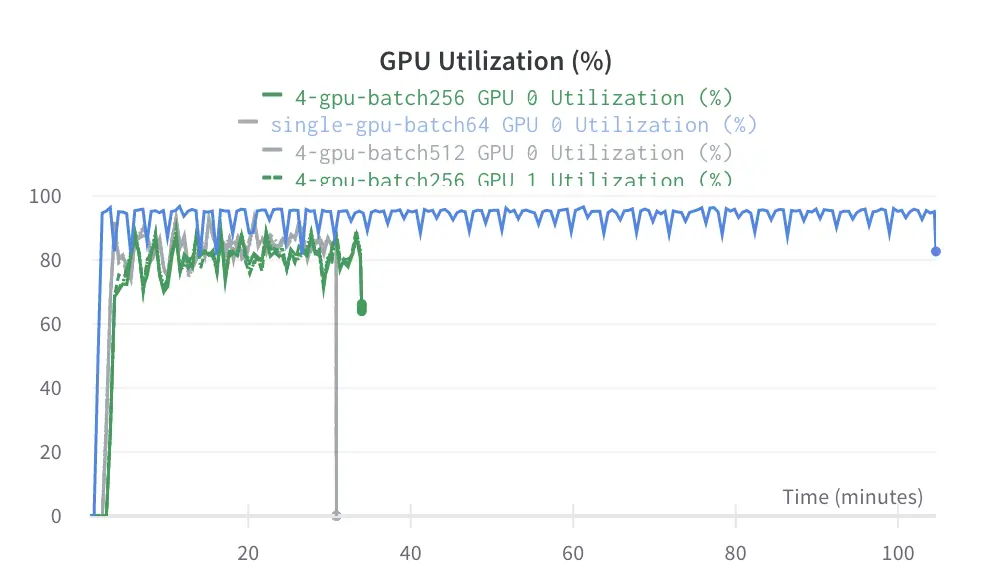

Notice how this chart shows four GPU workers for the gray and green runs, while the blue run has a single line.

Our task, therefore, was to make each batch large enough to keep all four GPUs busy without overrunning GPU memory. Because each batch is larger, each epoch has fewer steps. There’s still just one model being trained, and the workers share their gradients, so the model still learns from all of the data.
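The step counts quoted for each run follow directly from the dataset size. Keras runs one step per batch, and image_dataset_from_directory keeps the final partial batch, so the count rounds up. Here is a minimal sketch of that arithmetic; the constant and function names are just for illustration.

```python
import math

TRAIN_IMAGES = 40_930  # training-set size from the parameters list
EPOCHS = 50


def steps_per_epoch(n_images: int, batch_size: int) -> int:
    """One step per batch; a final partial batch still counts as a step."""
    return math.ceil(n_images / batch_size)


for batch_size in (64, 256, 512):
    steps = steps_per_epoch(TRAIN_IMAGES, batch_size)
    print(f"batch {batch_size}: {steps} steps/epoch, {steps * EPOCHS} steps in total")

# Regardless of batch size, every image is still seen once per epoch.
print("images processed per run:", TRAIN_IMAGES * EPOCHS)
```

Quadrupling the global batch cuts the number of optimizer steps by roughly four, while the total number of images processed stays the same.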

The process for training on multiple machines is slightly different, however, and requires use of MultiWorkerMirroredStrategy. Learn more at the TensorFlow site.

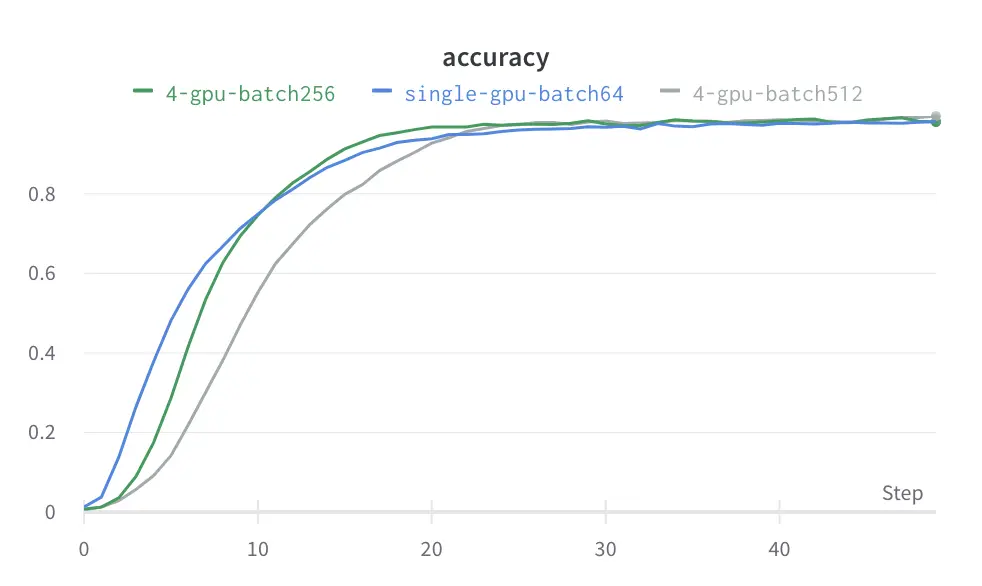

Evaluating Models

So, we can see that more GPUs and parallelization make training faster, but that’s not the only thing to think about when choosing a technique. Which models perform best, and how much do they cost to train?

| Model | Validation Accuracy | Total Training Time | Price to Train |
|---|---|---|---|
| Single GPU | 0.8014 | 1 hr 44 min 38 sec (6,278 s) | $7.46 |
| 4 GPUs, 256 samples per batch | 0.8365 | 33 min 56 sec (2,036 s) | $9.68 |
| 4 GPUs, 512 samples per batch | 0.8519 | 30 min 49 sec (1,849 s) | $8.79 |
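As a rough sanity check on the cost column, this sketch divides each price by its wall-clock hours to get an implied hourly rate, then compares time and cost against the single-GPU baseline. It only reuses the table’s numbers. It assumes the price scales with run time and makes no claim about Saturn Cloud’s actual rate card; implied_hourly_rate is a hypothetical helper.

```python
# (configuration, total training seconds, price in USD), copied from the table above
runs = [
    ("Single GPU", 6278, 7.46),
    ("4 GPUs, 256 per batch", 2036, 9.68),
    ("4 GPUs, 512 per batch", 1849, 8.79),
]


def implied_hourly_rate(price_usd: float, seconds: float) -> float:
    """Price divided by wall-clock hours: the effective rate each run paid."""
    return price_usd / (seconds / 3600)


_, base_seconds, base_price = runs[0]
for label, seconds, price in runs:
    print(
        f"{label}: ${implied_hourly_rate(price, seconds):.2f}/hour implied, "
        f"{base_seconds / seconds:.2f}x faster, "
        f"{price / base_price - 1:+.0%} cost vs. single GPU"
    )
```

With these figures, the implied rate for the 4-GPU machine (about $17.11 to $17.12 per hour) is roughly four times the single-GPU rate (about $4.28). Because the 4-GPU runs finish about 3.1 to 3.4 times sooner, they end up costing 18 to 30% more per run rather than four times as much.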

Meaning…

You can get MUCH faster training, better validation accuracy, and only a modest cost increase (18 to 30% more per run in our tests) by adding a couple of lines to your code!

If you aren’t considering multiple GPUs for big training tasks, why not?

Conclusions

I hope this has demonstrated how powerful and valuable multi-GPU training can be for your own deep learning work! You can save time, iterate faster, and produce more value for your organization by adding this easy feature to your process.

You might be thinking, “Awesome, so how do I get these great GPUs and get started?” Saturn Cloud has all the tools you need! Sign up for our free Hosted service today, and you can be running free GPUs immediately. If you need more resources than our free tier provides, you can sign up for Hosted Pro and pay for only what you use.

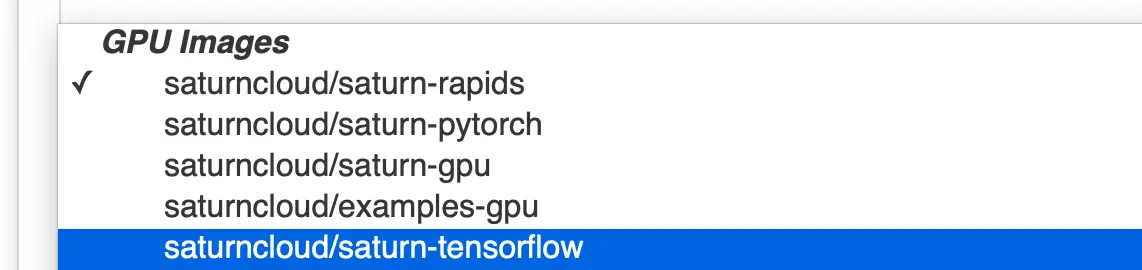

Get Started in Saturn Cloud

To run TensorFlow inside Saturn Cloud Hosted, open a new Jupyter Server and select one of the GPU hardware types. Choose the image named saturn-tensorflow for the most recent date available. Start your Jupyter Server, and it will be ready to use TensorFlow!

Side Note: Memory

One thing you might want to keep an eye on when working with TensorFlow on GPUs is memory allocation. By default, TensorFlow reserves nearly all of the memory on every GPU it can see. That can be a pain if you’re iterating on many models or running several jobs at once. If you’d rather have TensorFlow allocate memory only as it’s needed, run the chunk below before your training code.

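```python
import tensorflow as tf

gpus = tf.config.list_physical_devices('GPU')
if gpus:
    try:
        # Grow GPU memory usage on demand instead of reserving it all up front.
        for gpu in gpus:
            tf.config.experimental.set_memory_growth(gpu, True)
        logical_gpus = tf.config.experimental.list_logical_devices('GPU')
        print(len(gpus), "Physical GPUs,", len(logical_gpus), "Logical GPUs")
    except RuntimeError as e:
        # Memory growth must be set before the GPUs have been initialized.
        print(e)
```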


Photo by Vincent van Zalinge on Unsplash


Saturn Cloud provides customizable, ready-to-use cloud environments for collaborative data teams.

Try Saturn Cloud and join thousands of users moving to the cloud without having to switch tools.