Building Models with Saturn Cloud and Deploying via Nebius Token Factory

Saturn Cloud now runs on Nebius AI Cloud, giving teams access to bare-metal NVIDIA H100, H200, GB200, and GB300 GPUs with InfiniBand networking – without the operational overhead of managing raw Kubernetes.

This integration pairs Nebius’s hardware layer (HGX platforms, NDR400 InfiniBand, high-throughput storage) with Saturn Cloud’s orchestration (automated provisioning, environment management, cost governance). Once training is complete, models ship directly to Nebius Token Factory for production inference.

This article covers the architecture and walks through the setup process step by step.

The Integrated Architecture: Saturn Cloud + Nebius

The performance of any ML stack is bounded by its underlying compute and networking. Nebius supplies the GPU infrastructure (NVIDIA H100, H200, and GB200, including GB200 NVL72 rack-scale systems), along with networking, storage, and the supporting software layer. Saturn Cloud runs on top of this hardware as a fully managed MLOps platform that handles hardware management, Kubernetes setup, automated scaling, and cost control.

Infrastructure Layer: Nebius supplies NVIDIA HGX H100/H200 platforms connected through a non-blocking Clos topology over InfiniBand NDR400.

Orchestration Layer: Saturn Cloud manages automated K8s namespace isolation, persistent mounts for shared filesystems and S3-compatible object storage, and pre-warmed node pools.

Serving Layer: Nebius Token Factory provides OpenAI-compatible endpoints with speculative decoding kernels optimized for the underlying hardware.

This separation of concerns lets each layer scale and evolve independently, so models move from training to inference without additional operational overhead.

The Infrastructure Foundation (Nebius)

At the hardware layer, Nebius offers NVIDIA GB200 NVL72 and HGX B300 systems with NVIDIA Quantum-X800 InfiniBand networking. Its 800 Gbps bandwidth is critical for large-scale parameter synchronization during distributed training. For infrastructure engineers, moving from standard Ethernet to InfiniBand with RDMA (Remote Direct Memory Access) changes how data moves across the cluster. Network adapters transfer data directly between the memory of different nodes without involving the host CPU or operating system kernel, which cuts latency and frees CPU cycles.

| Component | Technical Specification | Functional Impact for AI Workloads |
|---|---|---|
| GPU Architecture | NVIDIA Blackwell (GB200, HGX B300), Hopper (H100, H200) | High-density compute for LLM training and high-throughput inference. |
| Networking fabric | 800 Gbps NVIDIA Quantum-X800 InfiniBand | Minimal latency for NCCL collectives (AllReduce, AllGather). |
| Compute interconnect | NVLink Switch System | High-speed GPU-to-GPU communication within and between nodes. |
| Storage | Up to 4 PB, 1 TB/s aggregate throughput | Minimizes I/O wait times during dataset loading and checkpointing. |
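The sketch below makes the bandwidth figure concrete by estimating how long a bandwidth-optimal ring AllReduce spends on the wire. In a ring AllReduce across N ranks, each rank sends and receives 2(N - 1)/N times the message size. This is an idealized model under stated assumptions:

- each rank gets the full link rate;
- latency and protocol overhead are ignored;
- the 70B-parameter BF16 gradient and the 32-rank job are hypothetical.

```python
def ring_allreduce_seconds(message_bytes: float, num_ranks: int, link_gbps: float) -> float:
    """Idealized communication time of a ring AllReduce.

    Each rank sends (and receives) 2 * (N - 1) / N * message_bytes over its link.
    Latency terms and protocol overhead are ignored.
    """
    if num_ranks < 2:
        return 0.0  # nothing to synchronize
    bytes_per_rank = 2 * (num_ranks - 1) / num_ranks * message_bytes
    link_bytes_per_second = link_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    return bytes_per_rank / link_bytes_per_second


# Hypothetical example: 70B parameters in BF16 (2 bytes each) across 32 ranks
grad_bytes = 70e9 * 2
for gbps in (100, 400, 800):
    t = ring_allreduce_seconds(grad_bytes, num_ranks=32, link_gbps=gbps)
    print(f"{gbps:>4} Gbps: {t:.2f} s per full-gradient AllReduce")
```

In this model, doubling link bandwidth halves the estimate. That is why the interconnect can set the pace of data-parallel training at scale. Real NCCL runs combine NVLink within a node and InfiniBand between nodes, so measured timings will differ.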

The Orchestration Layer: Saturn Cloud

Saturn Cloud is the orchestration engine that automates the provisioning of ephemeral, GPU-enabled development nodes. If you manage a raw K8s cluster on Nebius without Saturn Cloud, you must:

- Provision GPU clusters and Kubernetes pods manually

- Orchestrate distributed training and scaling via raw Slurm or complex K8s manifests

- Set up security and governance, including IAM, SSO, and RBAC

- Implement environment reproducibility and experiment tracking from scratch

Saturn Cloud integrates core Kubernetes services such as the cluster-autoscaler, Cilium for network encryption, and the NVIDIA GPU Operator. The GPU Operator installs and manages the GPU drivers, device plugin, and monitoring components that GPU workloads need. These components come preconfigured, which significantly reduces initial setup time.

How Does Model Development Work in the Saturn Cloud MLOps Layer?

1. Build and iterate on models with ready-to-use development workspaces

If you manually manage a raw K8s cluster on Nebius, you must handle:

- Manual NVIDIA device plugin configuration and XID error monitoring

- Complex Persistent Volume Claim (PVC) mapping for multi-node training

- Writing custom YAML manifests for every experiment

Saturn Cloud orchestrates raw GPU nodes using a proprietary controller that interfaces with Nebius’s API. For example, a typical infrastructure spec for a Saturn Cloud workspace on Nebius might look like this in the configuration layer:

```yaml
# Infrastructure specification example
instance_type: "nb.h100.8x"  # Nebius H100 8-GPU node
disk_size: "2TiB"
image: "saturn-huggingface-pytorch-2.4:latest"
shared_volumes:
  - name: "model-checkpoints"
    mount_path: "/home/jovyan/project/checkpoints"
```

This lets you instantly provision preconfigured development environments like Jupyter, VS Code, or RStudio – backed by prebuilt Conda/Docker images. The Saturn Cloud image builder handles image creation and distribution within the cluster, eliminating the need to manually distribute Docker images to every researcher.

These preconfigured workspaces attach seamlessly to data sources (object storage, databases), giving you consistent access across runs. Access to Nebius shared filesystems and object storage is managed via IAM service accounts, ensuring that data-plane security is maintained without hardcoding credentials in notebooks.

2. Execute training jobs that scale autonomously and consistently

Saturn Cloud supports Dask, PyTorch Distributed Data Parallel (DDP), and Ray for distributing workloads across the Nebius InfiniBand fabric. Workspaces and jobs share the same images and environment definitions, so code written during exploratory work runs the same way in training jobs and production.

For example, when you trigger a multi-node job, Saturn Cloud performs the following operations:

- Node Provisioning: Spins up N H100 nodes via the Nebius API.

- Network Setup: Configures the NCCL (NVIDIA Collective Communications Library) environment variables to ensure optimal throughput over the high-speed interconnect.

- Checkpointing: Links the training process to a high-performance filesystem (such as Lustre or specialized S3 buckets). If a node fails, training can resume from the most recent checkpoint instead of starting over.
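Resuming after a node failure only works if the training script looks for its latest checkpoint at startup. Below is a minimal sketch of that lookup. It assumes a hypothetical layout in which each checkpoint is a directory named `step_<N>` under the shared checkpoint mount. A checkpoint counts as complete only once a `COMPLETE` marker file has been written. The helper names are illustrative, not part of Saturn Cloud.

```python
import re
from pathlib import Path
from typing import Optional

# Hypothetical naming convention: <root>/step_<N>/ containing a COMPLETE marker file
CHECKPOINT_PATTERN = re.compile(r"^step_(\d+)$")


def latest_complete_checkpoint(root: Path) -> Optional[Path]:
    """Return the highest-step checkpoint directory that finished writing."""
    if not root.is_dir():
        return None
    best_step, best_path = -1, None
    for entry in root.iterdir():
        match = CHECKPOINT_PATTERN.match(entry.name)
        # Skip unrelated files and checkpoints that were interrupted mid-write
        if not (match and entry.is_dir() and (entry / "COMPLETE").exists()):
            continue
        step = int(match.group(1))
        if step > best_step:
            best_step, best_path = step, entry
    return best_path


def mark_complete(checkpoint_dir: Path) -> None:
    """Call after all shards are saved so partial checkpoints are never resumed."""
    (checkpoint_dir / "COMPLETE").touch()
```

At startup, a script could call `latest_complete_checkpoint(Path("/home/jovyan/project/checkpoints"))`. It would load from the returned directory, or start fresh if the result is `None`. The marker file guards against resuming from a checkpoint that a failed node left half-written.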

Consider this example Saturn Cloud job YAML:

```yaml
type: job
spec:
  name: "distributed-training-run"
  image: "saturncloud/saturn-python:2024.12.01"
  # One process per GPU on each node; Saturn Cloud injects the SATURN_JOB_* variables
  command: "torchrun --nnodes=$SATURN_JOB_COUNT --node_rank=$SATURN_JOB_RANK --nproc_per_node=8 --master_addr=$SATURN_JOB_LEADER train.py"
  instance_type: "8xH100"
  instance_count: 4
  git_repositories:
    - url: "git@github.com:ai-team/llm-training.git"
      reference: "main"
```
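A multi-node `torchrun` launch depends on per-node values that Saturn Cloud injects as environment variables. The sketch below reproduces that launch logic in Python, which can help when debugging rendezvous failures. It relies on several assumptions:

- `SATURN_JOB_COUNT`, `SATURN_JOB_RANK`, and `SATURN_JOB_LEADER` are set as this article describes;
- each node has 8 GPUs, matching `8xH100`;
- rendezvous uses torchrun's default port 29500.

The function name is hypothetical.

```python
import os
import shlex
from typing import List, Mapping

REQUIRED_VARS = ("SATURN_JOB_COUNT", "SATURN_JOB_RANK", "SATURN_JOB_LEADER")


def build_torchrun_command(
    env: Mapping[str, str],
    script: str = "train.py",
    gpus_per_node: int = 8,
    master_port: int = 29500,
) -> List[str]:
    """Build a static-rendezvous torchrun invocation from Saturn Cloud job variables."""
    return [
        "torchrun",
        f"--nnodes={int(env['SATURN_JOB_COUNT'])}",
        f"--node_rank={int(env['SATURN_JOB_RANK'])}",   # unique per node, 0 = leader
        f"--nproc_per_node={gpus_per_node}",             # one process per GPU
        f"--master_addr={env['SATURN_JOB_LEADER']}",
        f"--master_port={master_port}",
        script,
    ]


if __name__ == "__main__" and all(v in os.environ for v in REQUIRED_VARS):
    # Print the command this node would run; subprocess.run(cmd) would launch it
    print(shlex.join(build_torchrun_command(os.environ)))
```

`--nproc_per_node` matters because torchrun defaults to one process per node, which would leave seven of the eight GPUs idle. Each node also needs its own `--node_rank` so the static rendezvous can tell the nodes apart.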

3. Bridge development-to-production gaps

The handoff is managed via a CI/CD trigger. Saturn Cloud exports the model weights (typically in Safetensors or GGUF format) and the associated metadata to the Token Factory registry. The deployment manifest specifies:

- Quantization parameters (e.g., FP8, INT8, or AWQ).

- Batching strategy (Continuous Batching via vLLM).

- Scaling limits (Min/Max active replicas).
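The article does not specify the manifest format Token Factory expects. What follows is a hypothetical Python representation of the three settings listed above. It includes the kind of consistency check a CI/CD step could run before triggering a deployment. The field names, the allowed quantization values, and the dictionary output are illustrative assumptions, not the Token Factory schema.

```python
from dataclasses import asdict, dataclass

# Illustrative set drawn from the options mentioned above; not an official list
SUPPORTED_QUANTIZATION = {"none", "fp8", "int8", "awq"}


@dataclass(frozen=True)
class DeploymentManifest:
    model_path: str               # e.g. an object storage URI holding Safetensors weights
    quantization: str = "fp8"
    batching: str = "continuous"  # continuous batching, as served by vLLM
    min_replicas: int = 1
    max_replicas: int = 4

    def validate(self) -> None:
        """Raise ValueError if the manifest is internally inconsistent."""
        if self.quantization not in SUPPORTED_QUANTIZATION:
            raise ValueError(f"unsupported quantization: {self.quantization}")
        if self.min_replicas < 0:
            raise ValueError("min_replicas must be non-negative")
        if self.max_replicas < max(self.min_replicas, 1):
            raise ValueError("max_replicas must be >= min_replicas and at least 1")

    def to_dict(self) -> dict:
        """Validate, then return a plain dict ready for serialization."""
        self.validate()
        return asdict(self)
```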

This integrated setup runs workloads as standard Kubernetes deployments or jobs, which keeps them portable across Kubernetes environments.

4. Handle Ops concerns (security, governance, monitoring, and cost control by default)

For infrastructure engineers, the “build” and “deploy” phases are just the beginning. The real work lies in maintaining the environment’s security posture and cost governance. There are two major concerns here:

Security: Saturn Cloud can be deployed into a private subnet within the Nebius VPC. This means that model weights never traverse the public internet during the training-to-inference handoff. Saturn Cloud handles creating VPC Peering connections and Security Group rules that restrict ingress to approved CIDR blocks.

Cost control: To prevent “runaway” GPU costs, Saturn Cloud implements “Auto-Stop” policies. If no GPU activity is detected on a notebook for a set period (defined in minutes), the platform snapshots the environment and de-provisions the Nebius node. This is a critical feature for managing the high TCO of H100 clusters.
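The idle check behind an auto-stop policy can be sketched as a function over GPU utilization samples. This is not Saturn Cloud's implementation. It assumes samples arrive as (timestamp in seconds, utilization percent) pairs, for example collected from NVIDIA DCGM or `nvidia-smi`. Anything at or below a small threshold counts as idle.

```python
from typing import Sequence, Tuple

Sample = Tuple[float, float]  # (unix timestamp in seconds, GPU utilization percent)


def should_auto_stop(
    samples: Sequence[Sample],
    now: float,
    idle_minutes: float,
    busy_threshold_pct: float = 5.0,
) -> bool:
    """Return True if no sample in the last `idle_minutes` exceeded the busy threshold.

    Returns False unless the samples cover the whole window, so a freshly
    started workspace with little history is not stopped prematurely.
    """
    window_start = now - idle_minutes * 60
    if not samples or min(ts for ts, _ in samples) > window_start:
        return False  # not enough history to cover the idle window
    recent = [util for ts, util in samples if ts >= window_start]
    return all(util <= busy_threshold_pct for util in recent)
```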

You can also set project-specific quotas to prevent overconsumption, and the usage dashboards provide visibility into spend per user or per project, integrating directly with internal cost allocation systems.
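Per-project spend visibility comes down to aggregating GPU-hours and comparing them against a budget. Below is a minimal sketch of that aggregation. The usage records, the hourly rate, and the quota values are placeholders, not Nebius pricing or a Saturn Cloud export format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class UsageRecord:
    user: str
    project: str
    gpus: int
    hours: float


def spend_by_project(records: Iterable[UsageRecord], usd_per_gpu_hour: float) -> Dict[str, float]:
    """Sum cost per project as gpus * hours * hourly rate."""
    totals: Dict[str, float] = defaultdict(float)
    for r in records:
        totals[r.project] += r.gpus * r.hours * usd_per_gpu_hour
    return dict(totals)


def over_quota(spend: Dict[str, float], quotas_usd: Dict[str, float]) -> Dict[str, float]:
    """Return projects whose spend exceeds their quota, mapped to the overage."""
    return {
        project: total - quotas_usd[project]
        for project, total in spend.items()
        if project in quotas_usd and total > quotas_usd[project]
    }
```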

5. Ship models from Saturn Cloud to Nebius Token Factory without handoff friction

Nebius Token Factory is a managed vLLM-based serving stack running on bare-metal NVIDIA Hopper/Blackwell hardware. Unlike standard REST wrappers, Token Factory is optimized separately for the two phases of LLM inference: prefill (processing the prompt) and decode (generating output tokens).

Speculative Decoding: Nebius uses smaller “draft” models to propose tokens, which the larger target model (e.g., Llama-3 70B) then verifies in a single pass. This significantly reduces per-token decode latency.
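A toy greedy version of speculative decoding illustrates the draft-then-verify loop. The two "models" here are plain Python functions that return the next token for a given prefix, which is a stand-in assumption. Production systems use sampling-based acceptance rules and verify all draft tokens in one batched forward pass of the large model.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # maps a token prefix to the next token


def speculative_decode(
    target: NextToken, draft: NextToken, prompt: List[int], max_new: int, k: int = 4
) -> List[int]:
    """Greedy speculative decoding; the output equals greedy decoding with `target`."""
    tokens = list(prompt)
    while len(tokens) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2. The target model checks each position (one batched pass in real systems)
        accepted: List[int] = []
        for tok in proposal:
            expected = target(tokens + accepted)
            if tok != expected:
                accepted.append(expected)  # replace the first mismatch with the target's token
                break
            accepted.append(tok)
        else:
            # All k draft tokens accepted: the target's next token comes for free
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
    return tokens[: len(prompt) + max_new]
```

Every emitted token is one the target model would have chosen, so output quality is unchanged. The speedup comes from accepting several draft tokens per expensive target pass whenever the draft agrees with the target.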

KV Cache Management (PagedAttention): The platform implements PagedAttention, which manages the KV cache the way an operating system manages virtual memory, storing it in fixed-size blocks allocated on demand. This largely eliminates memory fragmentation. It also allows much larger batch sizes without the “Out of Memory” (OOM) errors common with naive, contiguous KV cache allocation.
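The core idea of PagedAttention is a per-sequence block table that maps logical token positions to fixed-size physical blocks drawn from a shared pool. Below is a minimal sketch of that bookkeeping, covering allocation only with no attention math. The class name and the 16-token block size are illustrative assumptions, not vLLM's internals.

```python
from typing import Dict, List, Tuple


class PagedKVCache:
    """Toy block allocator: sequences take fixed-size blocks only when they need them."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.free_blocks: List[int] = list(range(num_blocks))
        self.block_tables: Dict[str, List[int]] = {}  # seq id -> physical block ids
        self.lengths: Dict[str, int] = {}             # seq id -> tokens stored

    def append_token(self, seq_id: str) -> None:
        """Reserve space for one more token, allocating a new block at a boundary."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:  # current block is full (or none exists yet)
            if not self.free_blocks:
                raise MemoryError("KV cache exhausted; a scheduler would preempt a sequence")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def physical_slot(self, seq_id: str, position: int) -> Tuple[int, int]:
        """Translate a logical token position into (block id, offset within block)."""
        block = self.block_tables[seq_id][position // self.block_size]
        return block, position % self.block_size

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)
```

Waste is limited to the unfilled tail of each sequence's last block. As a result, many more concurrent sequences fit in the same GPU memory than when each sequence reserves space for the maximum context length up front.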

The Serving/Execution Layer: Nebius Token Factory

Nebius Token Factory is a high-performance inference solution running on Nebius’s full-stack AI infrastructure. After model training, enterprises need a path to production that offers:

- real-time inference at scale;
- cost transparency with manageable TCO;
- predictable latency;
- security and compliance.

By abstracting the complexities of KV cache management, continuous batching, and speculative decoding, Token Factory allows engineers to focus on application logic.

How Nebius delivers inference at scale

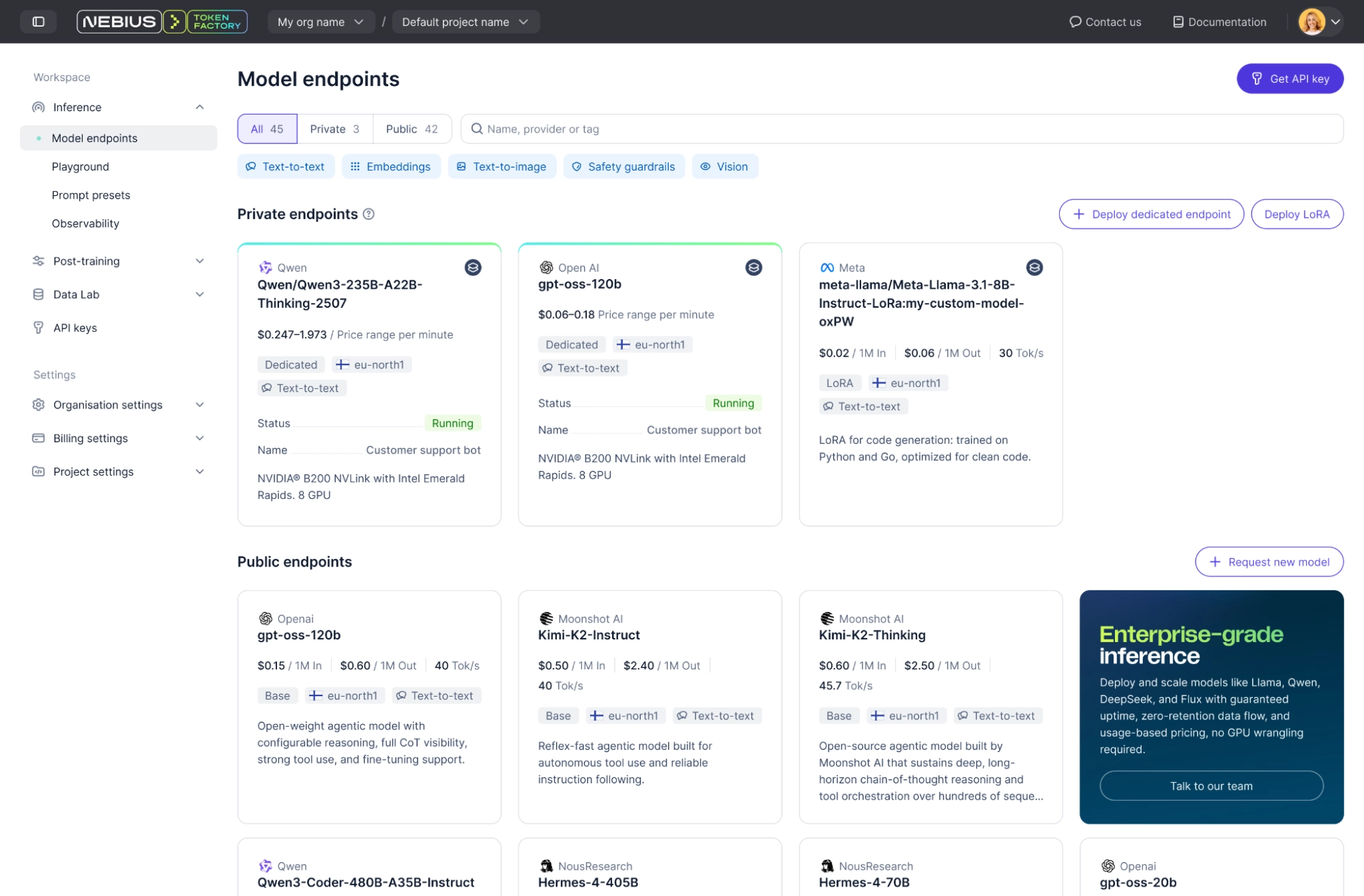

Support for open-source and custom models

Nebius Token Factory supports both popular open-source models and fully custom deployments. Users can instantly serve more than 60 pre-optimized open-source models (e.g., Llama, Qwen, and DeepSeek) or deploy their own models. Teams can upload LoRA adapters or full fine-tuned checkpoints directly via the API or dashboard, with the same performance guarantees applied to custom weights.

Global inference serving at scale

Nebius delivers high-throughput, low-latency inference by combining purpose-built hardware with serving-level optimizations. The architecture is disaggregated: prefill-specialized workers (which process long context histories) are separated from decode-specialized workers (which handle steady token generation). This significantly improves Time-To-First-Token (TTFT) and overall throughput.
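A simple latency model shows why TTFT and decode speed are tuned separately. The user-visible time for a response is roughly the time to first token plus the time per output token (TPOT) for each remaining token. The inputs in the example calls are hypothetical, not Nebius measurements.

```python
def response_latency_s(ttft_s: float, tpot_s: float, output_tokens: int) -> float:
    """End-to-end latency = TTFT + (output_tokens - 1) * time per output token."""
    if output_tokens < 1:
        raise ValueError("output_tokens must be >= 1")
    return ttft_s + (output_tokens - 1) * tpot_s


# Hypothetical prefill-heavy request (long prompt, short answer)
print(response_latency_s(ttft_s=1.2, tpot_s=0.02, output_tokens=50))
# Hypothetical decode-heavy request (short prompt, long answer)
print(response_latency_s(ttft_s=0.2, tpot_s=0.02, output_tokens=1000))
```

Under these assumed numbers, the first request is dominated by prefill time and the second by decode time. Running the two phases on separate worker pools lets each pool be scaled for its own bottleneck.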

Nebius’s multi-region routing, speculative decoding, and EU/US data center presence support scalable, resilient performance. The platform can handle workloads exceeding hundreds of millions of tokens per minute with a 99.9% uptime SLA.
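Translating the 99.9% uptime SLA into allowed downtime is simple arithmetic on the SLA percentage and the measurement period. The calculation below assumes a 30-day month; actual SLA terms may define the period and any exclusions differently.

```python
def allowed_downtime_minutes(sla_pct: float, period_days: float = 30) -> float:
    """Maximum downtime permitted by an availability SLA over the period."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (1 - sla_pct / 100)


for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% -> {allowed_downtime_minutes(sla):.1f} min per 30 days")
```

At 99.9%, this works out to about 43 minutes of downtime per 30-day month.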

Plug and play inference APIs with transparent billing

The API is fully OpenAI-compatible, allowing for seamless integration with existing tools and frameworks.

Example: Production Inference Request via Nebius Token Factory:

```python
import os

from openai import OpenAI
from pydantic import BaseModel


# Illustrative output schema; replace with the fields your application needs
class LogAnalysis(BaseModel):
    anomalous: bool
    pattern: str


# Authenticate with the API key stored in an environment variable
client = OpenAI(
    base_url="https://api.tokenfactory.nebius.com/v1/",
    api_key=os.environ["NEBIUS_API_KEY"],
)

# Request structured output via an OpenAI-style JSON schema response format
completion = client.chat.completions.create(
    model="meta-llama/Meta-Llama-3.1-70B-Instruct",
    messages=[{"role": "user", "content": "Analyze the log for anomalous patterns."}],
    temperature=0.1,
    response_format={
        "type": "json_schema",
        "json_schema": {"name": "log_analysis", "schema": LogAnalysis.model_json_schema()},
    },
)

# choices is a list; take the first completion
print(completion.choices[0].message.content)
```

Dedicated endpoints offer reserved capacity and volume discounts, providing a predictable TCO (Total Cost of Ownership) for large-scale production deployments.
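Whether a dedicated endpoint beats per-token pricing depends on sustained volume. Below is a minimal break-even sketch. Both prices are placeholders you would replace with actual rates, not Nebius pricing. The comparison also ignores the throughput ceiling of the reserved capacity.

```python
def breakeven_tokens_per_hour(dedicated_usd_per_hour: float, usd_per_million_tokens: float) -> float:
    """Hourly token volume above which a fixed-price dedicated endpoint is cheaper."""
    return dedicated_usd_per_hour / usd_per_million_tokens * 1_000_000


def cheaper_option(
    tokens_per_hour: float, dedicated_usd_per_hour: float, usd_per_million_tokens: float
) -> str:
    """Compare one hour of dedicated capacity with the same volume billed per token."""
    per_token_cost = tokens_per_hour / 1_000_000 * usd_per_million_tokens
    return "dedicated" if dedicated_usd_per_hour < per_token_cost else "per-token"
```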

Enterprise-grade security, compliance, and reliability

Nebius’s inference services are engineered for reliability with clear uptime guarantees and SLAs. The platform holds SOC 2 Type II and ISO 27001 certifications and supports HIPAA compliance, providing the assurance that sensitive workloads require.

From sign-up to inference on two platforms: how it works

To illustrate the transition from a raw Kubernetes environment to a production-grade AI pipeline, let us examine the specific technical steps and configurations required to integrate Saturn Cloud with Nebius Token Factory.

Step 1: Onboarding and Automated Infrastructure Provisioning

The lifecycle begins with registering the organization’s tenancy in the Saturn Cloud Installation Manager. This process generates the necessary provisioning credentials and a terraform.tfvars file that acts as the source of truth for the environment configuration. Infrastructure engineers then execute the Terraform plan, which provisions the following components on Nebius AI Cloud:

| Infrastructure Component | Managed Resource | Operational Role |
|---|---|---|
| MK8S Cluster | nebius_mk8s_v1_cluster | High-availability Kubernetes control plane. |
| System Node Pool | nebius_mk8s_v1_node_group | Hosts Saturn Cloud control plane and microservices. |
| Workload Node Pools | nebius_mk8s_v1_node_group | Dynamic pools for CPU and GPU (H100/GB200) tasks. |
| VPC Networking | nebius_vpc_v1_network | Isolated network with Security Groups for egress control. |
| Storage Layer | nebius_nbs_v1_disk | Persistent volumes for user workspaces and dataset staging. |
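Applying the generated configuration follows the standard Terraform CLI workflow: init, plan with the variables file, then apply the saved plan. Below is a sketch of a small wrapper around those steps. The plan file name and the wrapper functions are assumptions. By default it only prints the commands, so they can be reviewed before anything is applied.

```python
import subprocess
from typing import List


def terraform_steps(var_file: str = "terraform.tfvars", plan_file: str = "tfplan") -> List[List[str]]:
    """Standard init -> plan -> apply sequence using a saved plan file."""
    return [
        ["terraform", "init"],
        ["terraform", "plan", f"-var-file={var_file}", f"-out={plan_file}"],
        ["terraform", "apply", plan_file],  # applies exactly what the saved plan showed
    ]


def run_steps(workdir: str, dry_run: bool = True) -> None:
    """Print each command; execute it in `workdir` only when dry_run is False."""
    for argv in terraform_steps():
        print("$", " ".join(argv))
        if not dry_run:
            subprocess.run(argv, cwd=workdir, check=True)
```

Terraform loads `terraform.tfvars` automatically, so the explicit `-var-file` flag mainly documents intent. Applying a saved plan file does not prompt for approval, which makes the plan step the natural review point.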

Step 2: Provisioning the Integrated Development Environment (IDE)

Once the Saturn Cloud control plane is initialized via the saturn-helm-operator, you can launch GPU-accelerated workspaces directly from the UI or API. These workspaces are Kubernetes Pods that mount Nebius persistent disks in the user’s home directory, ensuring data persistence across pod restarts and environment upgrades.

Technical Specifications for Workspaces:

- Base Images: Pre-built with NVIDIA CUDA 13.0, PyTorch, and RAPIDS, or custom images from any registry.

- Networking: Cilium-backed network policies for intra-cluster security and encryption.

- Storage: Attachment of Nebius Shared Filesystems (via InfiniBand) for high-performance data access during model exploration.

- IDE Support: Native JupyterLab, RStudio, or SSH access for VS Code and PyCharm.

Step 3: Building and Distributing Models with Resource Recipes

As you iterate on models, Saturn Cloud captures the environment state in resource recipes – YAML files that define the image, extra packages, git repositories, and hardware requirements. This allows for the exact replication of a development environment for a formal training job, ensuring that “dependency drift” is eliminated.

Example Saturn Cloud Job Recipe:

```yaml
type: job
spec:
  name: "llm-distributed-finetuning"
  image: "saturncloud/saturn-python:2024.12.01"
  # One process per GPU on each node; Saturn Cloud injects the SATURN_JOB_* variables
  command: "torchrun --nnodes=$SATURN_JOB_COUNT --node_rank=$SATURN_JOB_RANK --nproc_per_node=8 --master_addr=$SATURN_JOB_LEADER multinode.py --batch_size 64"
  instance_type: "8xH100"
  instance_count: 4
  git_repositories:
    - url: "git@github.com:ai-org/llm-training.git"
      reference: "production-v1.2"
  extra_packages:
    pip: ["transformers", "peft", "deepspeed", "accelerate"]
  working_directory: "/home/jovyan/workspace/training"
```
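You can check that a job runs with the same environment as the workspace it came from by comparing fingerprints of their recipes. Below is a minimal sketch. It assumes both recipes have already been parsed into Python dicts, for example with a YAML loader. The fingerprint helper and the choice of environment-defining fields are hypothetical, not part of Saturn Cloud.

```python
import hashlib
import json
from typing import Any, Dict

# Fields that determine the software environment; hardware and naming are excluded
ENV_FIELDS = ("image", "extra_packages", "git_repositories")


def environment_fingerprint(recipe: Dict[str, Any]) -> str:
    """Stable SHA-256 over the environment-defining parts of a recipe's spec."""
    spec = recipe.get("spec", {})
    env = {field: spec.get(field) for field in ENV_FIELDS}
    # sort_keys makes dict ordering irrelevant; list order still counts
    canonical = json.dumps(env, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

A job and a workspace that differ only in name or instance type produce the same fingerprint. A changed package list or image tag does not. Package lists are compared in order, so sort them first if order carries no meaning.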

Step 4: Executing Multi-Node Distributed Training

For large-scale models, single-GPU training is insufficient. Saturn Cloud facilitates multi-node training by coordinating Kubernetes Pods and injecting coordination metadata. When a job is launched with multiple instances, Saturn Cloud automatically populates the SATURN_JOB_LEADER (the DNS address of node 0) and SATURN_JOB_RANK environment variables.

Engineers utilize these variables within their training scripts to initialize the distributed backend (NCCL). In the Nebius environment, it is critical to use the specialized NCCL topology file (nccl-topo-h100-v1.xml) to ensure that parameter synchronization follows the optimized InfiniBand path rather than the default virtualized network stack.
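The sketch below turns those variables into process-group arguments for a script that starts one process per GPU itself. It computes each process's global rank and world size from the Saturn Cloud variables. It also sets `NCCL_TOPO_FILE`, the NCCL environment variable for loading a topology XML. Several details are assumptions:

- the topology file path;
- the 8-GPUs-per-node default;
- the rendezvous port;
- the helper names.

Check the Nebius documentation for where the topology file actually lives. Under torchrun, `init_process_group(backend="nccl")` can instead read the `RANK`, `WORLD_SIZE`, and `MASTER_ADDR` values that torchrun sets.

```python
import os
from typing import Dict, Mapping


def distributed_settings(
    env: Mapping[str, str], local_rank: int, gpus_per_node: int = 8, port: int = 29500
) -> Dict[str, object]:
    """Derive init_process_group arguments from Saturn Cloud job variables."""
    node_rank = int(env["SATURN_JOB_RANK"])
    num_nodes = int(env["SATURN_JOB_COUNT"])
    return {
        "backend": "nccl",
        "init_method": f"tcp://{env['SATURN_JOB_LEADER']}:{port}",  # node 0 hosts the store
        "rank": node_rank * gpus_per_node + local_rank,              # global rank across nodes
        "world_size": num_nodes * gpus_per_node,
    }


def init_distributed(local_rank: int, topo_file: str = "/etc/nccl/nccl-topo-h100-v1.xml") -> None:
    """Hypothetical topology path; NCCL reads NCCL_TOPO_FILE when communicators are created."""
    os.environ.setdefault("NCCL_TOPO_FILE", topo_file)  # keep any user-provided value
    import torch  # imported lazily so distributed_settings works without PyTorch
    import torch.distributed as dist

    torch.cuda.set_device(local_rank)
    dist.init_process_group(**distributed_settings(os.environ, local_rank))
```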

Step 5: Packaging and Shipping Artifacts to Token Factory

Once training is complete, model weights and checkpoints are typically stored in Nebius Object Storage or exported to a version control system. These artifacts are then “shipped” to Nebius Token Factory for production hosting. The transition uses standardized formats like HuggingFace Safetensors or GGUF.
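Before shipping, it helps to record exactly which files make up a release so the serving side can verify them. Below is a minimal sketch that walks a checkpoint directory and builds a JSON manifest of Safetensors/GGUF files with their sizes and SHA-256 digests. The manifest format and file name are assumptions, not something Token Factory requires. Config and tokenizer files could be added the same way.

```python
import hashlib
import json
from pathlib import Path
from typing import Dict, List

WEIGHT_SUFFIXES = {".safetensors", ".gguf"}


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash in 1 MiB chunks so multi-gigabyte shards never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(model_dir: Path) -> Dict[str, List[Dict[str, object]]]:
    """List every weight file under model_dir with its size and checksum."""
    files = [
        {"file": p.relative_to(model_dir).as_posix(), "bytes": p.stat().st_size, "sha256": sha256_of(p)}
        for p in sorted(model_dir.rglob("*"))
        if p.is_file() and p.suffix in WEIGHT_SUFFIXES
    ]
    return {"files": files}


def write_manifest(model_dir: Path, name: str = "manifest.json") -> Path:
    """Write the manifest next to the weights and return its path."""
    out = model_dir / name
    out.write_text(json.dumps(build_manifest(model_dir), indent=2))
    return out
```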

Deployment options in Token Factory:

- Shared endpoints: Cost-effective access to a pool of models for experimentation and low-volume applications.

- Dedicated endpoints: Single-tenant instances with guaranteed performance, reserved capacity, and custom autoscaling policies.

- LoRA adapters: Instant deployment of fine-tuned adapters on top of base models, reducing deployment latency and storage costs by up to 70%.
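The storage savings of LoRA adapters follow from their shape. Instead of updating a full d × k weight matrix, LoRA trains two low-rank factors, B (d × r) and A (r × k), so each adapted matrix adds r(d + k) parameters. The calculation below uses illustrative dimensions: an 8192 × 8192 projection at rank 16.

```python
def lora_params(d: int, k: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d x k weight matrix (B: d x r, A: r x k)."""
    return rank * (d + k)


d = k = 8192
full = d * k
adapter = lora_params(d, k, rank=16)
print(f"full matrix: {full:,} params, LoRA rank 16: {adapter:,} ({adapter / full:.2%})")
```

Here the adapter is well under 1% of the full matrix. Only these small factors need to be stored and loaded per fine-tune, while the base weights are shared.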

Step 6: Production Inference and Real-Time Optimization

Nebius Token Factory provides an OpenAI-compatible API for consuming models in production. Beyond simple request-response, the platform employs several architectural optimizations to maintain sub-second latency under load, such as:

- Speculative Decoding: Using a smaller “draft” model to predict tokens, which are then verified by the target model, reducing latency for interactive applications.

- Prefill/Decode Disaggregation: Running the “prefill” phase (processing the input prompt) and the “decode” phase (generating output tokens) on separate workers, with the KV cache handed off between them, to optimize memory bandwidth and compute utilization.

- Autoscaling: Scaling the number of dedicated workers based on concurrent request volume, ensuring that bursts in traffic do not impact latency targets.
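Concurrency-based autoscaling reduces to a small calculation: divide in-flight requests by the concurrency one replica can sustain within its latency target, round up, and clamp to the configured limits. Little's law (in-flight = arrival rate × time in system) connects request rate to concurrency. Below is a sketch with an assumed per-replica target, which in practice would come from load testing. It is not Token Factory's actual autoscaler.

```python
import math


def in_flight_from_rate(requests_per_s: float, avg_latency_s: float) -> float:
    """Little's law: average concurrency = arrival rate x average time in system."""
    return requests_per_s * avg_latency_s


def desired_replicas(
    in_flight_requests: float, target_per_replica: int, min_replicas: int, max_replicas: int
) -> int:
    """Replicas needed to keep per-replica concurrency at or below the target."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    needed = math.ceil(in_flight_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

For example, 50 requests/s at 2 s average latency means about 100 requests in flight. With a target of 32 per replica, that calls for 4 replicas, bounded by the configured min/max.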

```python
# Production inference request via Nebius Token Factory
import json
import os

from openai import OpenAI
from pydantic import BaseModel


# Illustrative output schema for entity extraction; adjust the fields to your use case
class TrialEntities(BaseModel):
    conditions: list[str]
    interventions: list[str]


# Authenticate with the API key stored securely in an environment variable
client = OpenAI(
    base_url="https://api.tokenfactory.nebius.com/v1/",
    api_key=os.environ["NEBIUS_API_KEY"],
)

# Request structured output via an OpenAI-style JSON schema response format
completion = client.chat.completions.create(
    model="meta-llama/Meta-Llama-3.1-70B-Instruct",
    messages=[{"role": "user", "content": "Extract entities from this clinical trial report."}],
    temperature=0.1,
    response_format={
        "type": "json_schema",
        "json_schema": {"name": "trial_entities", "schema": TrialEntities.model_json_schema()},
    },
)

# choices is a list; parse the first completion's JSON content
print(json.loads(completion.choices[0].message.content))
```

How the Saturn Cloud–Nebius Integration Functions

Each platform handles its own responsibilities while users experience it as one cohesive system. The integration:

- Reduces time-to-market with pre-configured K8s environments and streamlined deployments

- Provides cost visibility and governance through fine-grained quotas, usage dashboards, and transparent per-token inference pricing

- Avoids vendor lock-in on both ends – Saturn Cloud workloads are based on standard OCI containers and Token Factory uses an OpenAI-compatible API

- Supports reproducibility through Saturn Cloud’s resource recipes and versioned Docker images

Wrapping up

By offloading the infrastructure management of Kubernetes to Saturn Cloud and the hardware optimization to Nebius, infrastructure teams can focus on model performance rather than cluster administration. With a unified control plane and a bare-metal data plane, the path from git clone to a production API endpoint is significantly shorter.

The combination provides scalable, production-ready training pipelines, global SLA-backed inference delivery, and automated security with self-healing capabilities. Teams move faster, budgets stay predictable, and models run reliably.

Learn more about Saturn Cloud’s cloud integrations or explore the documentation to get started.
