Saturn Cloud on Neoclouds: Setting Up a Portable AI Development Platform

AI teams are increasingly moving to Neoclouds such as Nebius, Crusoe, and Vultr for better GPU availability and lower costs. However, infrastructure alone doesn’t get you to production, and teams can spend weeks on internal platform work before ML work begins.

Saturn Cloud bridges that gap as a single-tenant, portable AI platform that installs directly into your Neocloud account and runs on your managed Kubernetes cluster. You get enterprise-ready MLOps tooling (workspaces, scheduled jobs, model deployments, and team management) without building it yourself or giving up control of your infrastructure and data.

How It Works

Saturn Cloud installs into any Kubernetes cluster in your cloud account, whether you provision it yourself or let us do it for you.

We provide Terraform modules to set up your cluster and GPU node pools

We deploy Saturn Cloud via Helm, using a bootstrap API token

We handle auth, logging, monitoring, autoscaling, and updates

Under the hood, it’s Kubernetes-native. You bring your Git repos and Docker images. Saturn Cloud turns them into running workloads—Jupyter notebooks, jobs, model endpoints, whatever you need.

What Saturn Cloud Gives You

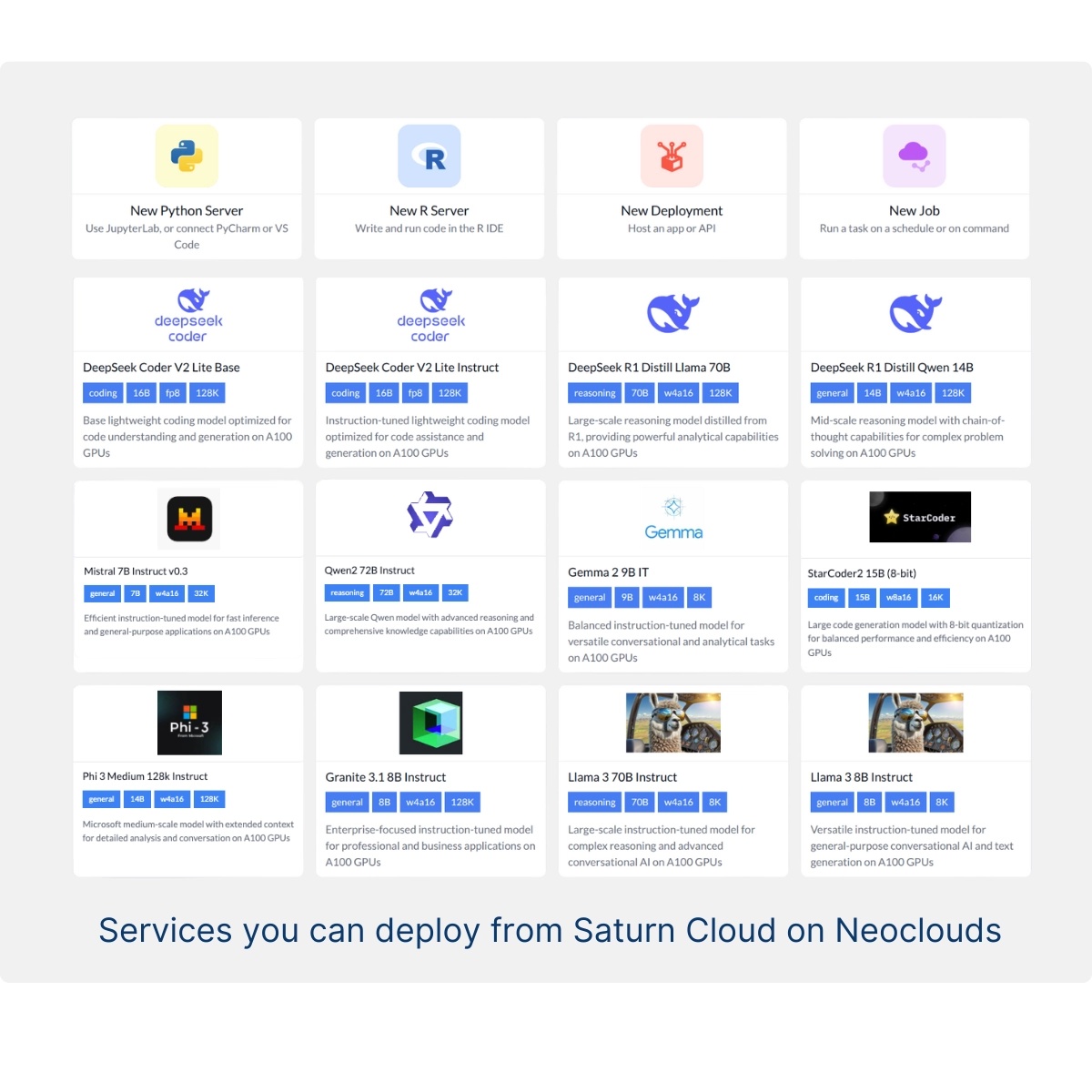

Saturn Cloud provides AI/ML teams with everything they need to get started immediately:

Development workspaces: JupyterLab or RStudio in the browser, or VS Code over SSH

Scheduled jobs for training and inference pipelines

Deployments for models, APIs, dashboards, or LLM apps

Full support for GPUs, shared filesystems, and CI/CD integration

All without relying on internal DevOps or platform engineering teams to build custom infrastructure from scratch.

What Saturn Installs

Saturn adds a small control plane to your cluster and wires up the standard pieces:

Authenticated ingress with per-project RBAC

Log collection and metrics (e.g., Fluent Bit shipping logs to your log store; Prometheus for metrics)

GPU enablement on designated node pools (NVIDIA GPU Operator where supported)

SSH proxy for IDE access (VS Code, PyCharm, Cursor)

Cluster autoscaler integration

Network policy via the cluster’s CNI (e.g., Cilium when available)
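The network-policy piece is usually expressed with standard Kubernetes NetworkPolicy objects, which a CNI such as Cilium enforces. Here is a minimal sketch of the common pattern: deny all ingress to a project namespace by default, then admit traffic only from the namespace running the ingress controller. It is not Saturn's actual policy set; the namespace names (`team-a`, `ingress-nginx`) and the port are hypothetical. The manifests are built as Python dicts and printed as JSON, which `kubectl apply -f` accepts.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that blocks all ingress to every pod in `namespace`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector = all pods in the namespace
            "policyTypes": ["Ingress"],  # Ingress listed with no rules = deny all ingress
        },
    }


def allow_from_namespace(namespace: str, source_ns: str, port: int) -> dict:
    """NetworkPolicy that admits TCP traffic on `port` from pods in `source_ns`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-from-{source_ns}", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {
                            # Label set automatically on namespaces (Kubernetes 1.21+)
                            "namespaceSelector": {
                                "matchLabels": {"kubernetes.io/metadata.name": source_ns}
                            }
                        }
                    ],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical project namespace, ingress namespace, and app port.
    policies = [
        default_deny_ingress("team-a"),
        allow_from_namespace("team-a", "ingress-nginx", 8888),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

NetworkPolicies are additive: a pod accepts traffic if any policy selecting it allows that traffic, so the second policy opens a narrow path through the default deny.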

Control components run on a dedicated system node pool. User workloads run on CPU and GPU node pools you define. Taints and tolerations keep system components isolated from training and serving workloads.
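The taint-and-toleration isolation above follows standard Kubernetes matching rules. A minimal sketch of those rules, assuming a hypothetical `dedicated=system:NoSchedule` taint on the system pool and `nvidia.com/gpu=present:NoSchedule` on the GPU pool (Saturn's actual taint keys are not stated here):

```python
from __future__ import annotations

from dataclasses import dataclass

HARD_EFFECTS = ("NoSchedule", "NoExecute")  # PreferNoSchedule is only a soft preference


@dataclass(frozen=True)
class Taint:
    key: str
    value: str = ""
    effect: str = "NoSchedule"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # empty key with operator Exists matches every taint
    operator: str = "Equal"  # "Equal" (the Kubernetes default) or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Kubernetes toleration-matching rules for a single taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if not tol.key:
        return tol.operator == "Exists"
    if tol.key != taint.key:
        return False
    return tol.operator == "Exists" or tol.value == taint.value


def can_schedule(taints: list[Taint], tolerations: list[Toleration]) -> bool:
    """A pod fits a node only if every hard taint on that node is tolerated."""
    hard = [t for t in taints if t.effect in HARD_EFFECTS]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


# Hypothetical pool taints and a training pod's tolerations.
SYSTEM_POOL = [Taint("dedicated", "system", "NoSchedule")]
GPU_POOL = [Taint("nvidia.com/gpu", "present", "NoSchedule")]
TRAINING_POD = [Toleration("nvidia.com/gpu", "Exists", effect="NoSchedule")]

if __name__ == "__main__":
    print("GPU pool:", can_schedule(GPU_POOL, TRAINING_POD))        # True
    print("system pool:", can_schedule(SYSTEM_POOL, TRAINING_POD))  # False
```

Tolerations only permit placement on a tainted pool; to steer a pod onto the GPU pool you would also set a nodeSelector or node affinity for that pool's labels.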

How Engineers Use It

Saturn exposes three Kubernetes-backed resource types:

Workspaces (Deployments): JupyterLab or RStudio in the browser, or connect over SSH from your IDE. Home directories are persistent. Shared filesystems can be mounted for team data.

Jobs (Jobs/CronJobs): One-off or scheduled runs. For multi-node training, Saturn provides environment wiring, allowing launchers like torchrun to coordinate workers.

Deployments (Deployments + HTTP): Serve LLMs, ML models, or dashboards behind authenticated endpoints. Roll forward or back by updating the image tag you control.
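For multi-node Jobs, the worker side typically needs only the standard environment variables that `torchrun` exports to each process (`RANK`, `LOCAL_RANK`, `WORLD_SIZE`, `MASTER_ADDR`, `MASTER_PORT`). Exactly what Saturn injects into Job pods is not spelled out here, so this is a sketch assuming torchrun is the launcher. It uses only the standard library; in a real training script, `torch.distributed.init_process_group()` reads the same variables through its default `env://` init method.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class DistEnv:
    rank: int         # global rank across all nodes
    local_rank: int   # rank within this node, usually the GPU index
    world_size: int   # total number of worker processes
    master_addr: str  # address of the rank-0 host
    master_port: int

    @property
    def is_main(self) -> bool:
        return self.rank == 0


def read_dist_env(env: Mapping[str, str] = os.environ) -> DistEnv:
    """Read torchrun's per-process variables, defaulting to a single process.

    The defaults let the same script run unchanged in a Workspace notebook.
    """
    return DistEnv(
        rank=int(env.get("RANK", "0")),
        local_rank=int(env.get("LOCAL_RANK", "0")),
        world_size=int(env.get("WORLD_SIZE", "1")),
        master_addr=env.get("MASTER_ADDR", "127.0.0.1"),
        master_port=int(env.get("MASTER_PORT", "29500")),
    )


def global_rank(node_rank: int, nproc_per_node: int, local_rank: int) -> int:
    """Rank numbering for a static layout with the same process count per node."""
    return node_rank * nproc_per_node + local_rank


if __name__ == "__main__":
    # Launched on each node with, for example (host is a placeholder):
    #   torchrun --nnodes=2 --nproc_per_node=8 --node_rank=<0 or 1> \
    #            --master_addr=<rank-0 host> --master_port=29500 train.py
    env = read_dist_env()
    print(f"rank {env.rank}/{env.world_size} (local {env.local_rank}), main={env.is_main}")
```

Defaulting to a single process means the code promoted from a Workspace to a Job does not need a separate code path for local runs.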

All resources can be created and exported as YAML recipes. Teams typically keep these in Git and have CI/CD create or update resources in Saturn alongside image builds.
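Since rolling a Deployment forward or back comes down to changing an image tag, a CI/CD step often just rewrites the tag in an image reference. A minimal sketch of that string handling, assuming references of the form `registry/repo:tag`; the registry, repository, and tags are hypothetical. A colon before the last `/` is treated as a registry port, not a tag.

```python
from __future__ import annotations


def split_image(ref: str) -> tuple[str, str]:
    """Split 'registry/repo:tag' into ('registry/repo', 'tag').

    Only a colon after the last '/' marks a tag, so the port in
    'registry.example.com:5000/team/app' is left alone. Digest references
    ('repo@sha256:...') are out of scope for this sketch.
    """
    if "@" in ref:
        raise ValueError(f"digest references are not handled: {ref}")
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        return ref[:colon], ref[colon + 1:]
    return ref, "latest"  # Docker's default tag when none is given


def with_tag(ref: str, tag: str) -> str:
    """Return the same image repository pinned to a different tag."""
    repo, _ = split_image(ref)
    return f"{repo}:{tag}"


def rollback_target(history: list[str]) -> str:
    """Image deployed before the current one; `history` is oldest-first."""
    if len(history) < 2:
        raise ValueError("no earlier image to roll back to")
    return history[-2]


if __name__ == "__main__":
    current = "registry.example.com:5000/team/llm-server:3f9c2ab"
    print(with_tag(current, "a71d0e4"))  # roll forward to a new build
    print(rollback_target([
        "registry.example.com:5000/team/llm-server:1b2c3d4",
        current,
    ]))
```

Tagging images with commit SHAs rather than a moving tag like `latest` keeps each roll forward or back reproducible.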

Typical First Workflow

Engineers usually start by creating a Workspace from a base image (or a team image), attaching a Git repository, and working in notebooks or over SSH from a local IDE. When the training code is ready, they promote it to a Job, including multi-node runs when required. Inference is packaged as a Deployment behind an authenticated endpoint. The YAML recipes for these resources live in Git, and CI/CD updates them alongside image builds.

Installation Overview

Cluster

Use the provider’s managed Kubernetes service. Create node pools for system, CPU, and GPU workloads. Configure storage classes as needed for persistent home directories and any shared filesystems.

Helm Install

Register your company with Saturn Cloud to obtain a short-lived (4-hour) bootstrap token. Install the saturn-helm-operator and pass the token. The operator:

Exchanges the bootstrap token for a long-lived token and keeps it refreshed

Creates required secrets, including TLS/SSL for ingress

Installs Saturn Cloud and supporting microservices
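The token handling above follows a common refresh-before-expiry pattern. A minimal sketch of the timing logic, assuming only the 4-hour bootstrap lifetime stated above; the 10-minute refresh margin is a hypothetical choice, and the operator's real refresh schedule is not documented here. One practical consequence: the Helm install needs to run within four hours of registering, or the bootstrap token will have expired.

```python
from datetime import datetime, timedelta, timezone

BOOTSTRAP_TTL = timedelta(hours=4)      # bootstrap token lifetime
REFRESH_MARGIN = timedelta(minutes=10)  # hypothetical safety margin


def bootstrap_token_valid(issued_at: datetime, now: datetime) -> bool:
    """The bootstrap token can be exchanged only before it expires."""
    return now < issued_at + BOOTSTRAP_TTL


def needs_refresh(expires_at: datetime, now: datetime,
                  margin: timedelta = REFRESH_MARGIN) -> bool:
    """Refresh early so a failed attempt can be retried before the token lapses."""
    return now >= expires_at - margin


if __name__ == "__main__":
    issued = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)
    print(bootstrap_token_valid(issued, issued + timedelta(hours=3)))  # True
    print(bootstrap_token_valid(issued, issued + timedelta(hours=5)))  # False
```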

Post-Install

Reset the admin password, sign in, and invite users. Optionally configure SSO (SAML/OIDC). Attach your container registry and any shared filesystems you plan to use.

Workloads are standard Kubernetes objects that run your container images, with code pulled from your Git repositories. This keeps them portable and reproducible.

Security and Networking

Each installation is single-tenant and runs in your own cloud account. Traffic is private by default, with public endpoints enabled only when explicitly configured and always authenticated. Secrets remain within your cluster (or are integrated with your external vault), while logs and metrics are routed to destinations you control. Access is enforced through per-project, per-resource RBAC, so teams get only the necessary permissions.
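Per-project, per-resource RBAC amounts to a deny-by-default check in which a grant at either the project level or the resource level is enough. A minimal sketch, with hypothetical role names, actions, users, and projects (Saturn's actual roles are not listed here):

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical roles mapped to the actions they allow.
ROLE_ACTIONS: dict[str, frozenset[str]] = {
    "viewer": frozenset({"view"}),
    "member": frozenset({"view", "start", "stop"}),
    "admin": frozenset({"view", "start", "stop", "edit", "delete"}),
}


@dataclass
class Policy:
    # (user, project) -> role granted on every resource in that project
    project_roles: dict[tuple[str, str], str] = field(default_factory=dict)
    # (user, project, resource) -> role granted on one resource only
    resource_roles: dict[tuple[str, str, str], str] = field(default_factory=dict)

    def allowed(self, user: str, project: str, resource: str, action: str) -> bool:
        """Deny by default; a grant at either level is enough."""
        roles = [
            self.project_roles.get((user, project)),
            self.resource_roles.get((user, project, resource)),
        ]
        return any(action in ROLE_ACTIONS.get(r, frozenset()) for r in roles if r)


if __name__ == "__main__":
    policy = Policy(
        project_roles={("alice", "vision"): "member"},
        resource_roles={("bob", "vision", "llm-api"): "viewer"},
    )
    print(policy.allowed("alice", "vision", "train-job", "start"))  # True
    print(policy.allowed("bob", "vision", "train-job", "view"))     # False
```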

Operations and Cost Controls

Compute is organized into separate CPU and GPU pools with autoscaling applied to the pools you choose. Projects can have quotas and instance guardrails to prevent overprovisioning. Workspaces support idle suspend to reduce waste, and jobs can be scheduled to run in defined windows. When your provider offers spot/preemptible nodes, you can add a dedicated pool and opt specific workloads into it.
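The guardrails above reduce to a few simple checks. A minimal sketch, assuming a per-project GPU quota, an idle timeout for Workspaces, and a daily run window for Jobs; the numbers are hypothetical, and Saturn's actual settings and enforcement points are not described here:

```python
from datetime import datetime, time, timedelta


def within_quota(requested_gpus: int, in_use_gpus: int, quota_gpus: int) -> bool:
    """Admit a request only if the project stays at or under its GPU quota."""
    return in_use_gpus + requested_gpus <= quota_gpus


def should_suspend(last_activity: datetime, now: datetime, idle_timeout: timedelta) -> bool:
    """Suspend a Workspace once it has been idle for at least `idle_timeout`."""
    return now - last_activity >= idle_timeout


def in_window(now: time, start: time, end: time) -> bool:
    """True if `now` falls in [start, end); windows may wrap past midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


if __name__ == "__main__":
    print(within_quota(requested_gpus=2, in_use_gpus=6, quota_gpus=8))  # True
    last = datetime(2025, 1, 1, 12, 0)
    print(should_suspend(last, last + timedelta(hours=1), timedelta(minutes=30)))  # True
    print(in_window(time(23, 30), start=time(22, 0), end=time(6, 0)))  # True
```

Handling windows that wrap past midnight matters for overnight training runs, which are a common way to use otherwise idle GPU capacity.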

Get Started

You can deploy Saturn Cloud into any Neocloud account in under an hour. We handle the heavy lifting, so your team can focus on building. Reach out via chat on Saturn Cloud to learn more.

About Saturn Cloud

Saturn Cloud is a portable AI platform that installs securely in any cloud account. Build, deploy, scale, and collaborate on AI/ML workloads, with no long-term contracts and no vendor lock-in.

Saturn Cloud provides customizable, ready-to-use cloud environments for collaborative data teams.

Try Saturn Cloud and join thousands of users moving to the cloud without having to switch tools.