Simple (and Ugly) Reporting with Jupyter Without Having to Learn Anything New

I’ve built a lot of dashboards in my life with Streamlit, Plotly Dash, Bokeh, Voila and Shiny. These tools all produce superior results, but I use them so infrequently that re-learning them each time adds significant friction. On the other hand, I use Jupyter notebooks at least weekly, and on a recent project I started thinking: this notebook is good enough. Can we just keep it up to date and host it somewhere I can point people to?

Ugly reporting with Jupyter without needing to learn new things

The short version is that Jupyter and Python give you everything you need to stand up some very ugly, crude, but completely useful reports. The approach is to:

- Schedule the execution of a notebook (cron is enough) and save the resulting HTML file to the filesystem.

- Run a service that serves static files to host that output.

Building your Jupyter notebook report

Building the notebook report is pretty easy. All you need is a notebook that displays the information you care about. There are two extra things you need to do. First, make sure the notebook can execute from top to bottom without errors. Second, make sure the notebook picks up the “latest” data every time it is re-run, where “latest” means whatever you care about in terms of keeping the report “up to date”.
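One way to satisfy the second requirement is to compute the reporting window from the current time at the top of the notebook, rather than hard-coding dates. A minimal sketch, assuming a hypothetical `report_window` helper and an illustrative 30-day lookback (neither comes from the original notebook):

```python
from datetime import datetime, timedelta, timezone


def report_window(now=None, lookback_days=30):
    """Return (start, end) datetimes for the report, ending at `now`.

    `lookback_days` is an illustrative default, not a value from the original report.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now - timedelta(days=lookback_days), now


# Hypothetical usage in the first cell of the notebook:
start, end = report_window()
print(f"Report covers {start:%Y-%m-%d} to {end:%Y-%m-%d}")
```

Every query in the notebook can then filter on `start` and `end`, so each scheduled run moves the window forward without any edits.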

Executing the report

Once you have the notebook, scheduling the execution is very easy.

jupyter nbconvert path-to-notebook.ipynb --execute --to html --output output-path.html

or, in my case specifically:

jupyter nbconvert saturn-operations/notebooks/cost-report.ipynb --execute --to html --output ~/shared/production/hosted-data-and-stats/reports/cost-report.ipynb.html

The --execute flag ensures that instead of just converting the notebook to html, all of the code cells are re-executed.
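If you would rather drive this from Python than from a shell script, the same command can be wrapped with `subprocess`. This is a sketch, not the setup used in this post: `build_nbconvert_command` and `run_report` are hypothetical helper names, and it assumes `jupyter` is on the `PATH`.

```python
import subprocess


def build_nbconvert_command(notebook_path, output_path):
    """Build the nbconvert invocation shown above as an argument list."""
    return [
        "jupyter", "nbconvert", str(notebook_path),
        "--execute",  # re-run every code cell before converting
        "--to", "html",
        "--output", str(output_path),
    ]


def run_report(notebook_path, output_path):
    """Execute the notebook and write the HTML; raises CalledProcessError on failure."""
    subprocess.run(build_nbconvert_command(notebook_path, output_path), check=True)
```

With `check=True`, a non-zero exit from nbconvert surfaces as a Python exception. Note that a `~` in a path is not expanded when there is no shell involved, so pass an absolute path or call `os.path.expanduser` first.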

Hosting the report

It turns out Python has all you need to host the report. If you execute python -m http.server in any directory, you get a simple static file server for the contents of that directory, listening on port 8000 by default. Now anyone with a web browser who can reach that machine can view and download your ugly Jupyter HTML reports.

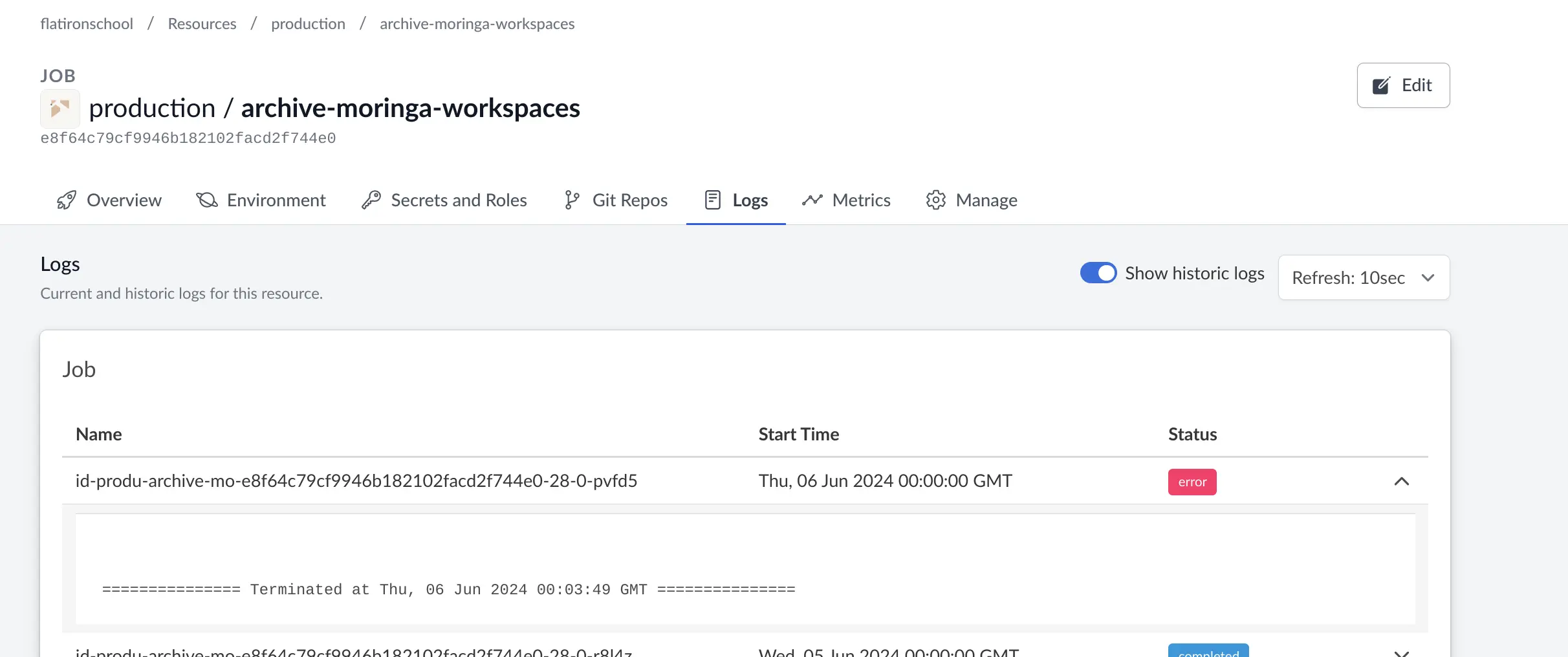
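The same server can also be started from Python, which is handy if you want to pin the directory and port explicitly. A minimal sketch using only the standard library; `make_report_server` is a hypothetical helper, and binding to `127.0.0.1` is a choice made for this example (use `0.0.0.0` to accept traffic from other machines):

```python
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


def make_report_server(directory, host="127.0.0.1", port=8000):
    """Create a static file server rooted at `directory` (8000 is http.server's default port)."""
    handler = partial(SimpleHTTPRequestHandler, directory=str(directory))
    return ThreadingHTTPServer((host, port), handler)


# Hypothetical usage:
# server = make_report_server("reports")
# server.serve_forever()
```

Like the command-line version, this serves whatever is in the directory with no authentication, so it is only appropriate on a network you trust.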

Doing this in Saturn Cloud

The above instructions only require a computer that can serve traffic for your company, but they don’t cover anything about security or access control. At Saturn Cloud, of course, we do everything with Saturn Cloud, so we’re definitely going to cover how we host these at our company.

Building the Jupyter notebook report on Saturn Cloud

The following recipe (redacted) demonstrates how to build out reporting infrastructure on Saturn Cloud.

type: job
spec:
  name: cost-report-job
  owner: production
  description: ''
  image: hugo/operations:2024.03.07
  instance_type: xlarge
  environment_variables:
    PYTHONPATH: /home/jovyan/workspace/saturn-operations
    DASHBOARD_DATA_PATH: /home/jovyan/shared/production/hosted-data-and-stats/monitoring-dump
  working_directory: /home/jovyan/workspace/saturn-operations/scripts
  extra_packages:
    pip:
      install: saturn-client saturnfs fastapi[all] papermill
  start_script: ''
  git_repositories:
    - url: git@github.com:saturncloud/saturn-operations.git
      path: /home/jovyan/workspace/saturn-operations
      public: false
      on_restart: reclone
      reference: null
      reference_type: branch
  secrets:
    ...
  shared_folders:
    - owner: production
      path: /home/jovyan/shared/production/hosted-data-and-stats
      name: hosted-data-and-stats
  command: bash cost-report.sh
  scale: 1
  use_spot_instance: false
  schedule: 0 0,6,12,18 * * *
  concurrency_policy: Allow
  retries: 0

In plain terms: on the cron schedule 0 0,6,12,18 * * * (every six hours, on the hour), Saturn Cloud starts a container from the hugo/operations:2024.03.07 Docker image, installs the pip extra_packages (saturn-client saturnfs fastapi[all] papermill), clones the saturn-operations.git repository listed under git_repositories, and mounts the NFS shared_folders into the container. It then runs the command bash cost-report.sh from the working_directory /home/jovyan/workspace/saturn-operations/scripts, which is inside the git repository. cost-report.sh executes a few notebooks and writes the resulting HTML files to NFS.
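To make the schedule concrete: the cron expression 0 0,6,12,18 * * * fires at minute 0 of hours 0, 6, 12 and 18, every day. The sketch below computes the next run time for that specific expression. `next_run` is a hypothetical helper and deliberately not a general cron parser. The time zone the scheduler uses is not stated here, so the example simply works in whatever zone the input datetime is in.

```python
from datetime import datetime, timedelta

RUN_HOURS = (0, 6, 12, 18)  # the hour field of "0 0,6,12,18 * * *"


def next_run(now):
    """Return the first scheduled time strictly after `now`."""
    for hour in RUN_HOURS:
        candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
        if candidate > now:
            return candidate
    # Past 18:00, so the next run is midnight of the following day.
    tomorrow = now + timedelta(days=1)
    return tomorrow.replace(hour=0, minute=0, second=0, microsecond=0)


print(next_run(datetime(2024, 3, 7, 13, 30)))  # 2024-03-07 18:00:00
```

In other words, a report viewed at any moment is at most six hours old, plus however long the notebooks take to run.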

Hosting the report

A Saturn Cloud Deployment can be used to actually serve the files.

type: deployment
spec:
  name: report-server
  owner: production
  description: ''
  image: saturncloud/saturn-python:2023.09.01
  instance_type: medium
  environment_variables: {}
  working_directory: /home/jovyan/shared/production/hosted-data-and-stats/
  start_script: ''
  git_repositories: []
  secrets: []
  shared_folders:
    - owner: production
      path: /home/jovyan/shared/production/hosted-data-and-stats
      name: hosted-data-and-stats
  start_dind: false
  command: python -m http.server
  scale: 1
  start_ssh: false
  use_spot_instance: false
  routes:
    - subdomain: reports
      container_port: 8000
      visibility: owner
  viewers: []
state:
  id: 3f6f92a172f245f7a262c4377b919643
  status: running

In plain terms: start a container from the saturncloud/saturn-python:2023.09.01 Docker image, mount the NFS shared_folders into the container, run the command python -m http.server from the working_directory (the shared folder), and expose container_port 8000, the default port for http.server, on the reports subdomain.

This service hosts all files on the NFS volume, which the scheduled job above keeps regenerating. Since it is a deployment running in Saturn Cloud, you can use the networking tab to restrict which users or groups at your company can access the reports.

Conclusion

Reports don’t have to be complicated. This setup has been very robust and useful for simple reports we want to generate quickly that don’t need to be interactive. In a future post (or revision) we’ll go over how to augment your reports with papermill instead of just plain nbconvert.

About Saturn Cloud

Saturn Cloud is a portable AI platform that installs securely in any cloud account. Build, deploy, scale and collaborate on AI/ML workloads, with no long-term contracts and no vendor lock-in.
