How to Write Large Pandas Dataframes to CSV File in Chunks

As a data scientist, one of the most common tasks you will encounter is working with large datasets. These datasets can be too large to fit into memory, making it difficult to perform certain operations. One such operation is writing a large Pandas dataframe to a CSV file. In this article, we will explore how to write large Pandas dataframes to a CSV file in chunks.

Table of Contents

- Why Write Large Pandas Dataframes to CSV File in Chunks?

- How to Write Large Pandas Dataframes to CSV File in Chunks

- Common Errors and How to Handle Them

- Conclusion

Why Write Large Pandas Dataframes to CSV File in Chunks?

Writing a large Pandas dataframe to a CSV file in a single operation can be memory-intensive and slow. If the data is too large to fit into memory, loading or converting all of it at once can raise a MemoryError or crash the program. Writing the data in chunks helps with both problems. When the data is broken into smaller pieces, the file is written one segment at a time, which keeps peak memory usage lower and helps avoid memory errors.

How to Write Large Pandas Dataframes to CSV File in Chunks

Writing a large Pandas dataframe to a CSV file in chunks requires a few steps. Let’s take a look at how it can be done.

Step 1: Import the necessary libraries

First, we need to import the necessary libraries. We will be using Pandas and NumPy.

```python
import pandas as pd
import numpy as np
```

Step 2: Load the dataframe

Next, we need to load the dataframe that we want to write to a CSV file. For this example, we will be using a sample dataframe with 1 million rows and 10 columns.

```python
large_dataframe = pd.read_csv('large_dataframe.csv')
```
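The file large_dataframe.csv is not provided with the article. If you want something to experiment with, the sketch below builds a synthetic dataframe of the same shape (1 million rows, 10 columns) directly in memory. The column names col_0 to col_9 and the random float values are assumptions for illustration, not the original data.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for large_dataframe.csv: 1 million rows x 10 float columns
rng = np.random.default_rng(seed=0)  # fixed seed so the data is reproducible
n_rows, n_cols = 1_000_000, 10
large_dataframe = pd.DataFrame(
    rng.random((n_rows, n_cols)),
    columns=[f"col_{j}" for j in range(n_cols)],
)

print(large_dataframe.shape)  # (1000000, 10)
# Approximate in-memory size in MB (10 float64 columns is about 80 MB of values)
print(large_dataframe.memory_usage(deep=True).sum() / 1e6)
```

Calling large_dataframe.to_csv('large_dataframe.csv', index=False) once would produce a file that the read_csv call above can load.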

Step 3: Define the chunk size

We need to define the chunk size that we want to use when writing the dataframe to a CSV file. The chunk size will determine how many rows we will write to the file at a time. A smaller chunk size will use less memory but may take longer to write the file, while a larger chunk size will use more memory but may write the file faster.

```python
chunk_size = 10000
```
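To make the trade-off concrete: the number of chunks is the row count divided by the chunk size, rounded up, and only the last chunk can be smaller than chunk_size. The helper chunk_ranges below is hypothetical and only shows that arithmetic. It does not touch any data.

```python
def chunk_ranges(n_rows: int, chunk_size: int) -> list[tuple[int, int]]:
    """Return half-open [start, stop) row ranges that cover n_rows rows."""
    return [(start, min(start + chunk_size, n_rows)) for start in range(0, n_rows, chunk_size)]


# 1,000,000 rows with chunk_size = 10,000 gives exactly 100 full chunks
print(len(chunk_ranges(1_000_000, 10_000)))  # 100
# One extra row needs a 101st chunk holding just that row
print(chunk_ranges(1_000_001, 10_000)[-1])   # (1000000, 1000001)
```

Halving chunk_size doubles the number of chunks (and write calls), while each chunk holds half as many rows in memory.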

Step 4: Write the dataframe to the CSV file in chunks

Finally, we can write the dataframe to CSV in chunks. We use NumPy's np.array_split() to divide the dataframe into pieces of roughly chunk_size rows each, and then write each piece to its own CSV file with the dataframe's to_csv() method.

# 5.3 Writing Data to CSV in Chunks

chunk_size = 50000

num_chunks = len(large_dataframe) // chunk_size + 1

for i, chunk in enumerate(np.array_split(large_dataframe, num_chunks)):

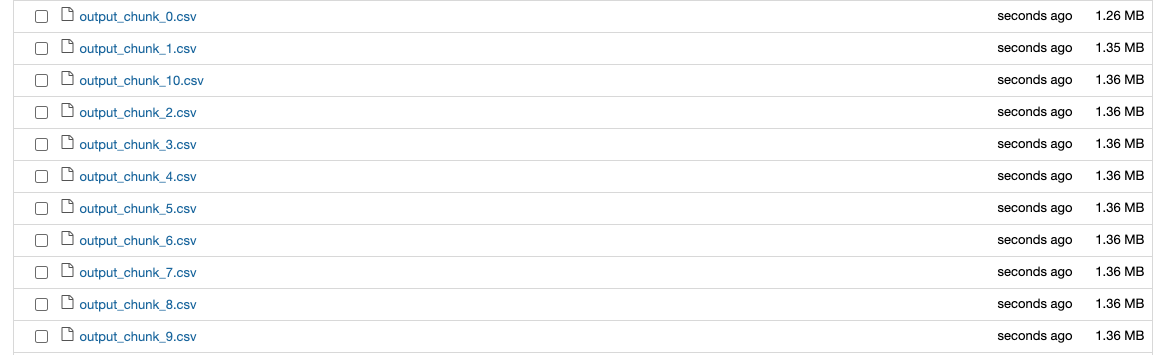

chunk.to_csv(f"output_chunk_{i}.csv", index=False)

In the code above, np.array_split divides the dataframe into num_chunks pieces of nearly equal size, each with at most chunk_size rows. The enumerate function yields both the index (i) and the chunk (chunk). Each chunk is written to a CSV file named output_chunk_{i}.csv, where i is the index of the chunk, so the result is a set of numbered files rather than a single CSV file. The index=False parameter keeps the row indices out of the output.
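The loop above writes one CSV file per chunk. If you would rather end up with a single CSV file, one option is to slice the dataframe by position with iloc and append each slice to the same file, writing the header only for the first chunk. This approach also avoids calling np.array_split on a DataFrame, which can emit a FutureWarning about DataFrame.swapaxes on some recent pandas versions. The function name write_csv_in_chunks and the demo data below are hypothetical; this is a minimal sketch, not the article's original method.

```python
import tempfile
from pathlib import Path

import numpy as np
import pandas as pd


def write_csv_in_chunks(df: pd.DataFrame, path, chunk_size: int = 10_000) -> int:
    """Write df to one CSV file, chunk_size rows at a time; return the number of chunks."""
    n_chunks = 0
    for start in range(0, len(df), chunk_size):
        # Positional slice of at most chunk_size rows
        chunk = df.iloc[start:start + chunk_size]
        chunk.to_csv(
            path,
            mode="w" if start == 0 else "a",  # overwrite on the first chunk, append afterwards
            header=(start == 0),              # write the column names only once
            index=False,
        )
        n_chunks += 1
    return n_chunks


# Usage with a small, hypothetical dataframe and a temporary output folder
df = pd.DataFrame({"a": np.arange(25), "b": np.arange(25) * 0.5})
out_path = Path(tempfile.mkdtemp()) / "output.csv"
print(write_csv_in_chunks(df, out_path, chunk_size=10))  # 3
print(pd.read_csv(out_path).shape)                       # (25, 2)
```

For comparison, DataFrame.to_csv also accepts a chunksize argument (the number of rows to write at a time), which may be enough when the dataframe already fits in memory and one output file is the goal.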

Step 5: Check the written CSV files

Once the loop has finished, the numbered output files (output_chunk_0.csv, output_chunk_1.csv, and so on) can be checked in the working directory.
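A quick programmatic check is to count the rows in each chunk file and compare the total with the length of the original dataframe. So that it runs on its own, the sketch below first recreates a few small chunk files in a temporary folder. The 25-row demo frame and the chunk size of 10 are hypothetical.

```python
import tempfile
from pathlib import Path

import pandas as pd

# Demo setup (hypothetical): write 25 rows as three chunk files into a temporary folder
out_dir = Path(tempfile.mkdtemp())
demo = pd.DataFrame({"x": range(25)})
for i, start in enumerate(range(0, len(demo), 10)):
    demo.iloc[start:start + 10].to_csv(out_dir / f"output_chunk_{i}.csv", index=False)

# Check: list the chunk files and count the data rows in each one
files = sorted(out_dir.glob("output_chunk_*.csv"))
row_counts = {f.name: len(pd.read_csv(f)) for f in files}
print(row_counts)  # {'output_chunk_0.csv': 10, 'output_chunk_1.csv': 10, 'output_chunk_2.csv': 5}

total = sum(row_counts.values())
assert total == len(demo), f"expected {len(demo)} rows, found {total}"
```

Each file is read separately, so at most one chunk is held in memory at a time during the check.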

Common Errors and How to Handle Them

Memory Overflow

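If you still run out of memory, reduce the chunk size. If the source data itself does not fit into memory, avoid loading it in one go: read it in chunks as well (for example with the chunksize parameter of pd.read_csv) and write each chunk as soon as it has been read.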
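A minimal sketch of that streaming pattern is shown below, under the assumption that the source is a CSV file. pd.read_csv with chunksize returns an iterator of DataFrames, so only about one chunk of rows is in memory at a time, and each chunk is appended to the output file. The function name stream_csv and the demo files are hypothetical.

```python
import tempfile
from pathlib import Path

import pandas as pd


def stream_csv(src, dst, chunk_size: int = 10_000) -> int:
    """Copy src to dst one chunk at a time; return the number of rows written."""
    rows_written = 0
    # With chunksize, read_csv yields DataFrames of up to chunk_size rows instead of one big frame
    with pd.read_csv(src, chunksize=chunk_size) as reader:
        for i, chunk in enumerate(reader):
            # Any per-chunk transformation would go here
            chunk.to_csv(dst, mode="w" if i == 0 else "a", header=(i == 0), index=False)
            rows_written += len(chunk)
    return rows_written


# Demo (hypothetical data): create a small source file, then stream it in chunks of 10 rows
tmp = Path(tempfile.mkdtemp())
src, dst = tmp / "large_dataframe.csv", tmp / "copy.csv"
pd.DataFrame({"a": range(25), "b": [f"row{k}" for k in range(25)]}).to_csv(src, index=False)
print(stream_csv(src, dst, chunk_size=10))  # 25
```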

Chunk Size Selection

Choosing an appropriate chunk size is crucial. Chunks that are too small add I/O overhead (many small writes or many small files), while chunks that are too large can still lead to memory issues.
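One rough heuristic, which is an assumption rather than a rule, is to measure memory per row on a sample and pick the number of rows that fits a memory budget you choose. The sketch below uses DataFrame.memory_usage(deep=True) for the measurement. The helper estimate_chunk_size and the 100 MB default target are hypothetical.

```python
import numpy as np
import pandas as pd


def estimate_chunk_size(sample: pd.DataFrame, target_mb: float = 100.0) -> int:
    """Rows per chunk so that one chunk takes roughly target_mb of memory (a heuristic)."""
    # Average bytes per row, including object/string contents thanks to deep=True
    bytes_per_row = sample.memory_usage(deep=True, index=True).sum() / max(len(sample), 1)
    return max(1, int(target_mb * 1e6 // bytes_per_row))


# Hypothetical sample: 1,000 rows x 10 float64 columns (about 80 bytes per row)
sample = pd.DataFrame(np.random.default_rng(0).random((1_000, 10)))
print(estimate_chunk_size(sample, target_mb=100))  # roughly 1.25 million rows
```

The estimate ignores temporary copies pandas may make while formatting CSV text, so leaving some headroom below the available memory is prudent.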

Data Integrity

Ensure that the chunks are written and concatenated in the correct order to maintain data integrity.
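A common ordering pitfall: sorting file names as plain strings puts output_chunk_10.csv before output_chunk_2.csv. The sketch below sorts the chunk files by their numeric index before concatenating them, then compares the result with the original. The helper chunk_index and the 120-row demo frame are hypothetical. Rebuilding the full frame in memory is only sensible for verification on data that fits; for larger data, the same ordering can drive appends to a single output file.

```python
import re
import tempfile
from pathlib import Path

import pandas as pd


def chunk_index(path: Path) -> int:
    """Extract i from a file name of the form output_chunk_{i}.csv."""
    match = re.search(r"output_chunk_(\d+)\.csv$", path.name)
    return int(match.group(1))


# Demo setup (hypothetical): 12 chunk files, enough for string sorting to go wrong
out_dir = Path(tempfile.mkdtemp())
original = pd.DataFrame({"x": range(120)})
for i, start in enumerate(range(0, len(original), 10)):
    original.iloc[start:start + 10].to_csv(out_dir / f"output_chunk_{i}.csv", index=False)

# Sort on the numeric chunk index rather than the file name string
files = sorted(out_dir.glob("output_chunk_*.csv"), key=chunk_index)
rebuilt = pd.concat((pd.read_csv(f) for f in files), ignore_index=True)

print(rebuilt.equals(original))  # True
```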

Conclusion

In this article, we explored how to write large Pandas dataframes to CSV files in chunks. Breaking the data into smaller pieces and writing them one segment at a time lowers peak memory usage and helps avoid memory errors. Overall, this is a useful technique to keep in mind when working with large datasets.

About Saturn Cloud

Saturn Cloud is a portable AI platform that installs securely in any cloud account. Build, deploy, scale and collaborate on AI/ML workloads: no long-term contracts, no vendor lock-in.

Saturn Cloud provides customizable, ready-to-use cloud environments for collaborative data teams.

Try Saturn Cloud and join thousands of users moving to the cloud without having to switch tools.