How to Use AWS SageMaker on GPU to Accelerate Your Machine Learning Workloads

As a data scientist or software engineer, you understand the importance of running machine learning workloads on powerful hardware to achieve optimal performance. Amazon Web Services (AWS) SageMaker is a cloud-based machine learning platform that provides a range of features to help you build, train, and deploy your models at scale. In this article, we will explore how to use AWS SageMaker on GPU to accelerate your machine learning workloads.

Table of Contents

- What is AWS SageMaker?

- Why Use AWS SageMaker on GPU?

- How to Use AWS SageMaker on GPU

- Best Practices

- Conclusion

What is AWS SageMaker?

AWS SageMaker is a fully managed service that makes it easy to build, train, and deploy machine learning models at scale. It provides a range of tools and services to help data scientists and developers build, train, and deploy machine learning models quickly and easily. With SageMaker, you can choose from a range of pre-built machine learning algorithms, or you can bring your own algorithms and frameworks.

Why Use AWS SageMaker on GPU?

GPU (graphics processing unit) acceleration is a powerful technology that can significantly speed up machine learning workloads. GPUs are designed to handle large amounts of parallel processing, making them ideal for machine learning tasks that involve complex calculations and large datasets.

When you use AWS SageMaker on GPU, you can take advantage of the power of GPU acceleration to train your machine learning models faster and more efficiently. This means that you can get your models into production faster, and you can iterate on your models more quickly.

How to Use AWS SageMaker on GPU

Using AWS SageMaker on GPU is easy. Here are the steps you need to follow:

Step 1: Set Up Your AWS Account

To use AWS SageMaker, you will need an AWS account. If you do not already have an AWS account, you can sign up for one at aws.amazon.com.

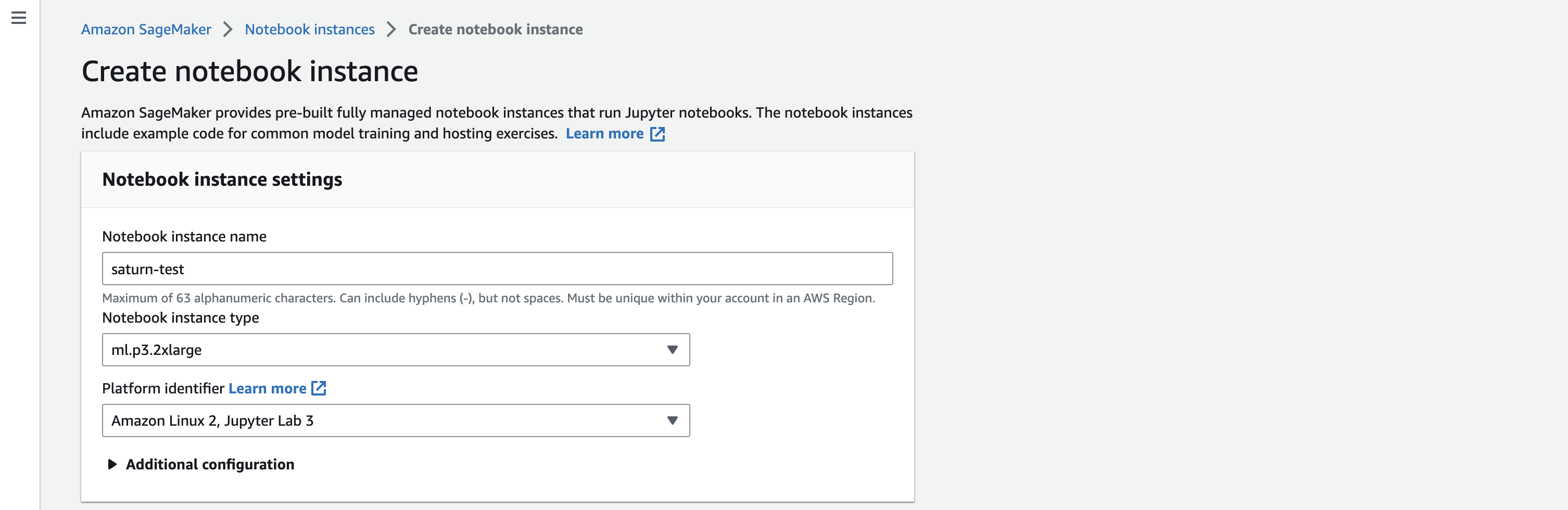

Step 2: Launch an AWS SageMaker Notebook Instance

Once you have set up your AWS account, you can launch an AWS SageMaker notebook instance. A notebook instance is a fully managed service that provides a Jupyter notebook environment for data scientists and developers to build, train, and deploy machine learning models.

To launch an AWS SageMaker notebook instance, follow these steps:

- Open the AWS Management Console and navigate to the SageMaker dashboard.

- Click on the “Notebook instances” tab.

- Click the “Create notebook instance” button.

- Enter a name for your notebook instance.

- Choose an instance type that supports GPU acceleration. SageMaker provides a range of GPU-enabled instances to choose from, including p3 instances and g4 instances.

- Choose the IAM role that provides permissions for your notebook instance.

- Click the “Create notebook instance” button.

Step 3: Train Your Machine Learning Model

Once you have launched your AWS SageMaker notebook instance, you can start building and training your machine learning model. Here are the steps you need to follow:

- Launch a Jupyter notebook on your notebook instance.

- Import the necessary libraries and datasets.

- Define your machine learning model architecture.

- Compile and train your model using TensorFlow or another machine learning framework.

- Monitor your model’s performance and make adjustments as needed.

When training your machine learning model, be sure to take advantage of the power of GPU acceleration. You can do this by configuring your machine learning framework to use the GPU for calculations.

Step 4: Deploy Your Machine Learning Model

Once you have trained your machine learning model, you can deploy it using AWS SageMaker. Here are the steps you need to follow:

- Export your trained model to a format that can be deployed on AWS SageMaker, such as a TensorFlow SavedModel.

- Create an inference pipeline that defines how your model will be used to make predictions.

- Deploy your model to an endpoint using the SageMaker API.

- Test your model’s predictions to ensure that it is working correctly.

Best Practices

Choose the Right Instance Type: When launching an AWS SageMaker notebook instance, carefully select the instance type that aligns with your machine learning workload requirements. SageMaker offers various GPU-enabled instances like p3 and g4 instances. Consider factors such as available memory, processing power, and cost implications.

Optimize Data Loading and Preprocessing: Efficient data loading and preprocessing can have a significant impact on GPU utilization. Optimize your data pipelines to ensure that data is fed to the GPU efficiently. Utilize parallel processing capabilities to enhance data throughput and minimize idle GPU time.

Utilize GPU-Accelerated Libraries: Leverage GPU-optimized libraries and frameworks such as TensorFlow and PyTorch. These libraries are designed to take advantage of GPU capabilities and can significantly improve the performance of machine learning tasks.

Implement Batch Processing: Batch processing allows you to make the most out of GPU parallelism. Instead of processing data point by point, consider batching multiple data points together. This can enhance the efficiency of GPU utilization and speed up model training.

Monitor and Optimize GPU Utilization: Regularly monitor GPU utilization during model training. Tools like AWS CloudWatch can provide insights into GPU metrics. Optimize hyperparameters and model architecture to ensure that the GPU is effectively utilized without bottlenecks.

Use Spot Instances for Cost Optimization: Consider using AWS Spot Instances for training your machine learning models on SageMaker. Spot Instances can significantly reduce costs compared to on-demand instances. However, be aware of the possibility of interruptions and design your workflow to handle them gracefully.

Implement Model Checkpoints: When training large models, implement model checkpoints to save the model’s state at regular intervals. In case of interruptions or failures during training, checkpoints allow you to resume from the last saved state, saving time and resources.

Scale Resources Based on Workload: Dynamically scale your GPU resources based on the workload. Utilize SageMaker’s capability to easily scale up or down based on the computational requirements of your machine learning tasks. This ensures optimal resource allocation and cost efficiency.

Secure Model Deployments: When deploying your machine learning model using AWS SageMaker, ensure that you implement security best practices. Use encryption for data in transit and at rest, configure access controls, and regularly update IAM roles to follow the principle of least privilege.

Regularly Update SageMaker and Framework Versions: Stay updated with the latest versions of AWS SageMaker and the machine learning frameworks you use. Regular updates often include performance improvements, bug fixes, and new features that can enhance the efficiency of GPU-accelerated workflows.

Conclusion

Using AWS SageMaker on GPU is a powerful way to accelerate your machine learning workloads. With SageMaker, you can take advantage of the latest GPU technology to train your models faster and more efficiently. By following the steps outlined in this article, you can quickly and easily set up an AWS SageMaker notebook instance, train your machine learning model, and deploy it to production. With SageMaker, you can focus on building great machine learning models, without worrying about the underlying infrastructure.

About Saturn Cloud

Saturn Cloud is your all-in-one solution for data science & ML development, deployment, and data pipelines in the cloud. Spin up a notebook with 4TB of RAM, add a GPU, connect to a distributed cluster of workers, and more. Request a demo today to learn more.

Saturn Cloud provides customizable, ready-to-use cloud environments for collaborative data teams.

Try Saturn Cloud and join thousands of users moving to the cloud without

having to switch tools.