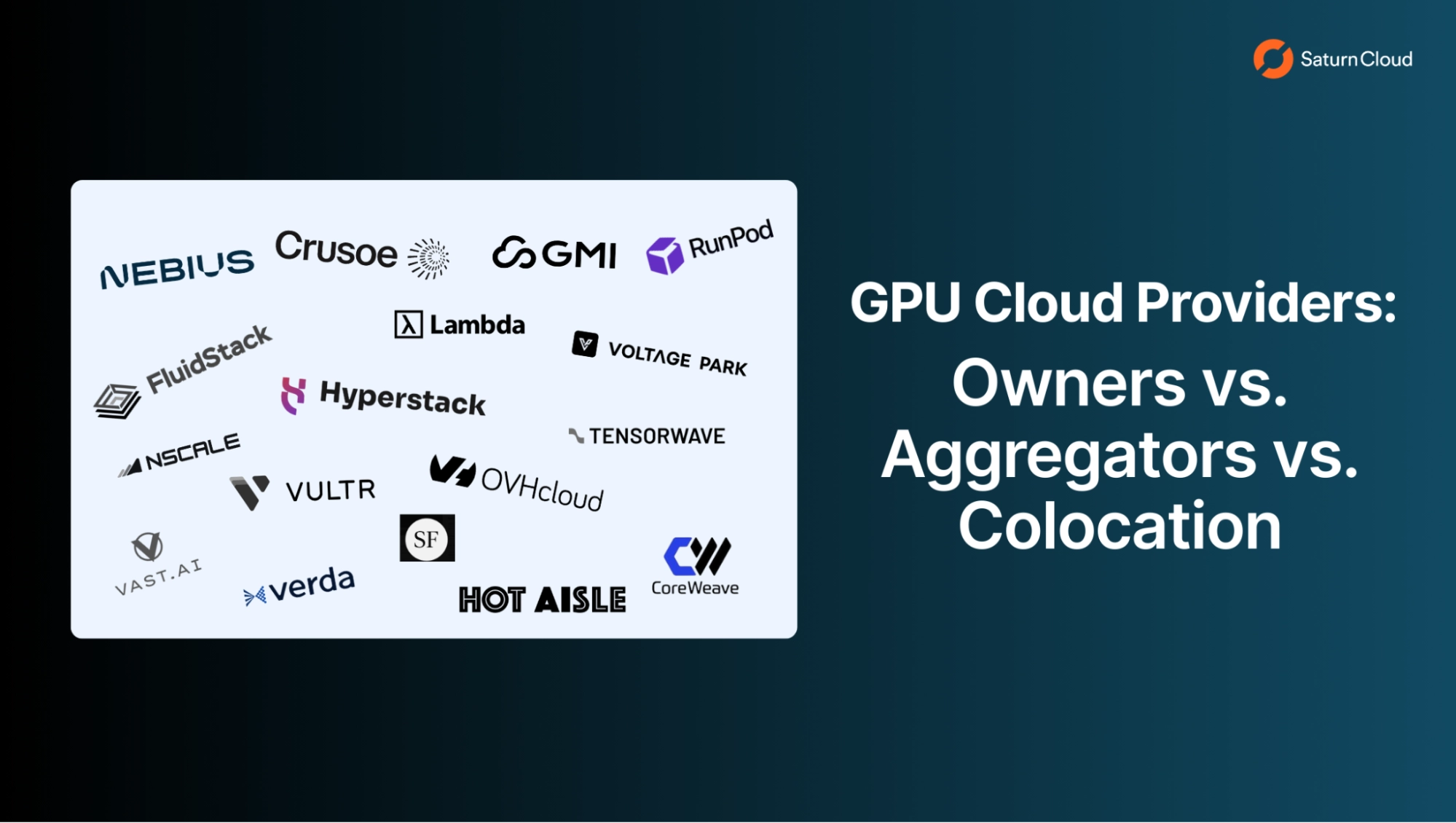

GPU Cloud Providers: Owners vs. Aggregators vs. Colocation

For AI teams scaling their models, the biggest variable isn’t the GPU; it’s the infrastructure underneath it. Not all GPU cloud providers are built the same, and understanding a provider’s infrastructure ownership model is crucial for assessing pricing stability, capacity consistency, and support reliability for your production workloads.

GPU cloud providers fall into three categories based on how they source and control their infrastructure.

1. Owners

These providers build and operate their own data centers, own their GPU hardware, and control the whole stack. This vertical integration enables them to offer consistent Service Level Agreements (SLAs), predictable pricing, and direct accountability when issues arise.

| Provider | Notes |
|---|---|
| Crusoe | Vertically integrated; manufactures its own modular data centers and controls everything from energy sourcing through GPU deployment |
| CoreWeave | Acquired NEST DC ($322M); 250K+ GPUs across 32+ data centers |
| Nebius | Owns data centers in Finland; colocation in the US and other regions; full control over security and environment in owned facilities |
| OVHcloud | Fully vertically integrated; designs and manufactures its own servers; builds and manages its own data centers |
| Nscale | Owns data centers in Norway |

2. Hardware Owners (Colo)

These providers own their GPU hardware but rent space (colocation) in third-party data centers. They control the compute layer and the hardware supply but depend on partners for power, cooling, and physical security. This is a hybrid model that balances control with faster geographic expansion.

| Provider | Notes |
|---|---|
| Lambda | Owns GPU hardware; colocation in SF and Texas |
| Voltage Park | Owns H100 hardware; colocation in Texas, Virginia, and Washington |
| Hot Aisle | Owns AMD GPUs; colocation at Switch Pyramid Tier 5 in Michigan |
| Hyperstack | Owns GPU hardware; colocation partnerships |
| GMI Cloud | Owns GPU hardware; offshoot of Realtek/GMI Technology |
| TensorWave | Owns AMD GPU hardware; colocation across US data centers |

3. Aggregators

These providers operate marketplaces that connect you with third-party GPUs, supplied by anyone from established data centers to individual hosts. They monetize idle or excess capacity, which often makes them the lowest-cost way to access GPUs, but quality, availability, and support vary significantly from host to host.

| Provider | Notes |
|---|---|
| Vast.ai | Pure marketplace; 10K+ GPUs from individuals to data centers |
| SF Compute | Two-sided marketplace connecting buyers with capacity from GPU cloud providers |
| RunPod | Hybrid model; “Secure Cloud” uses Tier 3/4 partners, “Community Cloud” aggregates third-party hosts |
| FluidStack | Mix of owned infrastructure and marketplace aggregation |

Why the Ownership Model Matters

For mission-critical AI workloads, the infrastructure model directly translates to your operational stability.

Pricing stability. Owners control their cost structure. When you’re locked into a multi-month training run, you don’t want your provider’s pricing to shift because their upstream supplier raised rates.

Support accountability. When something breaks at 2 a.m., owners can dispatch their own technicians. Aggregators may need to coordinate across multiple parties before anyone touches your hardware.

Consistent SLAs. Owners can guarantee uptime because they control every layer. Aggregators inherit the reliability (or lack thereof) of their suppliers.

Capacity planning. Owners know exactly what hardware they have and what’s coming. They can make real commitments about future availability. Aggregators are constrained by what their marketplace offers.

The Vertical Integration Advantage

Among owners, there’s a further distinction: how much of the stack do they actually control?

Crusoe takes vertical integration further than most, controlling energy sourcing, data center design and construction, and GPU deployment. This means faster buildout (Crusoe brought its Abilene facility online in under a year), more predictable costs (no middleman markup on power), and infrastructure purpose-built for AI workloads rather than general-purpose data centers retrofitted for AI.

CoreWeave and Nebius also own significant infrastructure, though they rely more heavily on colocation partnerships for geographic expansion. CoreWeave, for instance, focuses its integration on custom high-density liquid-cooled systems to optimize the compute environment.

When Aggregators Make Sense

Aggregator marketplaces like Vast.ai and SF Compute aren’t inherently bad; they serve a purpose. If you’re running short experiments, testing GPU configurations, or need burst capacity for a few days, the lower prices and instant availability can outweigh the inconsistency.

But for production training runs, fine-tuning pipelines, or inference serving with uptime requirements, the ownership model matters. You’re not just renting GPUs; you’re relying on someone else’s infrastructure to keep your business running.

Questions to Ask Your Provider

Before signing a contract, ask:

- Do you own your GPUs, or are you reselling capacity?

- Do you own or lease your data center space?

- Who responds when hardware fails: your team or a third party?

- How do you handle capacity constraints? Can you guarantee availability for my contract term?

The answers tell you whether you’re buying infrastructure or renting access to someone else’s.
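As a rough illustration, the first two questions are enough to place a provider in one of the three categories above. The sketch below is a minimal, hypothetical helper, not any provider’s API: the names `OwnershipModel`, `ProviderAnswers`, and `classify` are made up for this example, and it assumes each question gets a single yes/no answer.

```python
from dataclasses import dataclass
from enum import Enum


class OwnershipModel(Enum):
    """The three categories described in this post."""
    OWNER = "Owner"
    COLO = "Hardware Owner (Colo)"
    AGGREGATOR = "Aggregator"


@dataclass
class ProviderAnswers:
    """Hypothetical record of a provider's answers to the first two questions."""
    owns_gpus: bool          # "Do you own your GPUs, or are you reselling capacity?"
    owns_data_centers: bool  # "Do you own or lease your data center space?"


def classify(answers: ProviderAnswers) -> OwnershipModel:
    """Map yes/no answers to an ownership model.

    Simplification: assumes one answer per question for the whole provider.
    """
    if not answers.owns_gpus:
        # Reselling someone else's capacity is the aggregator model,
        # regardless of who owns the building.
        return OwnershipModel.AGGREGATOR
    if answers.owns_data_centers:
        # Owns both the hardware and the facility.
        return OwnershipModel.OWNER
    # Owns the hardware but rents space, power, and cooling (colocation).
    return OwnershipModel.COLO


# Example: a provider that owns its GPUs but colocates them.
print(classify(ProviderAnswers(owns_gpus=True, owns_data_centers=False)).value)
# prints "Hardware Owner (Colo)"
```

Hybrid providers such as Nebius, RunPod, or FluidStack may give different answers per region or product tier, so in practice it makes more sense to classify each offering you are considering rather than the provider as a whole.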

Frequently Asked Questions

1. What is the difference between a GPU owner and an aggregator?

A GPU owner (like CoreWeave or Crusoe) owns the physical H100 or A100 hardware and often the data center itself. They provide consistent performance and direct support. A GPU aggregator (like Vast.ai) is a marketplace that lists idle GPUs from various third-party hosts. Aggregators are usually cheaper but offer less control over hardware consistency and uptime.

2. Is it safe to use a GPU aggregator for AI training?

For experimental work or short-term projects, aggregators are excellent and cost-effective. However, for “mission-critical” training that takes weeks, owners are preferred. Aggregators often lack a unified SLA (Service Level Agreement), meaning if a host goes offline, your training run could be interrupted without a clear path to recovery.

3. What does “Colocation” (Colo) mean in a GPU cloud context?

Colocation means the provider owns the expensive GPU servers but rents space, power, and cooling from a specialized data center provider (like Equinix or Switch). This allows providers such as Lambda or Voltage Park to scale quickly across regions while maintaining full control over the underlying compute hardware.

4. Why is vertical integration important for AI startups?

Vertical integration (seen with providers like Crusoe or OVHcloud) means the provider controls everything from power generation to the server rack. This matters because AI workloads generate significant heat and require substantial power. A vertically integrated provider can often offer better pricing stability and purpose-built cooling solutions that prevent GPUs from “throttling” due to heat.

This post is part of our GPU cloud comparison series. For a comprehensive look at 17 providers across pricing, networking, storage, and platform capabilities, see the full comparison.

Saturn Cloud provides customizable, ready-to-use cloud environments for collaborative data teams.
