GitHub Actions + ECR + Optimizing Disk Space

Table of Contents

- Introduction

- GitHub Actions

- AWS ECR (Elastic Container Registry)

- How ECR (Elastic Container Registry) works

- Build a simple GitHub Actions workflow to build and push a Docker image to ECR (Elastic Container Registry)

- Handling or maximizing GitHub Actions runner resources

Introduction:

When building a data-intensive application, setting up a GitHub Actions workflow for it can be challenging, especially when the GitHub runner runs out of disk space.

One way to get more out of your runner’s storage is to use a pre-built runner cleaner or a self-hosted runner. In this article, we’ll cover the basics of GitHub Actions, build a Docker image and push it to AWS ECR (Elastic Container Registry), free up disk space on the GitHub runner, and set up a self-hosted runner, walking through each step along the way.

GitHub Actions:

GitHub Actions is a CI/CD/CT (continuous integration, continuous deployment and continuous testing) platform for automating the build, test and deployment lifecycle of your application. With GitHub Actions, you can build, test and deploy on every pull request or push to your GitHub repository. Automating these tasks saves time and effort, makes your code more reliable, and keeps processes consistent across your team or organization.

GitHub Actions integrates with AWS, Azure, GCP, Slack and many other services for monitoring, deploying, testing and running workflows. One of the main reasons people use GitHub Actions is its library of community-built and predefined actions, which can run on a GitHub-hosted runner or on a self-hosted runner (for example, a virtual machine you manage).

Imagine you are building a machine learning pipeline and want to automate testing and deployment for both development and production. With GitHub Actions, you can use predefined actions with AWS integration to test every pull request before merging, and deploy to an AWS EC2 instance after merging to a branch.

GitHub Actions has several components that are essential for setting up reliable and robust automation:

Runner: The runner is an essential component of your workflow. It is the machine or environment that executes every job and step in your workflow. A runner can be hosted on GitHub’s infrastructure (a GitHub-hosted runner), or you can set up a self-hosted runner on your preferred environment: Linux (for example Ubuntu), Windows or macOS.

In a nutshell, when a job is triggered, it is assigned to a runner, and the runner is responsible for executing the job and reporting its results.

Steps: Steps are a fundamental component of GitHub Actions workflows. A step is a single unit of work within a job. Each step executes a command or an action that performs a specific task, such as running a test or building and running a Docker image.

Jobs: A job is a set of steps in a workflow that run on the same runner. Each job typically covers a specific task or goal, such as testing your code, deploying to a production virtual machine or running a security scanner. A job can run sequentially or in parallel with other jobs in the workflow.

When you define a job, you specify a set of steps. Each step either runs a command or uses an action, for example sending a notification, authenticating to AWS on the runner, building and running a Docker container, or calling a pre-built action.
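To make the sequential-versus-parallel distinction concrete, here is a minimal sketch of a workflow with three jobs. The workflow name, the job names (test, lint, deploy) and the echo commands are placeholders chosen for illustration. Jobs without a `needs` key start in parallel; a job with `needs` waits for the listed jobs to succeed.

```yaml
name: Jobs example

on: push

jobs:
  # test and lint have no dependencies, so they start in parallel
  test:
    runs-on: ubuntu-latest
    steps:
      - run: echo "running tests"

  lint:
    runs-on: ubuntu-latest
    steps:
      - run: echo "running linter"

  # deploy waits until both test and lint have succeeded
  deploy:
    needs: [test, lint]
    runs-on: ubuntu-latest
    steps:
      - run: echo "deploying"
```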

Event Triggers: Event triggers launch a workflow when a certain event occurs, such as a pull request, a manual run, an issue comment and more. GitHub Actions lets you configure which of these events start your workflow.
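As a rough sketch, the events mentioned above map onto the `on:` key of a workflow file as shown here. The branch name master is taken from the examples in this article; which events you enable is up to you.

```yaml
on:
  # Run on pushes to the master branch
  push:
    branches: [master]
  # Run on pull requests that target master
  pull_request:
    branches: [master]
  # Run when a comment is created on an issue or pull request
  issue_comment:
    types: [created]
  # Allow the workflow to be started manually from the Actions tab
  workflow_dispatch:
```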

Secrets: Secrets are encrypted values that can be used in a workflow. They store sensitive information, such as passwords and credentials, and can be read inside a workflow. For example, if you want to set up a workflow that builds a Docker image and publishes it to AWS ECR (Elastic Container Registry), you should store your AWS access key and secret access key as secrets to prevent unauthorized access.
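A minimal sketch of how a step might read a secret follows. The secret name API_TOKEN is hypothetical; it stands for any secret you have created in your repository settings.

```yaml
steps:
  - name: Use a secret in a script
    env:
      # API_TOKEN is a hypothetical secret name used for illustration
      API_TOKEN: ${{ secrets.API_TOKEN }}
    run: |
      # The value is available to the script, and GitHub masks it in the logs.
      # The step fails if the secret is empty or missing.
      test -n "$API_TOKEN" && echo "API_TOKEN is set"
```

Passing the secret through `env` rather than interpolating it directly into the script keeps the value out of the command text.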

Artifacts: Artifacts are files generated by a job that can be used in subsequent steps or jobs. Artifacts can be uploaded to GitHub or to an external storage provider. Examples of artifacts include compiled binaries, log files and test results.
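The sketch below shows one way to pass a file between jobs using the official upload-artifact and download-artifact actions. The workflow name, job names, the artifact name test-results and the reports/ directory are all assumptions made for this example.

```yaml
name: Artifacts example

on: push

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - name: Produce a file
        run: |
          mkdir -p reports
          echo "all tests passed" > reports/test-results.txt
      # Upload the contents of reports/ as an artifact named test-results
      - name: Upload the report
        uses: actions/upload-artifact@v4
        with:
          name: test-results
          path: reports/

  consume:
    # Wait for the build job so that the artifact exists
    needs: build
    runs-on: ubuntu-latest
    steps:
      - name: Download the report
        uses: actions/download-artifact@v4
        with:
          name: test-results
          path: reports/
      - name: Show the report
        run: cat reports/test-results.txt
```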

Now that we have a clear understanding of GitHub Actions, let’s write a simple workflow that pulls a Docker image from Docker Hub.

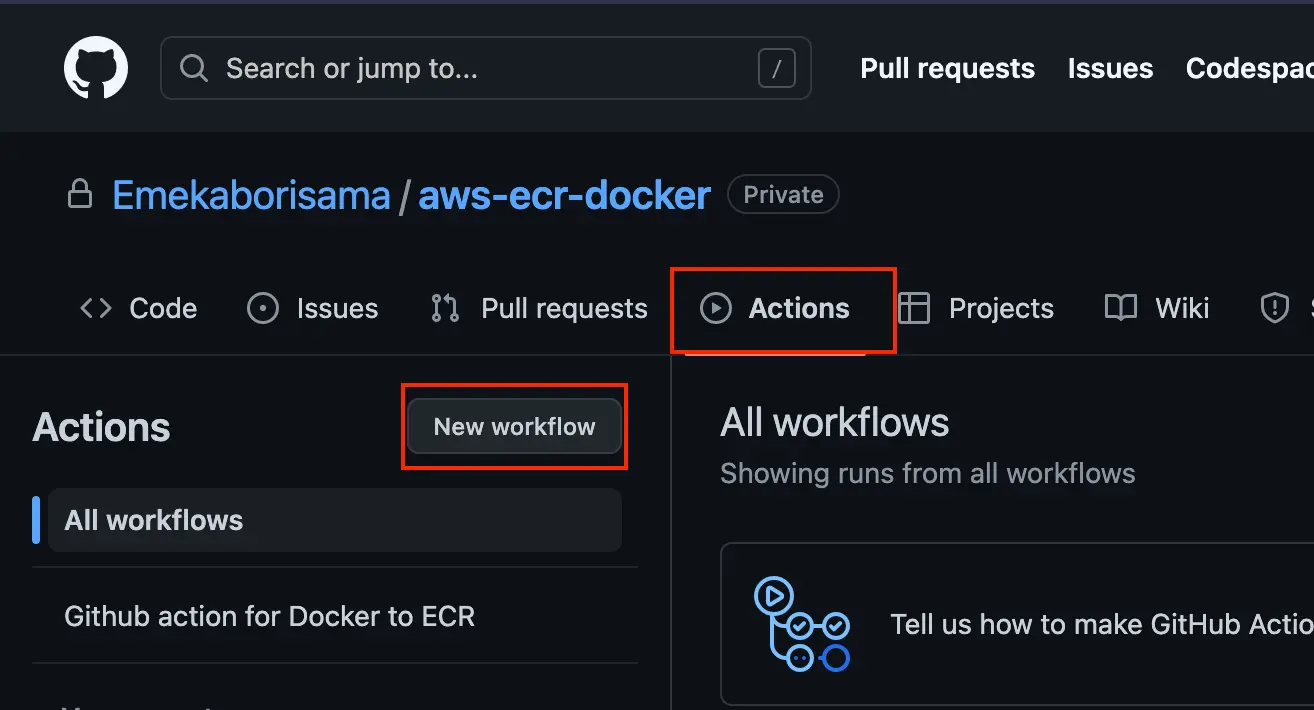

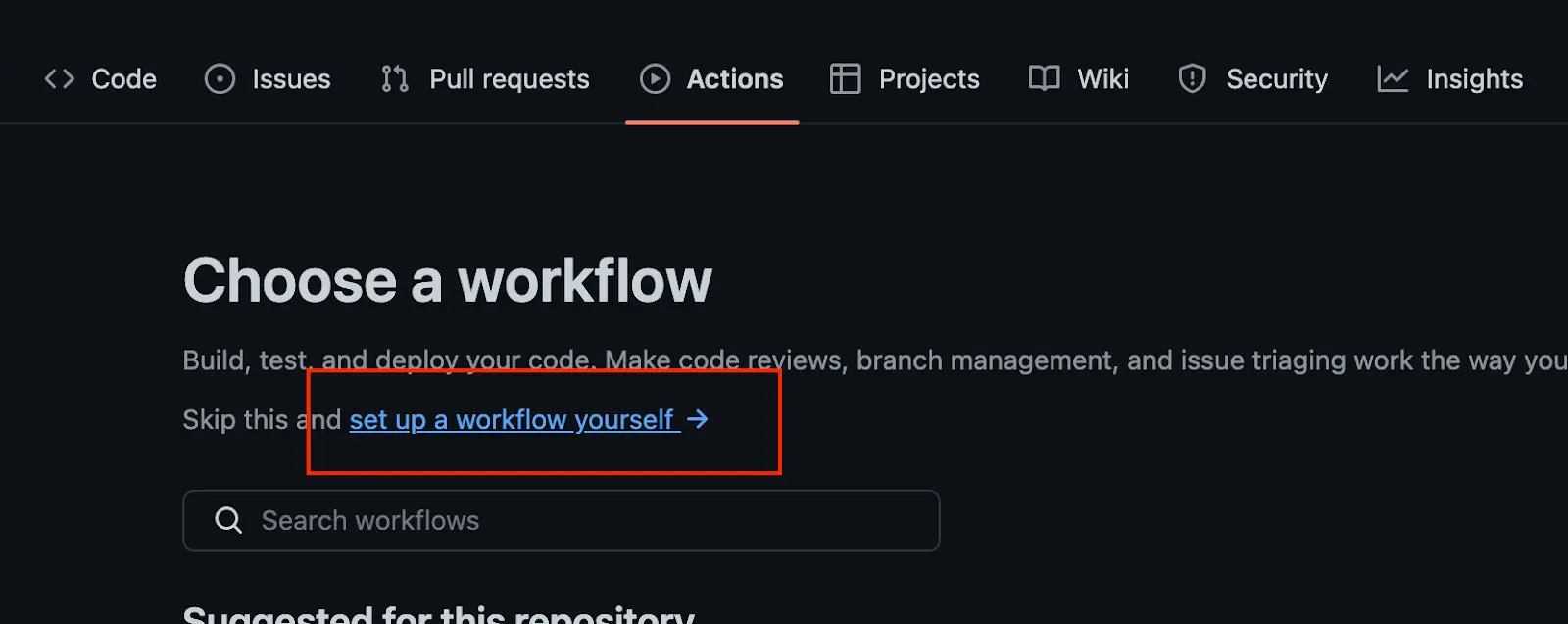

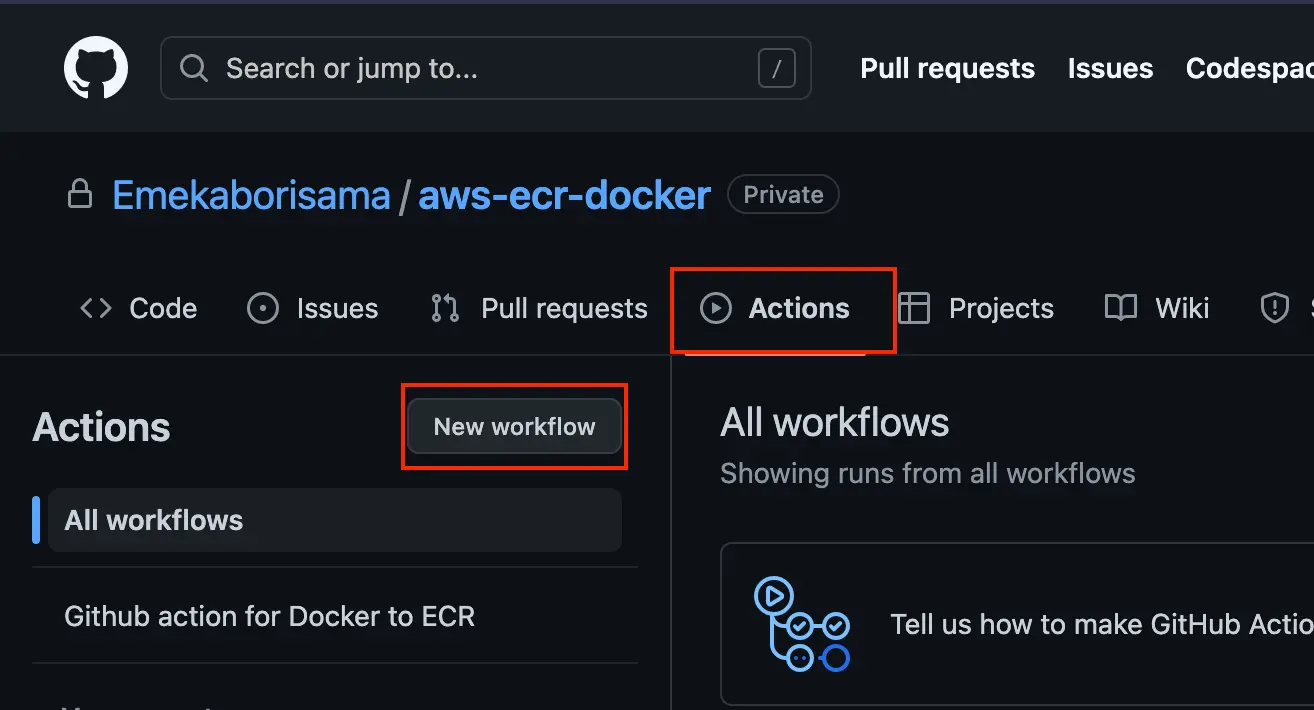

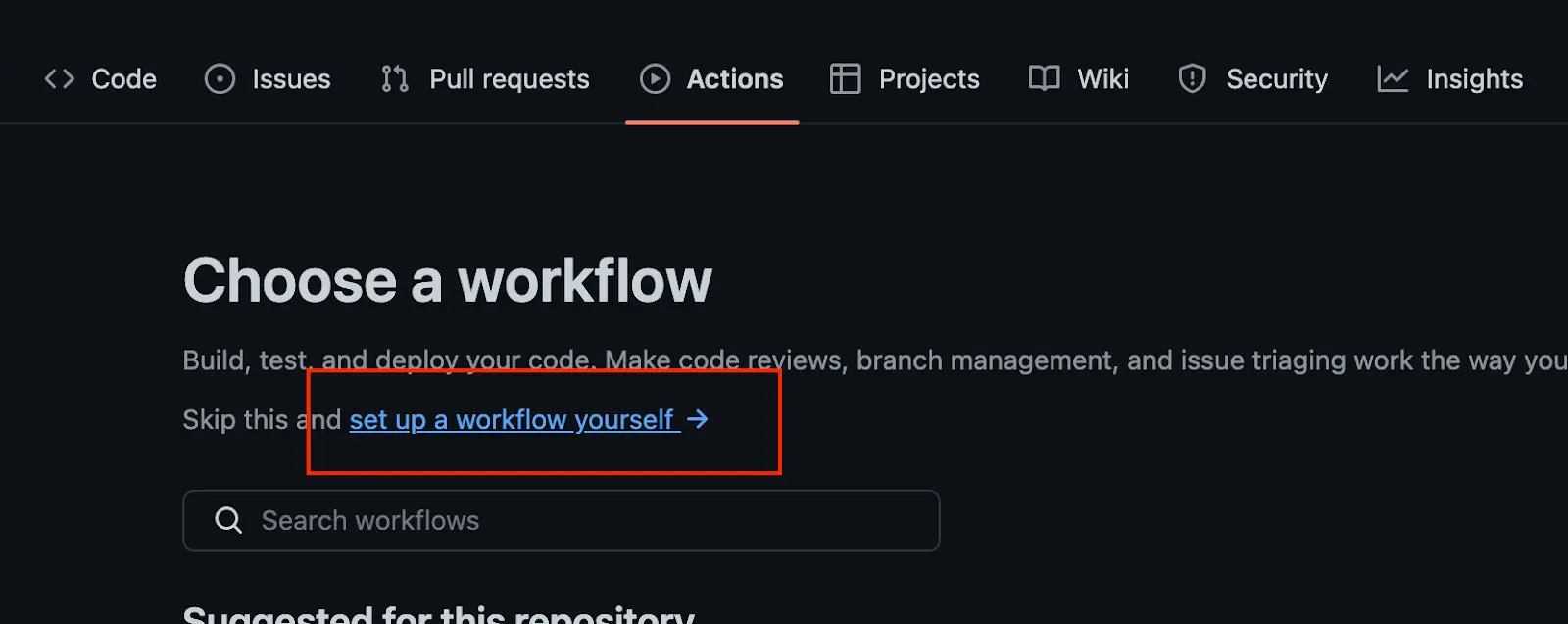

- Step 1: Create a GitHub repository » click on the Actions tab » New workflow » Set up a workflow yourself

- Step 2: Paste the code below into the workflow editor

Note: You can choose your own workflow file name or use the default main.yml

    name: Github action for Docker

    on:
      push:
        branches: master

    jobs:
      build:
        name: Pull Docker image
        runs-on: ubuntu-20.04
        steps:
          # Check out the repository code
          - name: Checkout
            uses: actions/checkout@v2

          - name: Docker_Image_pull
            id: withfreespace
            run: |
              # Pull the TensorFlow Docker image from Docker Hub
              docker pull tensorflow/tensorflow

The workflow above checks out the latest version of the code using the actions/checkout@v2 action. It then pulls the [TensorFlow](https://saturncloud.io/glossary/tensorflow) Docker image with the docker pull tensorflow/tensorflow command. The step is given the id withfreespace so that other steps can refer to it.

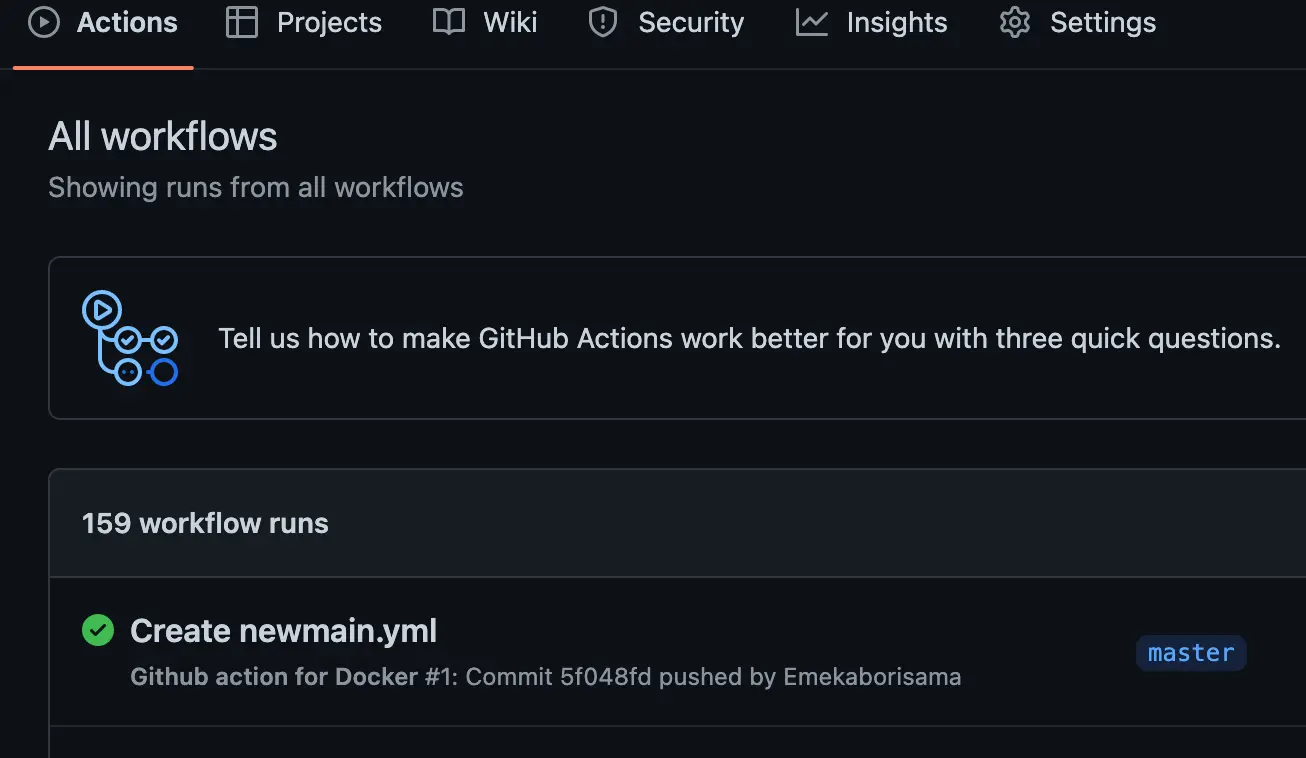

- Step 3: Commit the code and check your job status

Hurray! Our simple GitHub Actions workflow ran successfully. Next, we will set up the AWS actions, then build and push our Docker image to AWS Elastic Container Registry (ECR).

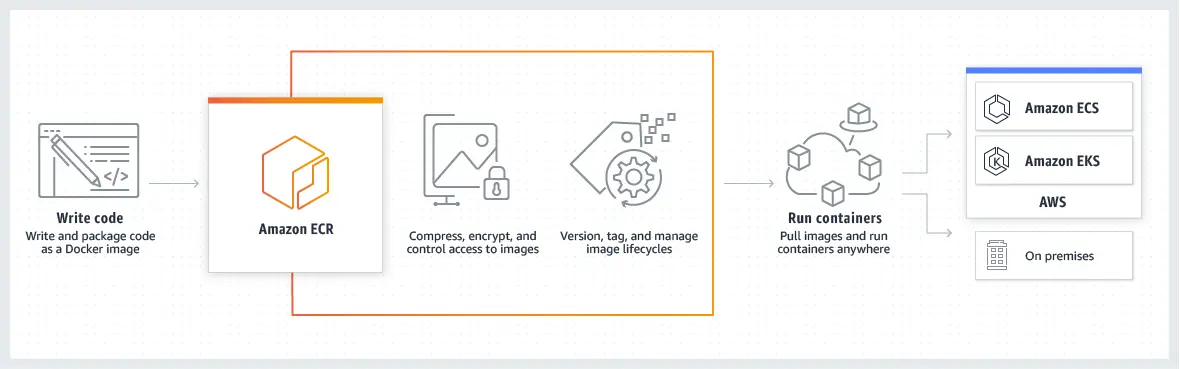

AWS Elastic Container Registry (ECR):

ECR (Elastic Container Registry) is a fully managed container registry that lets you store, manage and deploy your Docker container images. ECR also provides security features that keep your images secure and prevent unauthorized access. For example, you can set up an IAM (AWS Identity and Access Management) policy to control who has access to your private or public repository.

ECR also integrates with other AWS services such as AWS ECS (Elastic Container Service), AWS EC2 (Elastic Compute Cloud) and AWS EKS (Elastic Kubernetes Service), which are popular ways to run containers on AWS. ECR lets you deploy your Docker images to these services easily, which makes it straightforward to run your containers at scale.

How ECR works:

When you publish your Docker image to ECR (Elastic Container Registry), it transfers your container image over HTTPS, encrypts it, creates a version, and stores it on AWS S3 (Simple Storage Service) so that your image is highly available and accessible.

image credit: AWS amazon.com

Additionally, ECR (Elastic Container Registry) supports public and private repositories. Public repositories are available to the general public: images stored in them can be shared with anyone and do not require any authentication or authorization to pull. You can use ECR public repositories to share Docker images with other developers or to distribute images to users outside of your organization. For example, if you have built a Docker image for an open-source project, you can push the image to a public repository in ECR so that anyone can easily pull and use it.

Images stored in a private repository, on the other hand, are mostly for internal use within an organization. You can use ECR private repositories to securely store and manage Docker images for your organization’s applications; access to them requires authentication and authorization.

For this tutorial, we will create only a private ECR repository and write a GitHub Actions workflow that builds a Docker image and publishes it to ECR.

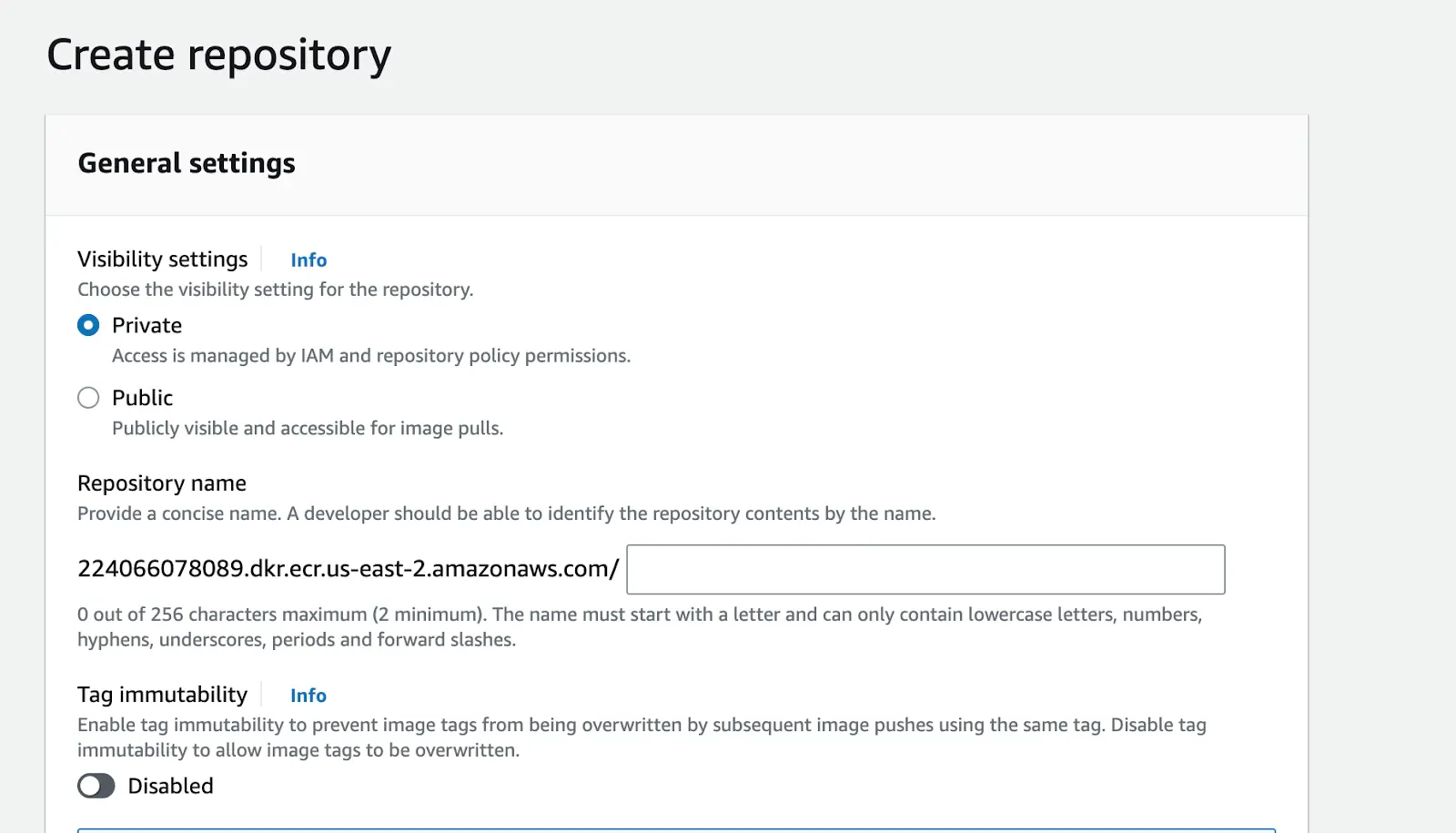

Step 1: Navigate to your AWS Console » Search for ECR » Click on Get started

Step 2: Select Private » Enter your preferred ECR repository name (e.g. st_cloud_first_version) » Create repository

Now that we have successfully created an ECR (Elastic Container Registry) repository, let’s create a GitHub Actions workflow that builds a Docker image and publishes it to the private ECR repository.
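If you would rather not depend on the console, the repository can also be created from a workflow step with the AWS CLI. The step below is a sketch under several assumptions: the AWS CLI is available on the runner, AWS credentials were configured by an earlier step, and the IAM user is allowed to describe and create ECR repositories. The repository name is the example name used in this article.

```yaml
- name: Create the ECR repository if it does not exist
  env:
    ECR_REPOSITORY: st_cloud_first_version
  run: |
    # describe-repositories fails when the repository is missing,
    # in which case create-repository is run instead
    aws ecr describe-repositories --repository-names "$ECR_REPOSITORY" \
      || aws ecr create-repository --repository-name "$ECR_REPOSITORY"
```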

Build and push a Docker image to ECR (Elastic Container Registry) with GitHub Actions

Step 1: Fork this example repository, https://github.com/Emekaborisama/aws-ecr-docker, or create a new repository that contains the same or similar files as the example repository.

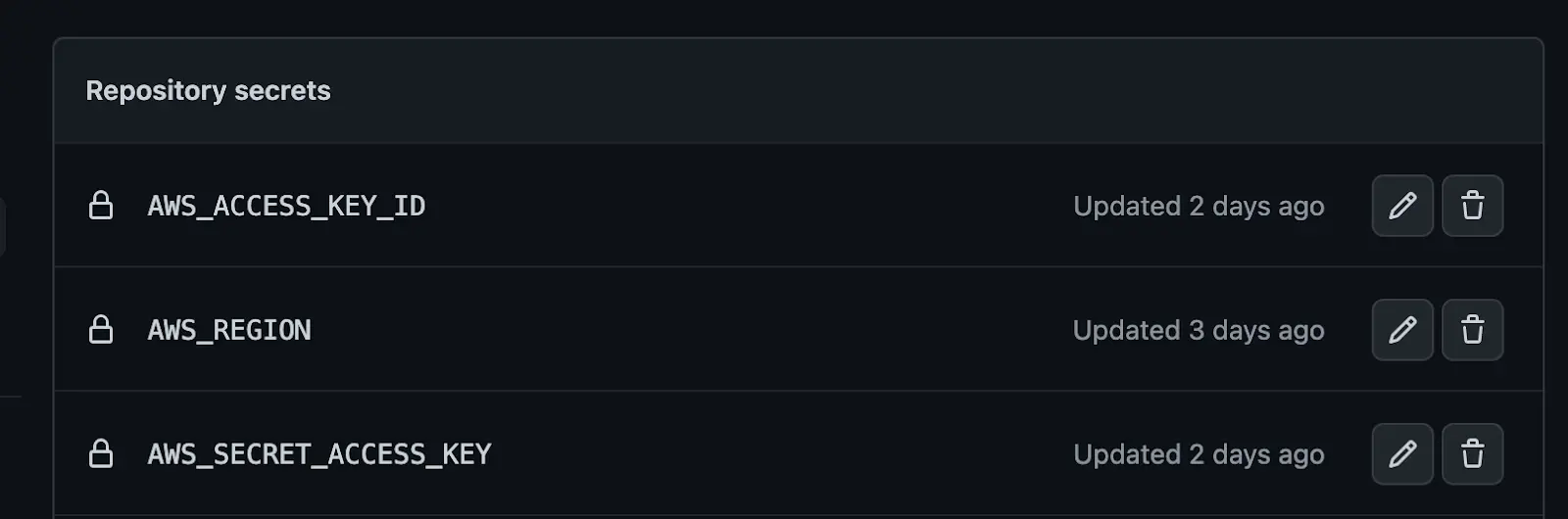

Step 2: Navigate to Settings in your repository » Secrets and variables » Actions » New repository secret

Create a secret for each of the credentials below, using the names the workflow expects:

- AWS_ACCESS_KEY_ID

- AWS_REGION

- AWS_SECRET_ACCESS_KEY

In this step, we store our AWS credentials and the other variables we need so that the workflow can authenticate to ECR (Elastic Container Registry) and publish our image.

Note: Ensure that the IAM user that owns the access key and secret access key has permission to use ECR; otherwise the next step might fail. See the AWS documentation on granting permissions to a user.

Step 3: Navigate to the Actions tab and create a new workflow or edit your current one

Step 4: Paste in the code below

    name: Github docker build and push to ECR

    on:
      push:
        branches: master

    jobs:
      ECRbuild:
        name: Run Docker and Push to ECR
        runs-on: ubuntu-20.04
        steps:
          # Checkout the code
          - name: Checkout
            uses: actions/checkout@v2

          # Configure AWS credentials to access the ECR repository
          - name: AWS cred
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: ${{ secrets.AWS_REGION }}

          # Login to the Amazon ECR registry
          - name: Login to Amazon ECR
            id: login-ecr
            uses: aws-actions/amazon-ecr-login@v1

          # Build the Docker image and push it to the ECR registry
          - name: ECRtask
            id: ECRtaskrun
            env:
              ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
              ECR_REPOSITORY: <input your ECR private repository name>
              IMAGE_TAG: latest
            run: |
              docker build -t $ECR_REPOSITORY .
              # Show the remaining disk space after the build
              df -h
              # Tag the Docker image with the ECR repository URI and push it to ECR
              docker tag $ECR_REPOSITORY:$IMAGE_TAG $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              echo "Pushing image to ECR..."
              docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              # Set the output variable to the URI of the pushed image
              # (GITHUB_OUTPUT replaces the deprecated ::set-output command)
              echo "image=$ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG" >> "$GITHUB_OUTPUT"

The code above builds a Docker image and pushes it to an Amazon Elastic Container Registry (ECR) repository. It is triggered when code is pushed to the master branch of the repository.
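The last command of the ECRtask step records the image URI as a step output named image. As a small sketch, a later step in the same job could read that output through the `steps` context, using the step id ECRtaskrun from the workflow above. The step name here is made up for illustration.

```yaml
- name: Show the pushed image
  run: echo "Pushed ${{ steps.ECRtaskrun.outputs.image }}"
```

This is handy when a following step, such as a deployment, needs the exact image URI that was just pushed.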

Next, commit the code and check that your workflow runs. After that, let’s solve a challenge you are likely to run into when setting up a workflow for a data-intensive application or service.

Handling the GitHub runner out-of-disk-space error:

GitHub provisions a virtual machine for each hosted runner, which at the time of writing has about 30 GB of usable storage and 7 GB of RAM. One of the most common issues that developers and machine learning engineers run into when using a GitHub runner for their workflow is the out-of-disk-space error. This error occurs when the files written to the runner’s disk exceed the available space.

While this error can be frustrating, there are steps you can take to free up or extend the disk space available to your GitHub runner:

- Clean unnecessary files in the runner

- Use a pre-built runner cleaner

- Use a self-hosted runner

Clean unnecessary files in the runner: This step removes any Docker images, SQL and .NET installations, and other auxiliary tools that your workflow does not need.

Here is a simple step that cleans up unnecessary files:

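    - name: clean unnecessary files to save space
      run: |
        # Remove pre-pulled Docker images (ignore the error if there are none)
        docker rmi $(docker images -q) || true
        # Remove tool installations and package source lists that are not needed
        sudo rm -rf /usr/share/dotnet /etc/mysql /etc/php /etc/apt/sources.list.d
        sudo apt -y autoremove --purge
        sudo apt -y autoclean
        sudo apt clean
        # Remove the hosted tool cache
        rm --recursive --force "$AGENT_TOOLSDIRECTORY"
        # Show the remaining disk space
        df -h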

Use a pre-built runner cleaner: A pre-built runner cleaner works well in combination with the first step (cleaning unnecessary files).

The pre-built runner cleaner removes the Android, .NET and Haskell toolchains and other large miscellaneous packages, can clear the tool cache, and removes swap storage.

Here is a simple step that does this:

    - name: Free Disk Space (Ubuntu)
      uses: jlumbroso/free-disk-space@main
      with:
        # this might remove tools that are actually needed,
        # if set to "true" but frees about 6 GB
        tool-cache: false
        large-packages: true
        swap-storage: true

Putting it together, here is what the workflow from Step 4 looks like once the file cleanup step and the pre-built runner cleaner are added:

    name: Github action for Docker to ECR

    on:
      push:
        branches: master

    jobs:
      ECRbuild:
        name: Run Docker and Push to ECR
        runs-on: ubuntu-20.04
        steps:
          # Clean unnecessary files to save disk space
          - name: clean unnecessary files to save space
            run: |
              docker rmi $(docker images -q) || true
              sudo rm -rf /usr/share/dotnet /etc/mysql /etc/php /etc/apt/sources.list.d
              sudo apt -y autoremove --purge
              sudo apt -y autoclean
              sudo apt clean
              rm --recursive --force "$AGENT_TOOLSDIRECTORY"
              df -h

          # Free up disk space on Ubuntu
          - name: Free Disk Space (Ubuntu)
            uses: jlumbroso/free-disk-space@main
            with:
              # This might remove tools that are actually needed, if set to "true" but frees about 6 GB
              tool-cache: false
              large-packages: true
              swap-storage: true

          # Checkout the repository
          - name: Checkout
            uses: actions/checkout@v2

          # Configure AWS credentials
          - name: AWS cred
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: ${{ secrets.AWS_REGION }}

          # Login to Amazon ECR
          - name: Login to Amazon ECR
            id: login-ecr
            uses: aws-actions/amazon-ecr-login@v1

          # Build and push Docker image to ECR
          - name: ECRtask
            id: ECRtaskrun
            env:
              ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
              ECR_REPOSITORY: st_cloud_first_version
              IMAGE_TAG: latest
            run: |
              df -h
              ls
              echo ${GITHUB_WORKSPACE}
              #docker rmi `docker images -q`
              docker build -t $ECR_REPOSITORY .
              docker tag $ECR_REPOSITORY:$IMAGE_TAG $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              echo "Pushing image to ECR..."
              docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              # Set the output variable to the URI of the pushed image
              # (GITHUB_OUTPUT replaces the deprecated ::set-output command)
              echo "image=$ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG" >> "$GITHUB_OUTPUT"

PS: Using this technique, I ended up with 49 GB of free disk space on my GitHub runner (an extra 19 GB).
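To see how much space the cleanup frees on your own runner, and to fail early instead of midway through a long Docker build, you could add a check like the one sketched below. The 20 GB threshold is a hypothetical value; pick one that matches the size of your image. The step assumes a Linux runner with GNU df.

```yaml
- name: Check free disk space
  run: |
    required_gb=20   # hypothetical threshold, adjust to your build
    # Available space on the root filesystem, in whole gigabytes
    free_gb=$(df --output=avail -BG / | tail -n 1 | tr -dc '0-9')
    echo "Free space on /: ${free_gb} GB"
    if [ "$free_gb" -lt "$required_gb" ]; then
      # ::error:: surfaces the message as an annotation on the run
      echo "::error::Less than ${required_gb} GB of free disk space"
      exit 1
    fi
```

Placing this step right after the cleanup steps gives a before-the-build reading that you can compare across runs.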

Use a self-hosted runner:

If you have tried the first two options and are still running out of disk space, use a self-hosted runner. Self-hosted runners are particularly useful when you need to run workflows in a specific environment, and they give you full control over the runner and the environment in which it runs.
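In its simplest form, targeting a self-hosted runner only requires changing `runs-on`. The sketch below assumes a runner that is already registered with your repository and carries the default labels self-hosted, linux and x64; the job name is a placeholder.

```yaml
jobs:
  build:
    # Route the job to a registered self-hosted runner with these labels
    runs-on: [self-hosted, linux, x64]
    steps:
      - uses: actions/checkout@v4
      # Show how much disk space the self-hosted machine has
      - run: df -h
```

The rest of this section takes a different route and creates the runner on demand, so that the EC2 instance only exists while a job needs it.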

Let’s setup a self-hosted runner with AWS EC2:

Before we begin, we need to get our Github token and store it as a secret in our repository

Step 1: Obtain and store your Github token as Secret:

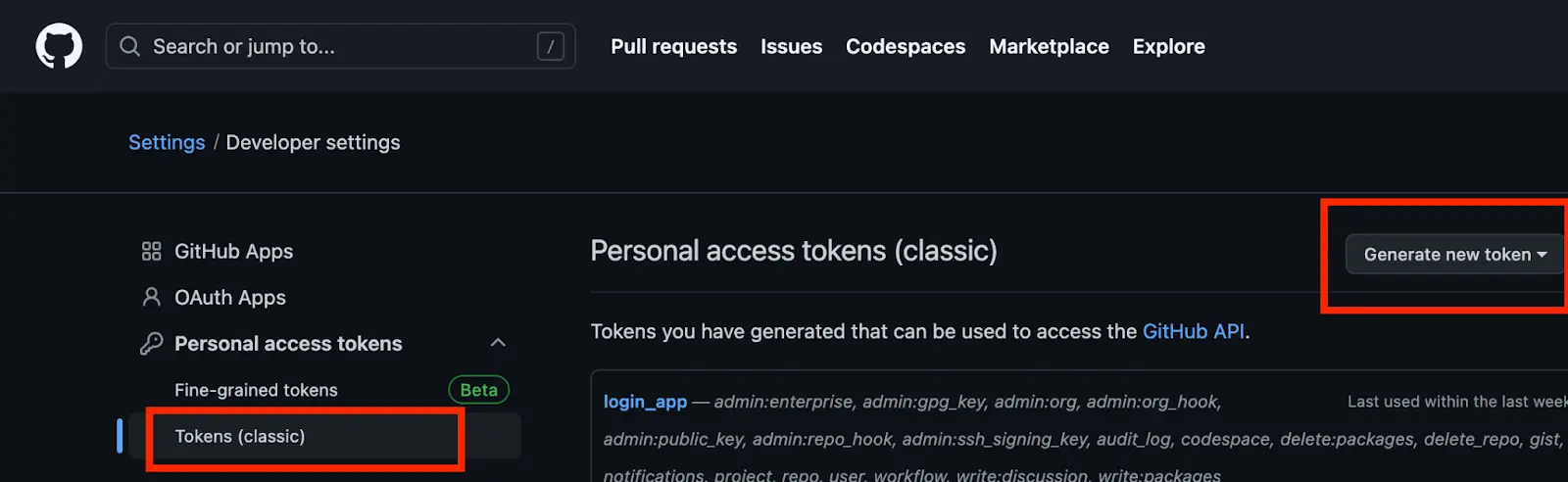

- Navigate to your Github account setting »> Developer setting »> Personal Access token »> Token (Classic)

- Add the token to your Github repository Secret

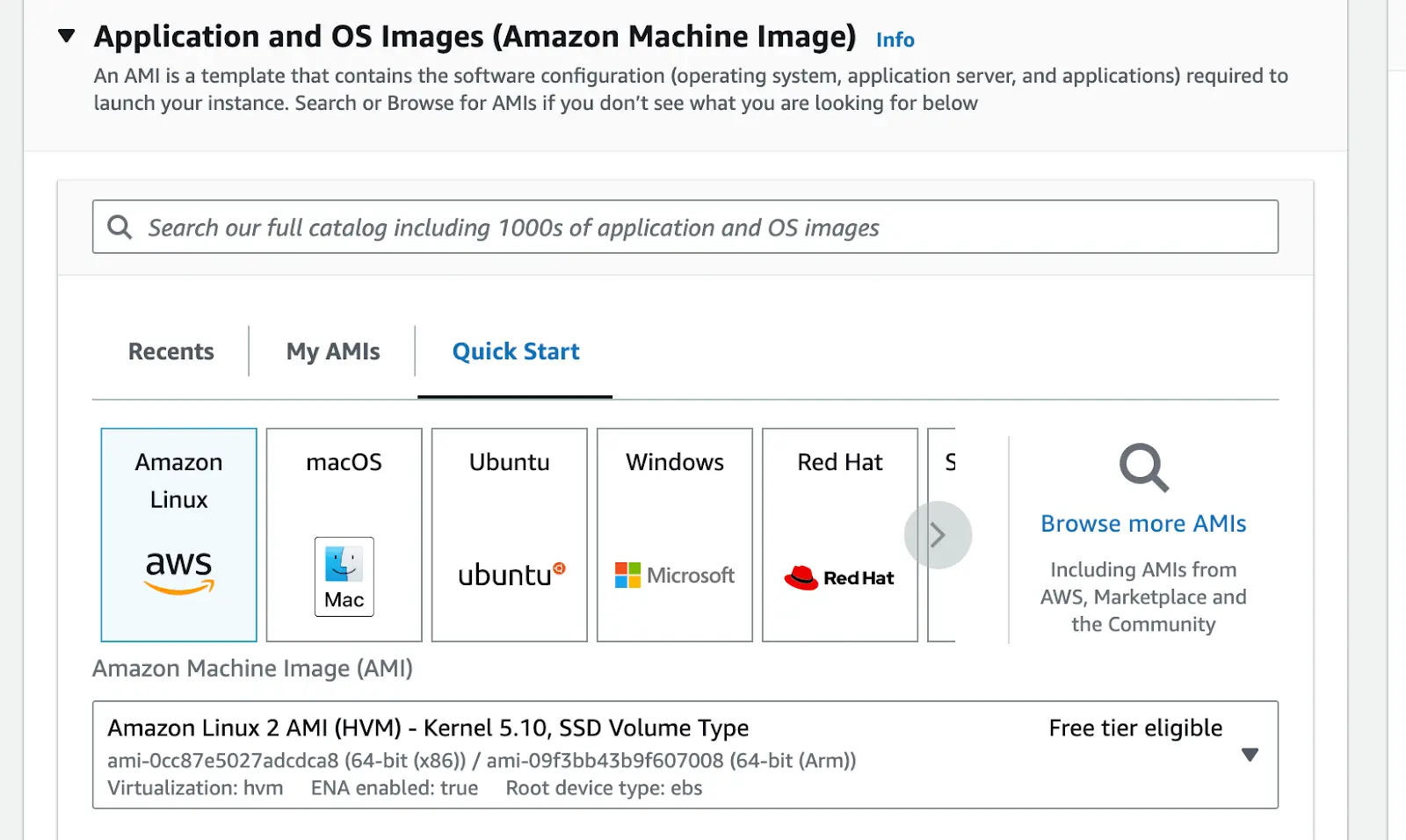

Step 2: Set up AWS EC2

- Use Amazon Linux as the instance OS

- Configure your preferred EBS storage size and instance type, and select your preferred VPC and subnet

- Ensure the outbound rules of your security group (both VPC and EC2) allow port 443

- Connect to your EC2 instance via SSH and install the tools or dependencies your workflow requires

For our example workflow we need git and docker. To install them, use the command below.

    sudo yum update -y && \
    sudo yum install docker -y && \
    sudo yum install git -y && \
    sudo systemctl enable docker

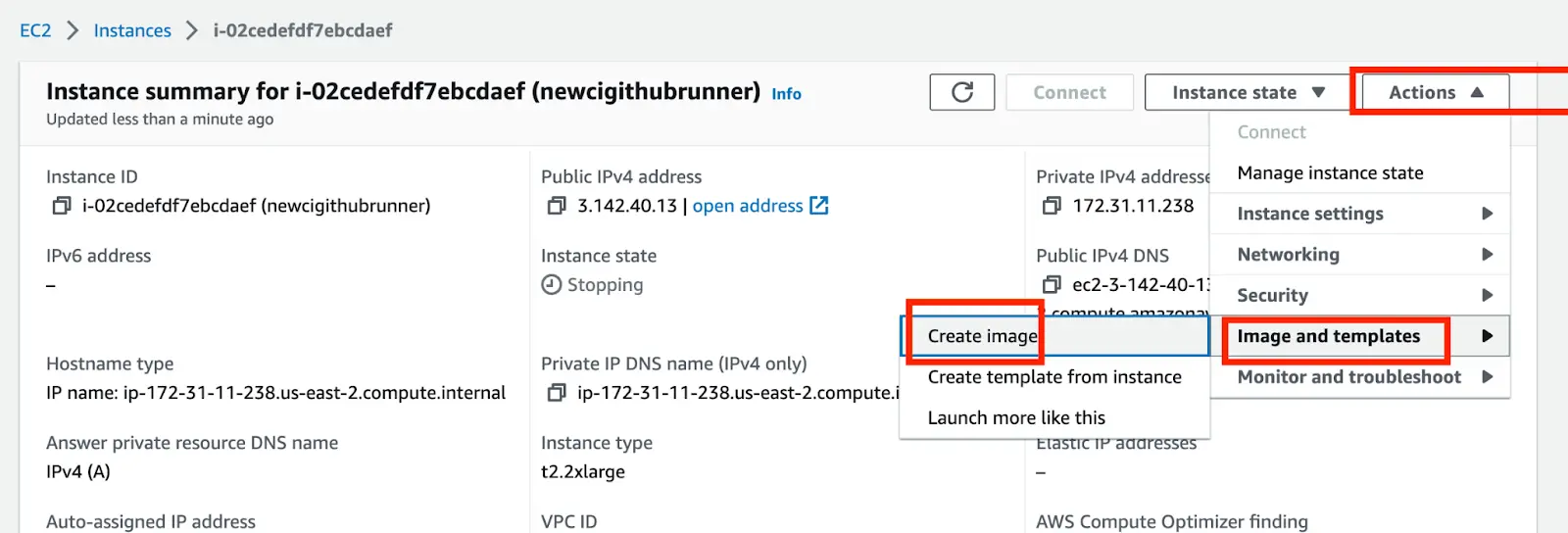

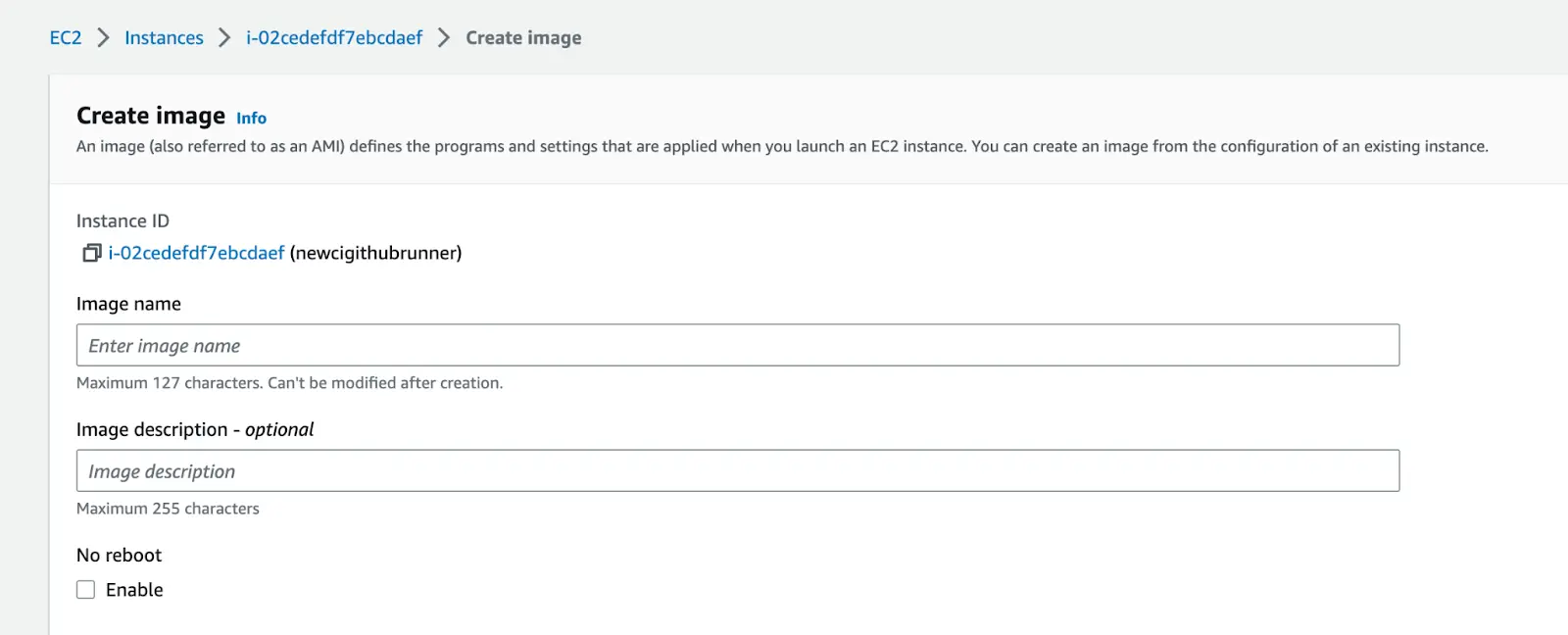

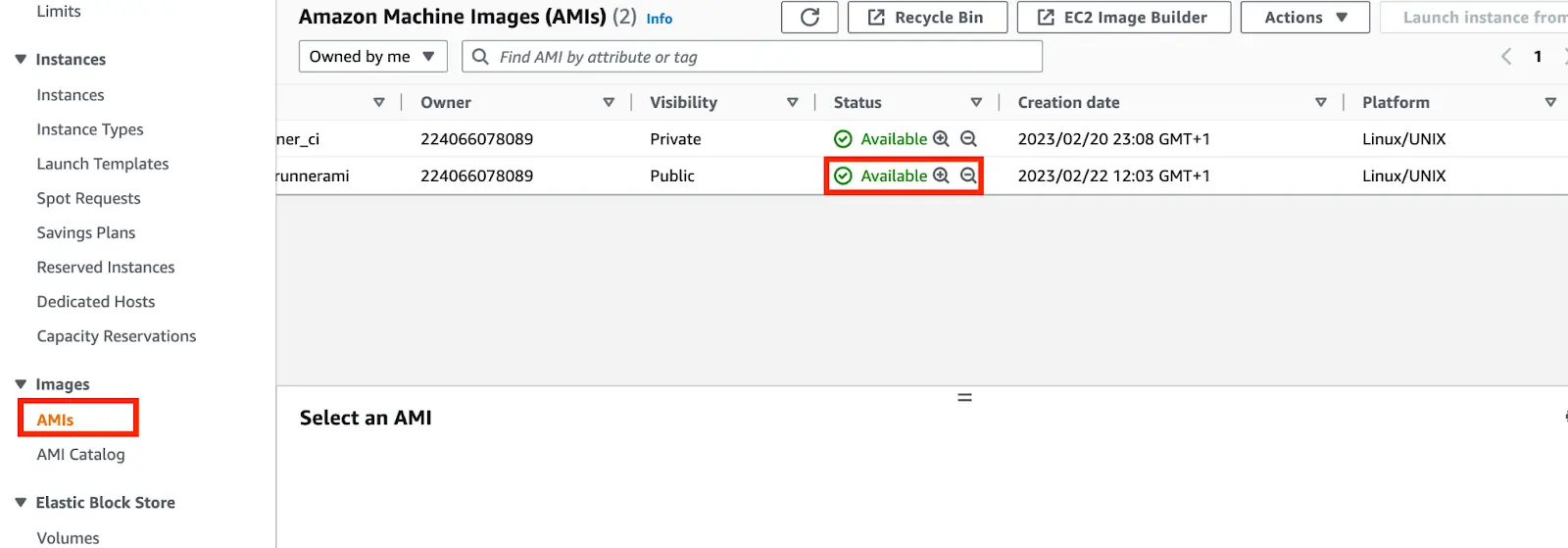

Step 3: Create an AMI from the Instance created:

- Navigate to the instance you have created »> Actions »> Image and Templates »> Create Image

- Enter your preferred image name and description » Create Image.

- Once the image is created, navigate to AMIs in your EC2 console to view your image.

- You can now terminate the EC2 instance.

Configure your Github action to use Self hosted runner:

Paste the script below into the workflow editor:

    name: Github action for Docker to ECR

    on:
      push:
        branches: master

    jobs:
      start-runner:
        name: Start self-hosted EC2 runner
        needs: ECRbuild
        if: ${{ failure() && needs.ECRbuild.steps.ECRTask.outcome != 'success' }}
        runs-on: ubuntu-latest
        outputs:
          label: ${{ steps.start-ec2-runner.outputs.label }}
          ec2-instance-id: ${{ steps.start-ec2-runner.outputs.ec2-instance-id }}
        steps:
          - name: Configure AWS credentials
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: ${{ secrets.AWS_REGION }}

          - name: Start EC2 runner
            id: start-ec2-runner
            uses: machulav/ec2-github-runner@v2
            with:
              mode: start
              github-token: ${{ secrets.GH_SECRET }}
              ec2-image-id: ami-0dxxxxxxxxx
              ec2-instance-type: t2.2xlarge
              subnet-id: subnet-08xxxxxxx
              security-group-id: sg-06xxxxxx
              # iam-role-name: my-role-name # optional, requires additional permissions
              aws-resource-tags: > # optional, requires additional permissions
                [
                  {"Key": "Name", "Value": "ec2-github-runner"},
                  {"Key": "GithubRepository", "Value": "${{ github.repository }}"}
                ]

      ECRbuild:
        name: Run Docker and Push to ECR
        runs-on: ubuntu-20.04
        steps:
          - name: clean unnecessary files to save space
            run: |
              docker rmi $(docker images -q) || true
              sudo rm -rf /usr/share/dotnet /etc/mysql /etc/php /etc/apt/sources.list.d
              sudo apt -y autoremove --purge
              sudo apt -y autoclean
              sudo apt clean
              rm --recursive --force "$AGENT_TOOLSDIRECTORY"
              df -h

          - name: Free Disk Space (Ubuntu)
            uses: jlumbroso/free-disk-space@main
            with:
              # this might remove tools that are actually needed,
              # if set to "true" but frees about 6 GB
              tool-cache: false
              large-packages: true
              swap-storage: true

          - name: Checkout
            uses: actions/checkout@v2
            continue-on-error: true

          - name: AWS cred
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: ${{ secrets.AWS_REGION }}
            continue-on-error: true

          - name: Login to Amazon ECR
            id: login-ecr
            uses: aws-actions/amazon-ecr-login@v1
            continue-on-error: true

          - name: ECRtask
            id: withfreespace
            env:
              ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
              ECR_REPOSITORY: st_cloud_first_version
              IMAGE_TAG: latest
            run: |
              echo "Free space:"
              df -h
              ls
              docker build -t $ECR_REPOSITORY .
              docker tag $ECR_REPOSITORY:$IMAGE_TAG $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              echo "Pushing image to ECR..."
              docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              # Set the output variable to the URI of the pushed image
              # (GITHUB_OUTPUT replaces the deprecated ::set-output command)
              echo "image=$ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG" >> "$GITHUB_OUTPUT"

      ec2-runner-task:
        name: run job
        needs:
          - start-runner
          - ECRbuild
        if: ${{ failure() && needs.ECRbuild.steps.ECRTask.outcome != 'success' }}
        runs-on: ${{ needs.start-runner.outputs.label }} # run the job on the newly created runner
        steps:
          - name: Checkout
            uses: actions/checkout@v2

          - name: AWS cred
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: ${{ secrets.AWS_REGION }}

          - name: Login to Amazon ECR
            id: login-ecr
            uses: aws-actions/amazon-ecr-login@v1

          - name: Run task
            env:
              ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
              ECR_REPOSITORY: st_cloud_first_version
              IMAGE_TAG: latest
            run: |
              echo "Free space:"
              df -h
              ls
              docker build -t $ECR_REPOSITORY .
              docker tag $ECR_REPOSITORY:$IMAGE_TAG $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              echo "Pushing image to ECR..."
              docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
              # Set the output variable to the URI of the pushed image
              # (GITHUB_OUTPUT replaces the deprecated ::set-output command)
              echo "image=$ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG" >> "$GITHUB_OUTPUT"

      stop-runner:
        name: Stop self-hosted EC2 runner
        needs:
          - start-runner # required to get output from the start-runner job
          - ec2-runner-task
        runs-on: ubuntu-latest
        if: ${{ always() }} # required to stop the runner even if the error happened in the previous jobs
        steps:
          - name: Configure AWS credentials
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: ${{ secrets.AWS_REGION }}

          - name: Stop EC2 runner
            uses: machulav/ec2-github-runner@v2
            with:
              mode: stop
              github-token: ${{ secrets.GH_SECRET }}
              label: ${{ needs.start-runner.outputs.label }}
              ec2-instance-id: ${{ needs.start-runner.outputs.ec2-instance-id }}
            continue-on-error: true

The workflow above builds a Docker image and pushes it to Amazon Elastic Container Registry (ECR). If the job on the GitHub runner fails, for example with an out-of-disk-space error, it spins up the self-hosted EC2 runner and runs the build there. The fallback is controlled by this condition on the start-runner and ec2-runner-task jobs:

if: ${{ failure() && needs.ECRbuild.steps.ECRTask.outcome != 'success' }}

The workflow is triggered by push events to the master branch and consists of four jobs: ECRbuild, start-runner, ec2-runner-task and stop-runner.

- ECRbuild builds the Docker image, pushes it to ECR and outputs the image’s name.

- start-runner creates a self-hosted EC2 instance and outputs the runner label and the instance ID.

- ec2-runner-task builds and pushes the Docker image on the self-hosted EC2 instance created by start-runner.

- stop-runner stops the self-hosted EC2 instance after the workflow has run, using the label and instance ID from start-runner.
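One caveat about the condition used in the workflow: the `needs` context exposes only the `result` and `outputs` of a job, not its individual steps, so in practice the fallback is driven by `failure()`. If you want the condition to name the failed job explicitly, a possible variant is sketched below. This is an alternative shown for illustration, not the configuration used in this article; only the keys that change are shown.

```yaml
jobs:
  start-runner:
    needs: ECRbuild
    # always() turns off the implicit success() check so the rest is evaluated
    if: ${{ always() && needs.ECRbuild.result == 'failure' }}

  ec2-runner-task:
    needs: [start-runner, ECRbuild]
    # Run only when the hosted build failed and the EC2 runner actually started
    if: ${{ always() && needs.ECRbuild.result == 'failure' && needs.start-runner.result == 'success' }}
```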

The start-runner job requires the following inputs:

- EC2 image ID (ec2-image-id): Your AMI ID (e.g. ami-0dxxxxxxxxx)

- EC2 instance type (ec2-instance-type): Your EC2 instance type (e.g. t2.2xlarge)

- Subnet ID (subnet-id): Your EC2 subnet ID (e.g. subnet-08xxxxxxx)

- Security group ID (security-group-id): Your EC2 security group ID (e.g. sg-06xxxxxx)

Resources:

https://github.com/marketplace/actions/on-demand-self-hosted-aws-ec2-runners-for-github-actions

https://faun.pub/setting-up-github-self-hosted-runners-on-ec2-24ad1bd2248c

https://docs.github.com/en/actions/using-workflows/workflow-syntax-for-github-actions
