Building a Full Stack AI Platform on Bare Metal with k0rdent and Saturn Cloud

Bare metal GPU providers compete on price and availability, but customers increasingly expect more than SSH access to servers. They want the platform experience they get from Amazon SageMaker or Google Cloud Vertex AI. The trend is clear: CoreWeave acquired Weights & Biases for $1.7B, DigitalOcean acquired Paperspace, and Lightning AI merged with Voltage Park. GPU providers need platform layers, not just compute.

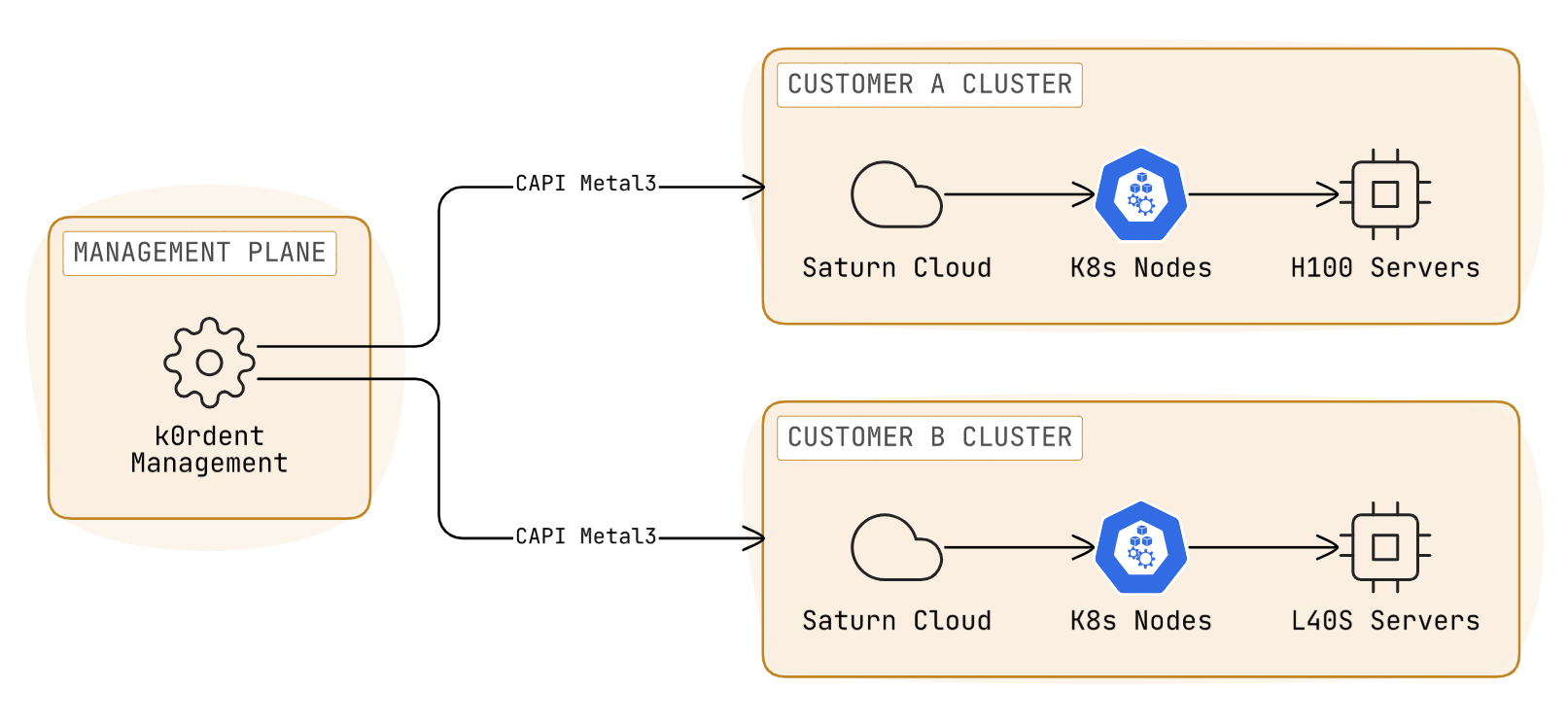

The question is how to build this stack without replicating the engineering effort AWS put into their ML platform. This post describes one approach: using Mirantis k0rdent to manage bare metal infrastructure and Kubernetes clusters, with Saturn Cloud installed on top to provide the AI platform layer.

The high-level picture

What you’re probably doing now: A customer fills out a form requesting 8x H200s. Someone on your team identifies available hardware, images the servers (or wipes the previous tenant’s data), configures VLANs for network isolation, and emails the customer an IP address and root password. When the customer asks how to set up InfiniBand for multi-node training, you send documentation. When they ask about idle detection, you tell them to write a script. When they ask about notebooks, you suggest installing JupyterHub. Meanwhile, you have no visibility into whether those GPUs are actually running workloads or sitting idle, and the customer is paying either way.

Here’s what changes with k0rdent + Saturn Cloud:

What you have: Racks of GPU servers with BMC access (IPMI or Redfish), network infrastructure, storage systems, and customers who want more than SSH access.

What you install:

- k0rdent management cluster (runs on a control node, manages your bare metal fleet)

- Saturn Cloud (Helm charts deployed into Kubernetes clusters that k0rdent creates)

What your customers get: A web interface where they can launch JupyterLab environments, run training jobs, deploy models, and track GPU usage. No Kubernetes knowledge required.

What you manage: Hardware (GPUs, network, storage), and k0rdent configuration. Saturn Cloud handles the application platform, user authentication, and workload scheduling.

The division of labor is straightforward. k0rdent turns bare metal servers into managed Kubernetes clusters. Saturn Cloud turns those clusters into an AI development platform. You provide the GPUs, network, and storage, and both tools handle the rest.

The shift from manual to automated

| Current approach | With k0rdent + Saturn Cloud |
|---|---|
| Email threads to provision servers | API calls to create resources |
| Manual server imaging and VLAN configuration | Automated via Metal3 and Cluster API |
| Send customers IP addresses and SSH keys | Send customers a login URL |
| Customer installs drivers, CUDA, networking stack | Pre-configured in cluster images |
| Customer configures InfiniBand/RoCE for multi-node | k0rdent provides RDMA drivers, Saturn Cloud orchestrates training jobs |
| No visibility into GPU utilization | Usage tracking and idle detection built-in |
| Customer pays for idle GPUs | Automatic shutdown after idle period |
| Support tickets for “How do I run Jupyter?” | Self-service dev environments |

The difference is moving from a “concierge infrastructure” model (where every request requires human intervention) to a cloud-native platform where customers can self-service and you can see what’s actually running on your hardware.

What bare metal GPU providers need to deliver

AI teams need more than SSH access to GPU servers. They need:

- Development environments with JupyterLab, VSCode, persistent storage, and GPU access

- Job orchestration for training runs and batch inference

- Multi-node distributed training with proper network configuration

- Deployment infrastructure for serving models

- SSO integration and usage tracking by user/project

- Idle detection to prevent GPU waste

Building all of this from scratch requires significant engineering effort. Kubernetes provides some of the primitives (pods, services, persistent volumes), but you still need to build the control plane, web interface, authentication, and GPU scheduling logic.

The k0rdent layer: Infrastructure and cluster management

k0rdent manages the infrastructure layer. It orchestrates bare metal servers and creates Kubernetes clusters on top of them using the Cluster API and Metal3. While this post focuses on bare metal deployments, k0rdent also supports AWS, Azure, GCP, vSphere, and OpenStack, allowing you to manage hybrid GPU infrastructure (bare metal H200s or GB200s alongside cloud instances) through a single control plane.

What k0rdent handles

k0rdent uses Metal3 to represent each physical server as a BareMetalHost object in Kubernetes. These are abstracted into ProviderTemplates and ClusterDeployments, so users interact with high-level templates rather than raw Metal3 objects. The system manages servers through BMC protocols (IPMI or Redfish) and configures machines via iPXE network boot.
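To make the BareMetalHost idea concrete, here is a minimal sketch that builds one such manifest as a plain Python dict. The `apiVersion`, `kind`, and `spec` fields follow the upstream Metal3 resource; the host name, BMC address, MAC address, and secret name are hypothetical placeholders, and a real k0rdent setup may wrap or generate these objects differently.

```python
import json


def bare_metal_host(name, bmc_address, boot_mac, secret_name):
    """Build a Metal3 BareMetalHost manifest as a plain dict."""
    return {
        "apiVersion": "metal3.io/v1alpha1",
        "kind": "BareMetalHost",
        "metadata": {"name": name},
        "spec": {
            "online": True,               # power the host on once registered
            "bootMACAddress": boot_mac,   # NIC used for network boot
            "bmc": {
                "address": bmc_address,          # Redfish or IPMI endpoint
                "credentialsName": secret_name,  # Secret holding BMC login
            },
        },
    }


# Hypothetical values for illustration only.
host = bare_metal_host(
    name="gpu-node-01",
    bmc_address="redfish://10.0.0.11/redfish/v1/Systems/1",
    boot_mac="aa:bb:cc:dd:ee:01",
    secret_name="gpu-node-01-bmc",
)
print(json.dumps(host, indent=2))
```

The BMC username and password live in a separate Kubernetes Secret referenced by `credentialsName`, so the host definition itself can be kept in version control.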

When you need a new Kubernetes cluster, k0rdent:

- Allocates available BareMetalHost objects from the pool

- Images the machines with the required OS and Kubernetes components via network boot

- Configures networking (DHCP, VIPs via keepalived)

- Installs the Kubernetes control plane and worker nodes

- Registers the cluster in the management plane
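The allocation step in the list above can be pictured as simple bookkeeping over a pool of hosts. The sketch below is a simplified model and not k0rdent or Metal3 code: the `Host` class, the two states, and the `allocate` and `reclaim` helpers are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    name: str
    state: str = "available"      # simplified: "available" or "provisioned"
    tenant: Optional[str] = None


def allocate(pool: List[Host], tenant: str, count: int) -> List[Host]:
    """Claim `count` available hosts for a tenant's cluster."""
    free = [h for h in pool if h.state == "available"]
    if len(free) < count:
        raise RuntimeError(f"need {count} hosts, only {len(free)} available")
    chosen = free[:count]
    for h in chosen:
        h.state = "provisioned"
        h.tenant = tenant
    return chosen


def reclaim(pool: List[Host], tenant: str) -> List[str]:
    """Return a tenant's hosts to the pool (wiping happens elsewhere)."""
    released = []
    for h in pool:
        if h.tenant == tenant:
            h.state = "available"
            h.tenant = None
            released.append(h.name)
    return released


pool = [Host(f"gpu-node-{i:02d}") for i in range(1, 5)]
cluster_a = allocate(pool, "tenant-a", 2)
print([h.name for h in cluster_a])
```

In the real system the state transitions are driven by controllers reconciling Kubernetes objects rather than by direct function calls, but the invariant is the same: a host belongs to at most one cluster at a time.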

The key components are:

- baremetal-operator: Manages BareMetalHost lifecycle

- cluster-api-provider-metal3: CAPI provider for Metal3 infrastructure

- ironic: Handles bare metal imaging and boot management (includes MariaDB, DHCP, TFTP, HTTP servers)

A single k0rdent management cluster can create and manage hundreds of downstream Kubernetes clusters across your bare metal infrastructure.

Multi-tenancy for GPU providers: One of the hardest problems for bare metal GPU clouds is secure multi-tenancy. With raw servers, you need to manually configure VLANs, wipe drives between customers, and manage network isolation. k0rdent handles this by creating dedicated Kubernetes clusters per tenant (or per project). Each cluster gets its own isolated network fabric, dedicated nodes, and complete separation from other tenants. When a customer churns, k0rdent can reclaim the machines, securely wipe them, and return them to the available pool for the next tenant. This cluster-per-tenant model provides stronger isolation than namespace-based multi-tenancy while keeping management overhead low.

What k0rdent doesn’t handle

k0rdent provisions Kubernetes clusters, but it doesn’t know anything about AI workloads. It doesn’t provide:

- User-facing development environments

- Job scheduling and orchestration

- Model deployment and serving

- Usage tracking or cost allocation

- Application-level authentication

This is where Saturn Cloud fits in.

The Saturn Cloud layer: AI platform on Kubernetes

Saturn Cloud installs into Kubernetes clusters as a set of Helm charts and operators. It provides the 90% of platform functionality that every AI team needs: dev environments, jobs, deployments, and operational tooling.

What Saturn Cloud provides

Development environments: JupyterLab, RStudio, and VSCode instances with GPU access. Users can start a notebook server, write code, and test models without waiting for infrastructure provisioning.

Job orchestration: Run training jobs, batch inference, or data processing pipelines. Saturn Cloud handles job queueing, resource allocation, and retry logic. If a job fails, you get logs and error details without debugging Kubernetes pod states.

Distributed training coordination: Multi-node PyTorch or DeepSpeed jobs require specific environment variables (RANK, WORLD_SIZE, MASTER_ADDR) and high-speed networking. k0rdent ensures nodes have the correct RDMA drivers and network stack (InfiniBand or RoCE), while Saturn Cloud orchestrates the training job configuration and environment variables based on the number of GPUs requested.
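As an illustration of what those environment variables look like, the following sketch derives them for every worker process from a node count and a GPUs-per-node count. It is not Saturn Cloud's implementation; the function name and the `worker-0` address are hypothetical, and port 29500 is only the conventional PyTorch default.

```python
def torch_dist_env(num_nodes, gpus_per_node, master_addr, master_port=29500):
    """Return one environment dict per worker process (one process per GPU)."""
    world_size = num_nodes * gpus_per_node
    envs = []
    for node_rank in range(num_nodes):
        for local_rank in range(gpus_per_node):
            envs.append({
                # Global rank: unique across all processes in the job.
                "RANK": str(node_rank * gpus_per_node + local_rank),
                # Local rank: index of the GPU on this node.
                "LOCAL_RANK": str(local_rank),
                "WORLD_SIZE": str(world_size),
                "MASTER_ADDR": master_addr,
                "MASTER_PORT": str(master_port),
            })
    return envs


# Two nodes with eight GPUs each; "worker-0" is a placeholder hostname.
envs = torch_dist_env(num_nodes=2, gpus_per_node=8, master_addr="worker-0")
print(envs[9])
```

Every process sees the same `WORLD_SIZE`, `MASTER_ADDR`, and `MASTER_PORT`, while `RANK` and `LOCAL_RANK` differ per process. Getting these wrong by hand is a common source of hung multi-node jobs, which is why having the platform generate them is useful.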

Model deployments: Long-running inference endpoints with autoscaling. This includes NVIDIA NIM containers, custom FastAPI services, or vLLM deployments.

Usage tracking: Time-series data on GPU usage by user and project. This matters for chargeback, capacity planning, and identifying idle resources.
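For chargeback, the underlying arithmetic is an aggregation of GPU-hours per user and project. The sketch below assumes a hypothetical record format (epoch-second `start` and `end`, plus a `gpus` count); Saturn Cloud's actual data model is not described in this post.

```python
from collections import defaultdict


def gpu_hours(records):
    """Sum GPU-hours per (user, project) from usage records."""
    totals = defaultdict(float)
    for r in records:
        hours = (r["end"] - r["start"]) / 3600
        totals[(r["user"], r["project"])] += hours * r["gpus"]
    return dict(totals)


# Hypothetical usage records; timestamps are seconds since an arbitrary epoch.
records = [
    {"user": "alice", "project": "llm", "start": 0, "end": 7200, "gpus": 8},
    {"user": "alice", "project": "llm", "start": 7200, "end": 9000, "gpus": 8},
    {"user": "bob", "project": "vision", "start": 0, "end": 3600, "gpus": 1},
]
totals = gpu_hours(records)
print(totals)
```

Multiplying each total by an hourly GPU rate gives a per-project bill, and the same totals feed capacity planning.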

Idle detection: Automatically shut down dev environments after a configurable idle period. GPUs are expensive, and forgotten notebook servers add up quickly.
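One simple way to express an idle rule is "GPU utilization stayed below a threshold for the whole idle period". The sketch below implements that rule over a list of samples; the function name, the 5% threshold, and the sample format are assumptions, and a production detector would likely also consider signals such as notebook activity or open terminals.

```python
def is_idle(samples, now, idle_seconds, threshold=5.0):
    """Decide whether a workload has been idle for the full idle period.

    samples: list of (timestamp, gpu_util_percent) tuples, oldest first.
    """
    window_start = now - idle_seconds
    # Without history covering the whole window, do not shut anything down.
    if not samples or samples[0][0] > window_start:
        return False
    window = [util for ts, util in samples if ts >= window_start]
    return bool(window) and all(util < threshold for util in window)


# One sample per minute for 30 minutes, with a burst of work at t=300.
samples = [(t, 90.0 if t == 300 else 0.0) for t in range(0, 1801, 60)]
print(is_idle(samples, now=1800, idle_seconds=1200))
```

The check for sufficient history is a deliberate design choice: a freshly started environment with only a few samples is treated as active rather than shut down prematurely.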

What Saturn Cloud doesn’t handle

Saturn Cloud assumes Kubernetes already exists and is managing the underlying infrastructure. It doesn’t configure bare metal servers, manage BMCs, or handle cluster lifecycle. That’s k0rdent’s job.

The combined architecture

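Here’s how the layers fit together: k0rdent provisions and manages Kubernetes clusters on the bare metal fleet, and Saturn Cloud runs inside those clusters as the platform users interact with.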

Deployment flow

Infrastructure setup: Install k0rdent management cluster on a control node. Register bare metal servers as BareMetalHost objects with BMC credentials.

Cluster provisioning: Use k0rdent to create one or more Kubernetes clusters from available bare metal hosts. Each cluster can be dedicated to a specific team, project, or security zone.

Saturn Cloud installation: Deploy Saturn Cloud as a k0rdent ServiceTemplate, which automatically installs it into each new cluster via Helm charts. Configure SSO, cloud provider integrations (for object storage), and GPU resource limits. k0rdent’s State Manager (KSM) can bootstrap the Saturn Cloud platform automatically when clusters are created.

User onboarding: Users log into Saturn Cloud, create dev environments or jobs, and start working. They don’t interact with k0rdent or Kubernetes directly.
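The cluster provisioning and Saturn Cloud installation steps can be combined in a single k0rdent ClusterDeployment that references both a cluster template and a service template. The sketch below builds such an object as a Python dict. The overall shape (`spec.template`, `spec.credential`, `spec.config`, `spec.serviceSpec.services`) follows the k0rdent documentation as I understand it, but the API version, namespace, template names, credential name, and the `workersNumber` config key are all hypothetical placeholders that should be checked against your installed k0rdent version and templates.

```python
import json


def cluster_deployment(name, cluster_template, credential,
                       saturn_template, workers):
    """Build a k0rdent ClusterDeployment manifest as a plain dict."""
    return {
        # Assumption: API group/version may differ between k0rdent releases.
        "apiVersion": "k0rdent.mirantis.com/v1beta1",
        "kind": "ClusterDeployment",
        "metadata": {"name": name, "namespace": "kcm-system"},
        "spec": {
            "template": cluster_template,   # which kind of cluster to build
            "credential": credential,       # access to the infrastructure
            # Assumption: config keys depend on the chosen cluster template.
            "config": {"workersNumber": workers},
            "serviceSpec": {
                "services": [{
                    "template": saturn_template,  # ServiceTemplate to install
                    "name": "saturn-cloud",
                    "namespace": "saturn",
                }],
            },
        },
    }


# All names below are placeholders, not real template identifiers.
deployment = cluster_deployment(
    name="tenant-a-gpu",
    cluster_template="example-baremetal-template",
    credential="example-baremetal-credential",
    saturn_template="example-saturn-cloud-template",
    workers=4,
)
print(json.dumps(deployment, indent=2))
```

Keeping the service reference inside the cluster definition is what lets every new tenant cluster come up with the platform already installed, rather than requiring a separate Helm step per cluster.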

Different teams, different concerns

k0rdent operates at infrastructure time scales (creating clusters takes minutes). Saturn Cloud operates at application time scales (starting a notebook takes seconds).

Infrastructure engineers manage k0rdent: adding servers, configuring networks, managing cluster templates. They don’t need to understand PyTorch or model deployment patterns.

AI/ML engineers use Saturn Cloud: launching training jobs, deploying models, tracking experiments. They don’t need to know how BareMetalHost objects work or how Metal3 images machines.

What this enables for bare metal GPU providers

Bare metal GPU providers often compete on price and availability. H200s at $3.50/hour instead of $10/hour on GCP is a compelling pitch, but only if the platform experience doesn’t require customers to rewrite their workflows.

With k0rdent + Saturn Cloud, you can offer:

Self-service provisioning: Customers get a web interface and API, not SSH credentials and a Slack channel for support requests.

Multi-tenancy: Run multiple customer environments on shared infrastructure with proper isolation and usage tracking.

Standard integrations: Saturn Cloud works with existing tools (S3-compatible storage, GitHub, Docker registries). Customers don’t need to learn new APIs.

Operational visibility: Track GPU utilization, identify idle resources, and allocate costs by user or project.

The infrastructure work (server orchestration, Kubernetes management) is handled by k0rdent. The application layer (notebooks, jobs, deployments) is handled by Saturn Cloud. You provide the hardware (GPUs, network, storage).

What this means for bare metal GPU providers

The economics of AI infrastructure are shifting. While hyperscalers built their ML platforms to drive consumption of their compute at premium pricing, bare metal GPU providers have the opportunity to deliver superior price-performance without sacrificing the platform experience that enterprise AI teams expect. The combination of k0rdent and Saturn Cloud eliminates the false choice between “cheap but manual” and “expensive but managed.” Your customers get the self-service development environments, orchestration tools, and operational visibility they need, running on infrastructure that costs 60-70% less than hyperscaler alternatives. More importantly, this approach lets you focus on what differentiates your business: GPU availability, network performance, and customer relationships, not rebuilding infrastructure management software.

The bare metal GPU market is consolidating around providers who can deliver complete platforms, not just compute. Whether you’re running your first rack or your tenth datacenter, the path forward is clear: automate infrastructure management with k0rdent, deliver application-layer value with Saturn Cloud, and compete on the economics and performance that only bare metal can provide.

Join Our Webinar: From Bare Metal to Full-Stack AI Platform in 90 Days

Join engineers from Mirantis and Saturn Cloud for a technical deep-dive covering:

- Live demo: Provisioning a multi-tenant AI platform on bare metal infrastructure

- Architecture walkthrough: How k0rdent and Saturn Cloud integrate for production deployments

- Real-world case study: A bare metal GPU provider’s journey from manual provisioning to automated platform delivery

- Q&A: Your specific deployment scenarios and technical requirements

Sign up below to receive the webinar invitation and an early-access link to the recording once the session concludes.

Questions about your specific deployment? Contact support@saturncloud.io.
