A Guide to MLOps

Introduction

ML models have grown significantly in recent years, and businesses increasingly rely on them to automate and optimize their operations. However, managing ML models can be challenging, especially as models become more complex and require more resources to train and deploy. This has led to the emergence of MLOps as a way to standardize and streamline the ML workflow. MLOps emphasizes the need for continuous integration and continuous deployment (CI/CD) in the ML workflow, ensuring that models are updated in real-time to reflect changes in data or ML algorithms. This infrastructure is valuable in areas where accuracy, reproducibility, and reliability are critical, such as healthcare, finance, and self-driving cars. By implementing MLOps, organizations can ensure that their ML models are continuously updated and accurate, helping to drive innovation, reduce costs, and improve efficiency.

What is MLOps?

MLOps is a methodology combining ML and DevOps practices to streamline developing, deploying, and maintaining ML models. MLOps share several key characteristics with DevOps, including:

CI/CD: MLOps emphasizes the need for a continuous cycle of code, data, and model updates in ML workflows. This approach requires automating as much as possible to ensure consistent and reliable results.

Automation: Like DevOps, MLOps stresses the importance of automation throughout the ML lifecycle. Automating critical steps in the ML workflow, such as data processing, model training, and deployment, results in a more efficient and reliable workflow.

Collaboration and Transparency: MLOps encourages a collaborative and transparent culture of shared knowledge and expertise across teams developing and deploying ML models. This helps to ensure a streamlined process, as handoff expectations will be more standardized.

Infrastructure as Code (IaC): DevOps and MLOps employ an “infrastructure as code” approach, in which infrastructure is treated as code and managed through version control systems. This approach allows teams to manage infrastructure changes more efficiently and reproducibly.

Testing and Monitoring: MLOps and DevOps emphasize the importance of testing and monitoring to ensure consistent and reliable results. In MLOps, this involves testing and monitoring the accuracy and performance of ML models over time.

Flexibility and Agility: DevOps and MLOps emphasize flexibility and agility in response to changing business needs and requirements. This means being able to rapidly deploy and iterate on ML models to keep up with evolving business demands.

The bottom line is that ML has a lot of variability in its behavior, given that models are essentially a black box used to generate some prediction. While DevOps and MLOps share many similarities, MLOps requires a more specialized set of tools and practices to address the unique challenges posed by data-driven and computationally-intensive ML workflows. ML workflows often require a broad range of technical skills that go beyond traditional software development, and they may involve specialized infrastructure components, such as accelerators, GPUs, and clusters, to manage the computational demands of training and deploying ML models. Nevertheless, taking the best practices of DevOps and applying them across the ML workflow will significantly reduce project times and provide the structure ML needs to be effective in production.

Importance and Benefits of MLOps in Modern Business

ML has revolutionized how businesses analyze data, make decisions, and optimize operations. It enables organizations to create powerful, data-driven models that reveal patterns, trends, and insights, leading to more informed decision-making and more effective automation. However, effectively deploying and managing ML models can be challenging, which is where MLOps comes into play. MLOps is becoming increasingly important for modern businesses because it offers a range of benefits, including:

Faster Development Time: MLOps allows organizations to accelerate the development life-cycle of ML models, reducing the time to market and enabling businesses to respond quickly to changing market demands. Furthermore, MLOps can help automate many tasks in data collection, model training, and deployment, freeing up resources and speeding up the overall process.

Better Model Performance: With MLOps, businesses can continuously monitor and improve the performance of their ML models. MLOps facilitates automated testing mechanisms for ML models, which detects problems related to model accuracy, model drift, and data quality. Organizations can improve their ML models' overall performance and accuracy by addressing these issues early, translating into better business outcomes.

More Reliable Deployments: MLOps allows businesses to deploy ML models more reliably and consistently across different production environments. By automating the deployment process, MLOps reduces the risk of deployment errors and inconsistencies between different environments when running in production.

Reduced Costs and Improved Efficiency: Implementing MLOps can help organizations reduce costs and improve overall efficiency. By automating many tasks involved in data processing, model training, and deployment, organizations can reduce the need for manual intervention, resulting in a more efficient and cost-effective workflow.

In summary, MLOps is essential for modern businesses looking to leverage the transformative power of ML to drive innovation, stay ahead of the competition, and improve business outcomes. By enabling faster development time, better model performance, more reliable deployments, and enhanced efficiency, MLOps is instrumental in unlocking the full potential of harnessing ML for business intelligence and strategy. Utilizing MLOps tools will also allow team members to focus on more important matters and businesses to save on having large dedicated teams to maintain redundant workflows.

The MLOps Lifecycle

Whether creating your own MLOps infrastructure or selecting from various available MLOps platforms online, ensuring your infrastructure encompasses the four features mentioned below is critical to success. By selecting MLOps tools that address these vital aspects, you will create a continuous cycle from data scientists to deployment engineers to deploy models quickly without sacrificing quality.

Continuous Integration (CI)

Continuous Integration (CI) involves constantly testing and validating changes made to code and data to ensure they meet a set of defined standards. In MLOps, CI integrates new data and updates to ML models and supporting code. CI helps teams catch issues early in the development process, enabling them to collaborate more effectively and maintain high-quality ML models. Examples of CI practices in MLOps include:

Automated data validation checks to ensure data integrity and quality.

Model version control to track changes in model architecture and hyperparameters.

Automated unit testing of model code to catch issues before the code is merged into the production repository.

Continuous Deployment (CD)

Continuous Deployment (CD) is the automated release of software updates to production environments, such as ML models or applications. In MLOps, CD focuses on ensuring that the deployment of ML models is seamless, reliable, and consistent. CD reduces the risk of errors during deployment and makes it easier to maintain and update ML models in response to changing business requirements. Examples of CD practices in MLOps include:

Automated ML pipeline with continuous deployment tools like Jenkins or CircleCI for integrating and testing model updates, then deploying them to production.

Containerization of ML models using technologies like Docker to achieve a consistent deployment environment, reducing potential deployment issues.

Implementing rolling deployments or blue-green deployments minimizes downtime and allows for an easy rollback of problematic updates.

Continuous Training (CT)

Continuous Training (CT) involves updating ML models as new data becomes available or as existing data changes over time. This essential aspect of MLOps ensures that ML models remain accurate and effective while considering the latest data and preventing model drift. Regularly training models with new data helps maintain optimal performance and achieve better business outcomes. Examples of CT practices in MLOps include:

Setting policies (i.e., accuracy thresholds) that trigger model retraining to maintain up-to-date accuracy.

Using active learning strategies to prioritize collecting valuable new data for training.

Employing ensemble methods to combine multiple models trained on different subsets of data, allowing for continuous model improvement and adaptation to changing data patterns.

Continuous Monitoring (CM)

Continuous Monitoring (CM) involves constantly analyzing the performance of ML models in production environments to identify potential issues, verify that models meet defined standards, and maintain overall model effectiveness. MLOps practitioners use CM to detect issues like model drift or performance degradation, which can compromise the accuracy and reliability of predictions. By regularly monitoring the performance of their models, organizations can proactively address any problems, ensuring that their ML models remain effective and generate the desired results. Examples of CM practices in MLOps include:

Tracking key performance indicators (KPIs) of models in production, such as precision, recall, or other domain-specific metrics.

Implementing model performance monitoring dashboards for real-time visualization of model health.

Applying anomaly detection techniques to identify and handle concept drift, ensuring that the model can adapt to changing data patterns and maintain its accuracy over time.

How Does MLOps Benefit the ML Lifecycle?

Managing and deploying ML models can be time-consuming and challenging, primarily due to the complexity of ML workflows, data variability, the need for iterative experimentation, and the continuous monitoring and updating of deployed models. When the ML lifecycle is not properly streamlined with MLOps, organizations face issues such as inconsistent results due to varying data quality, slower deployment as manual processes become bottlenecks, and difficulty maintaining and updating models rapidly enough to react to changing business conditions. MLOps brings efficiency, automation, and best practices that facilitate each stage of the ML lifecycle.

Consider a scenario where a data science team without dedicated MLOps practices is developing an ML model for sales forecasting. In this scenario, the team may encounter the following challenges:

Data preprocessing and cleansing tasks are time-consuming due to the lack of standardized practices or automated data validation tools.

Difficulty in reproducibility and traceability of experiments due to inadequate versioning of model architecture, hyperparameters, and data sets.

Manual and inefficient deployment processes lead to delays in releasing models to production and the increased risk of errors in production environments.

Manual deployments can also add many failures in automatically scaling deployments across multiple servers online, affecting redundancy and uptime.

Inability to rapidly adjust deployed models to changes in data patterns, potentially leading to performance degradation and model drift.

There are five stages in the ML lifecycle, which are directly improved with MLOps tooling mentioned below.

Data Collection and Preprocessing

The first stage of the ML lifecycle involves the collection and preprocessing of data. Organizations can ensure data quality, consistency, and manageability by implementing best practices at this stage. Data versioning, automated data validation checks, and collaboration within the team lead to better accuracy and effectiveness of ML models. Examples include:

Data versioning to track changes in the datasets used for modeling.

Automated data validation checks to maintain data quality and integrity.

Collaboration tools within the team to share and manage data sources effectively.

Model Development

MLOps helps teams follow standardized practices during the model development stage while selecting algorithms, features, and tuning hyperparameters. This reduces inefficiencies and duplicated efforts, which improves overall model performance. Implementing version control, automated experimentation tracking, and collaboration tools significantly streamline this stage of the ML Lifecycle. Examples include:

Implementing version control for model architecture and hyperparameters.

Establishing a central hub for automated experimentation tracking to reduce repeating experiments and encourage easy comparisons and discussions.

Visualization tools and metric tracking to foster collaboration and monitor the performance of models during development.

Model Training and Validation

In the training and validation stage, MLOps ensures organizations use reliable processes for training and evaluating their ML models. Organizations can effectively optimize their models' accuracy by leveraging automation and best practices in training. MLOps practices include cross-validation, training pipeline management, and continuous integration to automatically test and validate model updates. Examples include:

Cross-validation techniques for better model evaluation.

Managing training pipelines and workflows for a more efficient and streamlined process.

Continuous integration workflows to automatically test and validate model updates.

Model Deployment

The fourth stage is model deployment to production environments. MLOps practices in this stage help organizations deploy models more reliably and consistently, reducing the risk of errors and inconsistencies during deployment. Techniques such as containerization using Docker and automated deployment pipelines enable seamless integration of models into production environments, facilitating rollback and monitoring capabilities. Examples include:

Containerization using Docker for consistent deployment environments.

Automated deployment pipelines to handle model releases without manual intervention.

Rollback and monitoring capabilities for quick identification and remediation of deployment issues.

Model Monitoring and Maintenance

The fifth stage involves ongoing monitoring and maintenance of ML models in production. Utilizing MLOps principles for this stage allows organizations to evaluate and adjust models as needed consistently. Regular monitoring helps detect issues like model drift or performance degradation, which can compromise the accuracy and reliability of predictions. Key performance indicators, model performance dashboards, and alerting mechanisms ensure organizations can proactively address any problems and maintain the effectiveness of their ML models. Examples include:

Key performance indicators for tracking the performance of models in production.

Model performance dashboards for real-time visualization of the model’s health.

Alerting mechanisms to notify teams of sudden or gradual changes in model performance, enabling quick intervention and remediation.

MLOps Tools and Technologies

Adopting the right tools and technologies is crucial to implement MLOps practices and managing end-to-end ML workflows successfully. Many MLOps solutions offer many features, from data management and experimentation tracking to model deployment and monitoring. From an MLOps tool that advertises a whole ML lifecycle workflow, you should expect these features to be implemented in some manner:

End-to-end ML lifecycle management: All these tools are designed to support various stages of the ML lifecycle, from data preprocessing and model training to deployment and monitoring.

Experiment tracking and versioning: These tools provide some mechanism for tracking experiments, model versions, and pipeline runs, enabling reproducibility and comparing different approaches. Some tools might show reproducibility using other abstractions but nevertheless have some form of version control.

Model deployment: While the specifics differ among the tools, they all offer some model deployment functionality to help users transition their models to production environments or to provide a quick deployment endpoint to test with applications requesting model inference.

Integration with popular ML libraries and frameworks: These tools are compatible with popular ML libraries such as TensorFlow, PyTorch, and Scikit-learn, allowing users to leverage their existing ML tools and skills. However, the amount of support each framework has differs across tooling.

Scalability: Each platform provides ways to scale workflows, either horizontally, vertically, or both, enabling users to work with large data sets and train more complex models efficiently.

Extensibility and customization: These tools offer varying extensibility and customization, enabling users to tailor the platform to their specific needs and integrate it with other tools or services as required.

Collaboration and multi-user support: Each platform typically accommodates collaboration among team members, allowing them to share resources, code, data, and experimental results, fostering more effective teamwork and a shared understanding throughout the ML lifecycle.

Environment and dependency handling: Most of these tools include features addressing consistent and reproducible environment handling. This can involve dependency management using containers (i.e., Docker) or virtual environments (i.e., Conda) or providing preconfigured settings with popular data science libraries and tools pre-installed.

Monitoring and alerting: End-to-end MLOps tooling could also offer some form of performance monitoring, anomaly detection, or alerting functionality. This helps users maintain high-performing models, identify potential issues, and ensure their ML solutions remain reliable and efficient in production.

Although there is substantial overlap in the core functionalities provided by these tools, their unique implementations, execution methods, and focus areas set them apart. In other words, judging an MLOps tool at face value might be difficult when comparing their offering on paper. All of these tools provide a different workflow experience.

In the following sections, we’ll showcase some notable MLOps tools designed to provide a complete end-to-end MLOps experience and highlight the differences in how they approach and execute standard MLOps features.

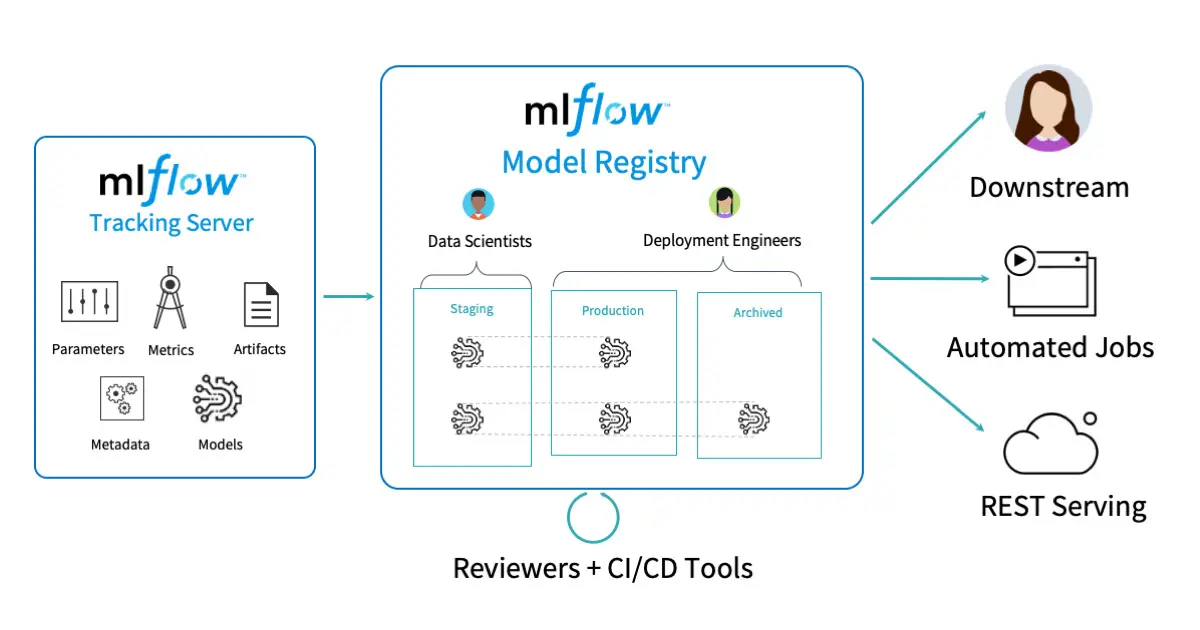

MLFlow

MLflow has unique features and characteristics that differentiate it from other MLOps tools, making it appealing to users with specific requirements or preferences:

Modularity: One of MLflow’s most significant advantages is its modular architecture. It consists of independent components (Tracking, Projects, Models, and Registry) that can be used separately or in combination, enabling users to tailor the platform to their precise needs without being forced to adopt all components.

Language Agnostic: MLflow supports multiple programming languages, including Python, R, and Java, which makes it accessible to a wide range of users with diverse skill sets. This primarily benefits teams with members who prefer different programming languages for their ML workloads.

Integration with Popular Libraries: MLflow is designed to work with popular ML libraries such as TensorFlow, PyTorch, and Scikit-learn. This compatibility allows users to integrate MLflow seamlessly into their existing workflows, taking advantage of its management features without adopting an entirely new ecosystem or changing their current tools.

Active, Open-source Community: MLflow has a vibrant open-source community that contributes to its development and keeps the platform up-to-date with new trends and requirements in the MLOps space. This active community support ensures that MLflow remains a cutting-edge and relevant ML lifecycle management solution.

While MLflow is a versatile and modular tool for managing various aspects of the ML lifecycle, it has some limitations compared to other MLOps platforms. One notable area where MLflow falls short is its need for an integrated, built-in pipeline orchestration and execution feature, such as those provided by TFX or Kubeflow Pipelines. While MLflow can structure and manage your pipeline steps using its tracking, projects, and model components, users may need to rely on external tools or custom scripting to coordinate complex end-to-end workflows and automate the execution of pipeline tasks. As a result, organizations seeking more streamlined, out-of-the-box support for complex pipeline orchestration may find that MLflow’s capabilities need improvement and explore alternative platforms or integrations to address their pipeline management needs.

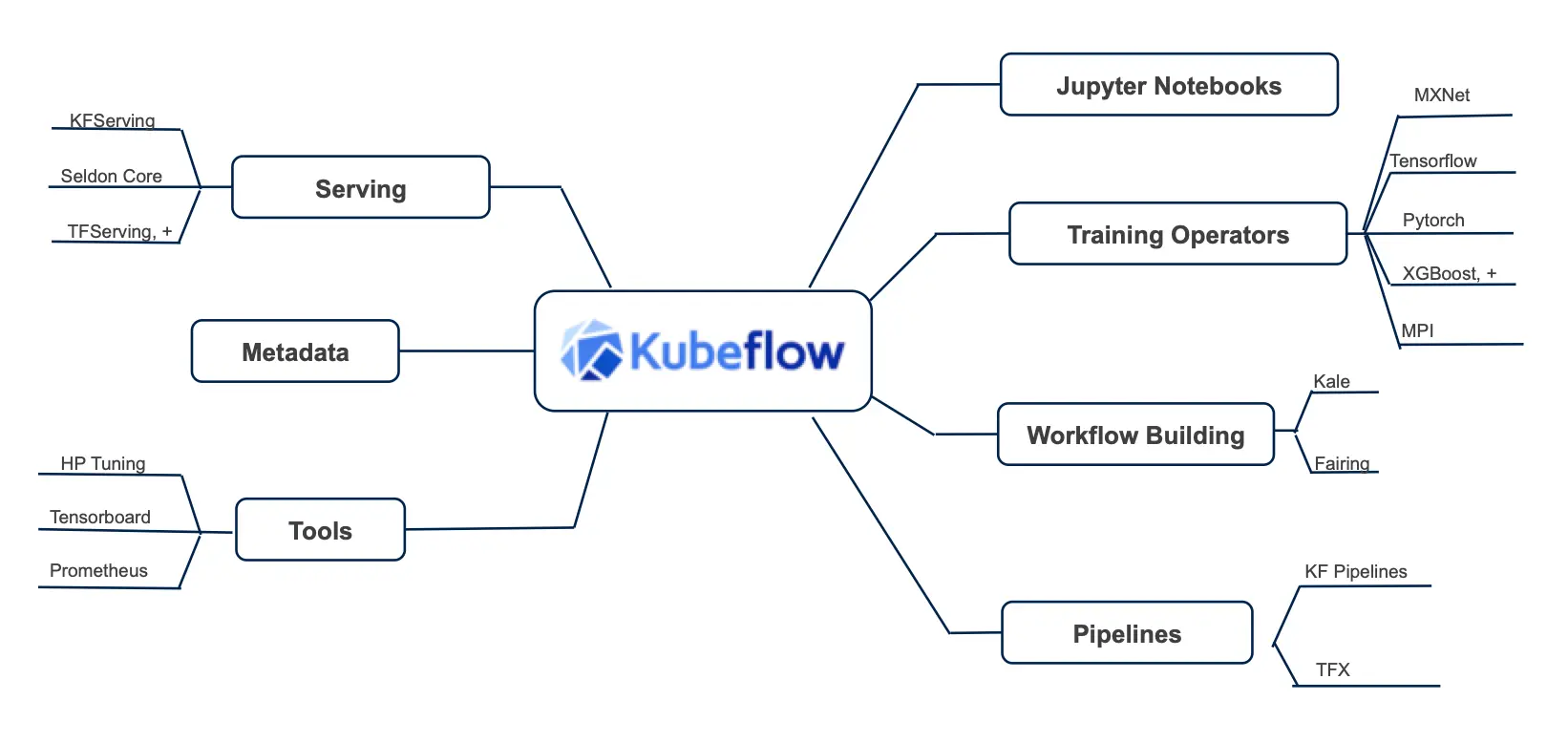

Kubeflow

While Kubeflow is a comprehensive MLOps platform with a suite of components tailored to cater to various aspects of the ML lifecycle, it has some limitations compared to other MLOps tools. Some of the areas where Kubeflow may fall short include:

Steeper Learning Curve: Kubeflow’s strong coupling with Kubernetes may result in a steeper learning curve for users who need to become more familiar with Kubernetes concepts and tooling. This might increase the time required to onboard new users and could be a barrier to adoption for teams without Kubernetes experience.

Limited Language Support: Kubeflow was initially developed with a primary focus on TensorFlow, and although it has expanded support for other ML frameworks like PyTorch and MXNet, it still has a more substantial bias towards the TensorFlow ecosystem. Organizations working with other languages or frameworks may require additional effort to adopt and integrate Kubeflow into their workflows.

Infrastructure Complexity: Kubeflow’s reliance on Kubernetes might introduce additional infrastructure management complexity for organizations without an existing Kubernetes setup. Smaller teams or projects that don’t require the full capabilities of Kubernetes might find Kubeflow’s infrastructure requirements to be an unnecessary overhead.

Less Focus on Experiment Tracking: While Kubeflow does offer experiment tracking functionalities through its Kubeflow Pipelines component, it may not be as extensive or user-friendly as dedicated experiment tracking tools like MLflow or Weights & Biases, another end-to-end MLOps tool with emphasis on real-time model observability tools. Teams with a strong focus on experiment tracking and comparison might find this aspect of Kubeflow needs improvement compared to other MLOps platforms with more advanced tracking features.

Integration with Non-Kubernetes Systems: Kubeflow’s Kubernetes-native design may limit its integration capabilities with other non-Kubernetes-based systems or proprietary infrastructure. In contrast, more flexible or agnostic MLOps tools like MLflow might offer more accessible integration options with various data sources and tools, regardless of the underlying infrastructure.

Kubeflow is an MLOps platform designed as a wrapper around Kubernetes, streamlining deployment, scaling, and managing ML workloads while converting them into Kubernetes-native workloads. This close relationship with Kubernetes offers advantages, such as the efficient orchestration of complex ML workflows. Still, it might introduce complexities for users lacking Kubernetes expertise, those using a wide range of languages or frameworks, or organizations with non-Kubernetes-based infrastructure. Overall, Kubeflow’s Kubernetes-centric nature provides significant benefits for deployment and orchestration, and organizations should consider these trade-offs and compatibility factors when assessing Kubeflow for their MLOps needs.

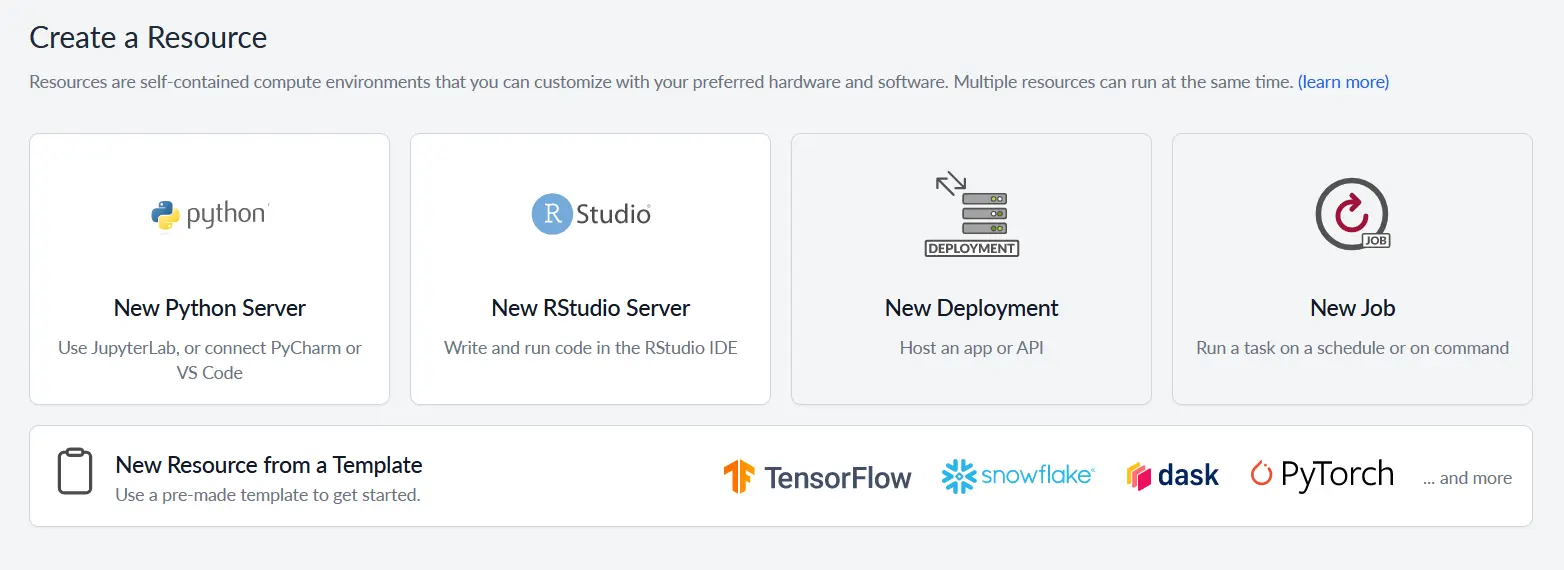

Saturn Cloud

Saturn Cloud is an MLOps platform that offers hassle-free scaling, infrastructure, collaboration, and rapid deployment of ML models, focusing on parallelization and GPU acceleration. Some key advantages and robust features of Saturn Cloud include:

Resource Acceleration Focus: Saturn Cloud strongly emphasizes providing easy-to-use GPU acceleration & flexible resource management for ML workloads. While other tools may support GPU-based processing, Saturn Cloud simplifies this process to remove infrastructure management overhead for the data scientist to use this acceleration.

Dask and Distributed Computing: Saturn Cloud has tight integration with Dask, a popular library for parallel and distributed computing in Python. This integration allows users to scale out their workloads effortlessly to use parallel processing on multi-node clusters.

Managed Infrastructure and Pre-built Environments: Saturn Cloud goes a step further in providing managed infrastructure and pre-built environments, easing the burden of infrastructure setup and maintenance for users.

Easy Resource Management and Sharing: Saturn Cloud simplifies sharing resources like Docker images, secrets, and shared folders by allowing users to define ownership and access asset permissions. These assets can be owned by an individual user, a group (a collection of users), or the entire organization. The ownership determines who can access and use the shared resources. Furthermore, users can clone full environments easily for others to run the same code anywhere.

Infrastructure as Code: Saturn Cloud employs a recipe JSON format, enabling users to define and manage resources with a code-centric approach. This fosters consistency, modularity, and version control, streamlining the platform’s setup and management of infrastructure components.

Saturn Cloud, while providing useful features and functionality for many use cases, may have some limitations compared to other MLOps tools. Here are a few areas that Saturn Cloud might be limited in:

Integration with Non-Python Languages: Saturn Cloud primarily targets the Python ecosystem, with extensive support for popular Python libraries and tools. However, any language that can be run in a Linux environment can be run with the Saturn Cloud platform.

Out-of-the-Box Experiment Tracking: While Saturn Cloud does facilitate experiment logging and tracking, its focus on scaling and infrastructure is more extensive than its experiment tracking capabilities. However, those who seek more customization and functionality in the tracking side of the MLOps workflow will be pleased to know that Saturn Cloud can be integrated with platforms including, but not limited to, Comet, Weights & Biases, Verta, and Neptune.

Kubernetes-Native Orchestration: Although Saturn Cloud offers scalability and managed infrastructure via Dask, it lacks the Kubernetes-native orchestration that tools like Kubeflow provide. Organizations heavily invested in Kubernetes may prefer platforms with deeper Kubernetes integration.

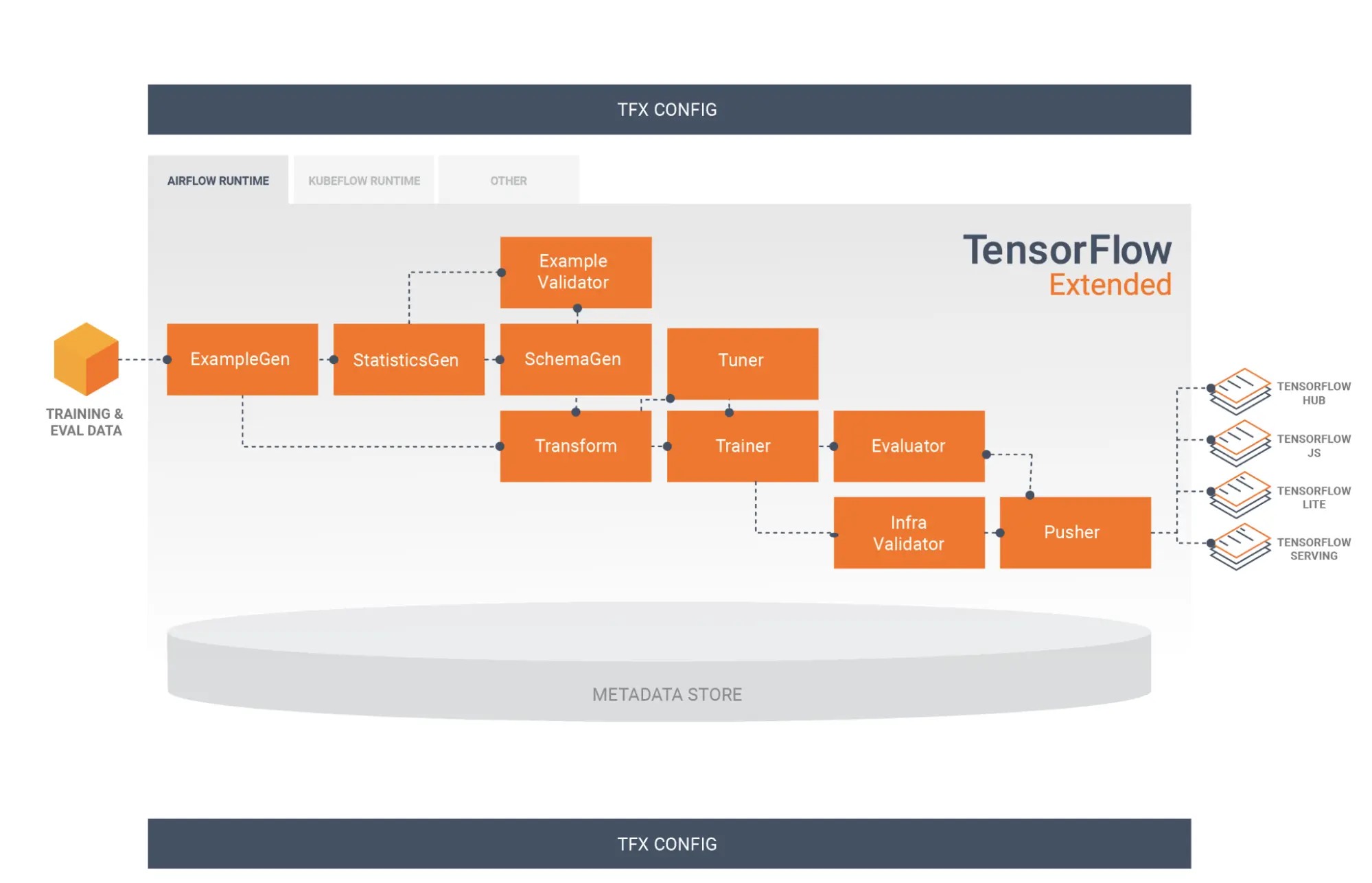

TensorFlow Extended (TFX)

TensorFlow Extended (TFX) is an end-to-end platform designed explicitly for TensorFlow users, providing a comprehensive and tightly-integrated solution for managing TensorFlow-based ML workflows. TFX excels in areas like:

TensorFlow Integration: TFX’s most notable strength is its seamless integration with the TensorFlow ecosystem. It offers a complete set of components tailored for TensorFlow, making it easier for users already invested in TensorFlow to build, test, deploy, and monitor their ML models without switching to other tools or frameworks.

Production Readiness: TFX is built with production environments in mind, emphasizing robustness, scalability, and the ability to support mission-critical ML workloads. It handles everything from data validation and preprocessing to model deployment and monitoring, ensuring that models are production-ready and can deliver reliable performance at scale.

End-to-end Workflows: TFX provides extensive components for handling various stages of the ML lifecycle. With support for data ingestion, transformation, model training, validation, and serving, TFX enables users to build end-to-end pipelines that ensure the reproducibility and consistency of their workflows.

Extensibility: TFX’s components are customizable and allow users to create and integrate their own components if needed. This extensibility enables organizations to tailor TFX to their specific requirements, incorporate their preferred tools, or implement custom solutions for unique challenges they might encounter in their ML workflows.

However, it’s worth noting that TFX’s primary focus on TensorFlow can be a limitation for organizations that rely on other ML frameworks or prefer a more language-agnostic solution. While TFX delivers a powerful and comprehensive platform for TensorFlow-based workloads, users working with frameworks like PyTorch or Scikit-learn may need to consider other MLOps tools that better suit their requirements. TFX’s strong TensorFlow integration, production readiness, and extensible components make it an attractive MLOps platform for organizations heavily invested in the TensorFlow ecosystem. Organizations can assess the compatibility of their current tools and frameworks and decide whether TFX’s features align well with their specific use cases and needs in managing their ML workflows.

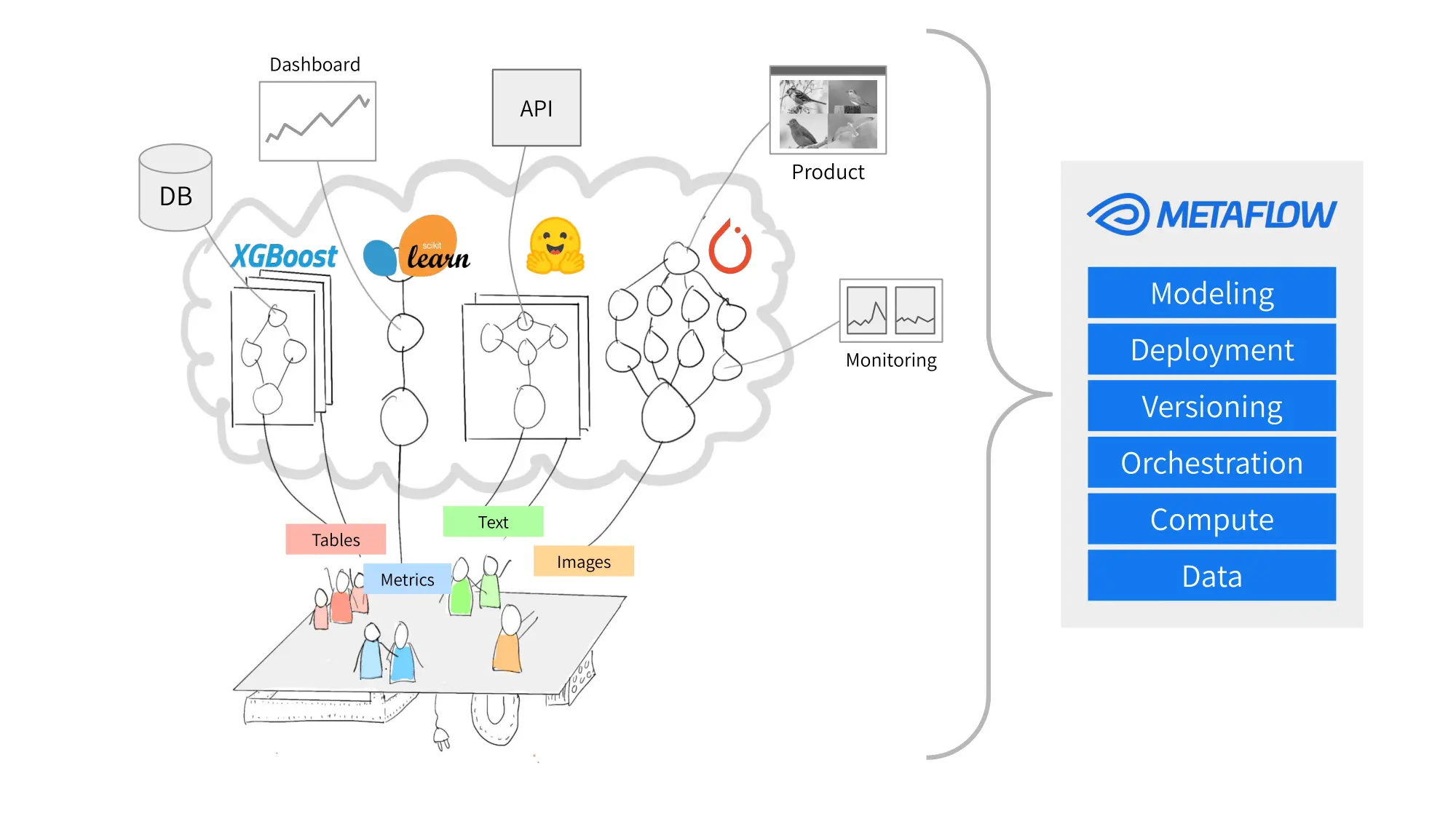

MetaFlow

Metaflow is an MLOps platform developed by Netflix, designed to streamline and simplify complex, real-world data science projects. Metaflow shines in several aspects due to its focus on handling real-world data science projects and simplifying complex ML workflows. Here are some areas where Metaflow excels:

Workflow Management: Metaflow’s primary strength lies in managing complex, real-world ML workflows effectively. Users can design, organize, and execute intricate processing and model training steps with built-in versioning, dependency management, and a Python-based domain-specific language.

Observable: Metaflow provides functionality to observe inputs and outputs after each pipeline step, making it easy to track the data at various stages of the pipeline.

Scalability: Metaflow easily scales workflows from local environments to the cloud and has tight integration with AWS services like AWS Batch, S3, and Step Functions. This makes it simple for users to run and deploy their workloads at scale without worrying about the underlying resources.

Built-in Data Management: Metaflow provides tools for efficient data management and versioning by automatically keeping track of datasets used by the workflows. It ensures data consistency across different pipeline runs and allows users to access historical data and artifacts, contributing to reproducibility and reliable experimentation.

Fault-Tolerance and Resilience: Metaflow is designed to handle the challenges that arise in real-world ML projects, such as unexpected failures, resource constraints, and changing requirements. It offers features like automatic error handling, retry mechanisms, and the ability to resume failed or halted steps, ensuring that workflows can be executed reliably and efficiently in various situations.

AWS Integration: As Netflix developed Metaflow, it closely integrates with Amazon Web Services (AWS) infrastructure. This makes it significantly easier for users already invested in the AWS ecosystem to leverage existing AWS resources and services in their ML workloads managed by Metaflow. This integration allows for seamless data storage, retrieval, processing, and control access to AWS resources, further streamlining the management of ML workflows.

While Metaflow has several strengths, there are certain areas where it may lack or fall short when compared to other MLOps tools:

Limited Deep Learning Support: Metaflow was initially developed to focus on typical data science workflows and traditional ML methods rather than deep learning. This might make it less suitable for teams or projects primarily working with deep learning frameworks like TensorFlow or PyTorch.

Experiment Tracking: Metaflow offers some experiment-tracking functionalities. Its focus on workflow management and infrastructural simplicity might make its tracking capabilities less comprehensive than dedicated experiment-tracking platforms like MLflow or Weights & Biases.

Kubernetes-Native Orchestration: Metaflow is a versatile platform that can be deployed on various backend solutions, such as AWS Batch and container orchestration systems. However, it lacks the Kubernetes-native pipeline orchestration found in tools like Kubeflow, which allows running entire ML pipelines as Kubernetes resources.

Language Support: Metaflow primarily supports Python, which is advantageous for most data science practitioners but might be a limitation for teams using other programming languages, such as R or Java, in their ML projects.

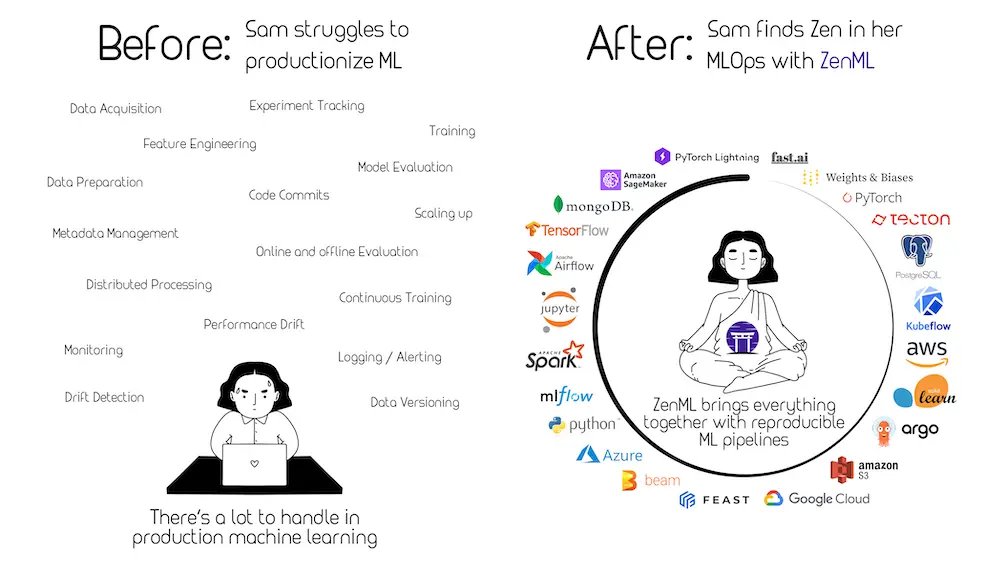

ZenML

ZenML is an extensible, open-source MLOps framework designed to make ML reproducible, maintainable, and scalable. ZenML is intended to be a highly extensible and adaptable MLOps framework. Its main value proposition is that it allows you to easily integrate and “glue” together various machine learning components, libraries, and frameworks to build end-to-end pipelines. ZenML’s modular design makes it easier for data scientists and engineers to mix and match different ML frameworks and tools for specific tasks within the pipeline, reducing the complexity of integrating various tools and frameworks.

Here are some areas where ZenML excels:

ML Pipeline Abstraction: ZenML offers a clean, Pythonic way to define ML pipelines using simple abstractions, making it easy to create and manage different stages of the ML lifecycle, such as data ingestion, preprocessing, training, and evaluation.

Reproducibility: ZenML strongly emphasizes reproducibility, ensuring pipeline components are versioned and tracked through a precise metadata system. This guarantees that ML experiments can be replicated consistently, preventing issues related to unstable environments, data, or dependencies.

Backend Orchestrator Integration: ZenML supports different backend orchestrators, such as Apache Airflow, Kubeflow, and others. This flexibility lets users choose the backend that best fits their needs and infrastructure, whether managing pipelines on their local machines, Kubernetes, or a cloud environment.

Extensibility: ZenML offers a highly extensible architecture that allows users to write custom logic for different pipeline steps and easily integrate with their preferred tools or libraries. This enables organizations to tailor ZenML to their specific requirements and workflows.

Dataset Versioning: ZenML focuses on efficient data management and versioning, ensuring pipelines have access to the correct versions of data and artifacts. This built-in data management system allows users to maintain data consistency across various pipeline runs and fosters transparency in the ML workflows.

High Integration with ML Frameworks: ZenML offers smooth integration with popular ML frameworks, including TensorFlow, PyTorch, and Scikit-learn. Its ability to work with these ML libraries allows practitioners to leverage their existing skills and tools while utilizing ZenML’s pipeline management.

In summary, ZenML excels in providing a clean pipeline abstraction, fostering reproducibility, supporting various backend orchestrators, offering extensibility, maintaining efficient dataset versioning, and integrating with popular ML libraries. Its focus on these aspects makes ZenML particularly suitable for organizations seeking to improve the maintainability, reproducibility, and scalability of their ML workflows without shifting too much of their infrastructure to new tooling.

What’s the Right Tool For Me?

With so many MLOps tools available, how do you know which one is for you and your team? When evaluating potential MLOps solutions, several factors come into play. Here are some key aspects to consider when choosing MLOps tools tailored to your organization’s specific needs and goals:

Organization Size and Team Structure: Consider the size of your data science and engineering teams, their level of expertise, and the extent to which they need to collaborate. Larger groups or more complex hierarchical structures might benefit from tools with robust collaboration and communication features.

Complexity and Diversity of ML Models: Evaluate the range of algorithms, model architectures, and technologies used in your organization. Some MLOps tools cater to specific frameworks or libraries, while others offer more extensive and versatile support.

Level of Automation and Scalability: Determine the extent to which you require automation for tasks like data preprocessing, model training, deployment, and monitoring. Also, understand the importance of scalability in your organization, as some MLOps tools provide better support for scaling up computations and handling large amounts of data.

Integration and Compatibility: Consider the compatibility of MLOps tools with your existing technology stack, infrastructure, and workflows. Seamless integration with your current systems will ensure a smoother adoption process and minimize disruptions to ongoing projects.

Customization and Extensibility: Assess the level of customization and extensibility needed for your ML workflows, as some tools provide more flexible APIs or plugin architectures that enable the creation of custom components to meet specific requirements.

Cost and Licensing: Keep in mind the pricing structures and licensing options of the MLOps tools, ensuring that they fit within your organization’s budget and resource constraints.

Security and Compliance: Evaluate how well the MLOps tools address security, data privacy, and compliance requirements. This is especially important for organizations operating in regulated industries or dealing with sensitive data.

Support and Community: Consider the quality of documentation, community support, and the availability of professional assistance when needed. Active communities and responsive support can be valuable when navigating challenges or seeking best practices.

By carefully examining these factors and aligning them with your organization’s needs and goals, you can make informed decisions when selecting MLOps tools that best support your ML workflows and enable a successful MLOps strategy.

MLOps Best Practices

Establishing best practices in MLOps is crucial for organizations looking to develop, deploy, and maintain high-quality ML models that drive value and positively impact their business outcomes. By implementing the following practices, organizations can ensure that their ML projects are efficient, collaborative, and maintainable while minimizing the risk of potential issues arising from inconsistent data, outdated models, or slow and error-prone development:

Ensuring data quality and consistency: Establish robust preprocessing pipelines, use tools for automated data validation checks like Great Expectations or TensorFlow Data Validation, and implement data governance policies that define data storage, access, and processing rules. A lack of data quality control can lead to inaccurate or biased model results, causing poor decision-making and potential business losses.

Version control for data and models: Use version control systems like Git or DVC to track changes made to data and models, improving collaboration and reducing confusion among team members. For example, DVC can manage different versions of datasets and model experiments, allowing easy switching, sharing, and reproduction. With version control, teams can manage multiple iterations and reproduce past results for analysis.

Collaborative and reproducible workflows: Encourage collaboration by implementing clear documentation, code review processes, standardized data management, and collaborative tools and platforms like Jupyter Notebooks and Saturn Cloud. Supporting team members to work together efficiently and effectively helps accelerate the development of high-quality models. On the other hand, ignoring collaborative and reproducible workflows results in slower development, increased risk of errors, and hindered knowledge sharing.

Automated testing and validation: Adopt a rigorous testing strategy by integrating automated testing and validation techniques (e.g., unit tests with Pytest, integration tests) into your ML pipeline, leveraging continuous integration tools like GitHub Actions or Jenkins to test model functionality regularly. Automated tests help identify and fix issues before deployment, ensuring a high-quality and reliable model performance in production. Skipping automated testing increases the risk of undetected problems, compromising model performance and ultimately hurting business outcomes.

Monitoring and alerting systems: Use tools like Amazon SageMaker Model Monitor, MLflow, or custom solutions to track key performance metrics and set up alerts to detect potential issues early. For example, configure alerts in MLflow when model drift is detected or specific performance thresholds are breached. Not implementing monitoring and alerting systems delays the detection of problems like model drift or performance degradation, resulting in suboptimal decisions based on outdated or inaccurate model predictions, negatively affecting the overall business performance.

By adhering to these MLOps best practices, organizations can efficiently develop, deploy, and maintain ML models while minimizing potential issues and maximizing model effectiveness and overall business impact.

MLOps and Data Security

Data security plays a vital role in the successful implementation of MLOps. Organizations must take necessary precautions to guarantee that their data and models remain secure and protected at every stage of the ML lifecycle. Critical considerations for ensuring data security in MLOps include:

Model Robustness: Ensure your ML models can withstand adversarial attacks or perform reliably in noisy or unexpected conditions. For instance, you can incorporate techniques like adversarial training, which involves injecting adversarial examples into the training process to increase model resilience against malicious attacks. Regularly evaluating model robustness helps prevent potential exploitation that could lead to incorrect predictions or system failures.

Data privacy and compliance: To safeguard sensitive data, organizations must adhere to relevant data privacy and compliance regulations, such as the General Data Protection Regulation (GDPR) or the Health Insurance Portability and Accountability Act (HIPAA). This may involve implementing robust data governance policies, anonymizing sensitive information, or utilizing techniques like data masking or pseudonymization.

Model security and integrity: Ensuring the security and integrity of ML models helps protect them from unauthorized access, tampering, or theft. Organizations can implement measures like encryption of model artifacts, secure storage, and model signing to validate authenticity, thereby minimizing the risk of compromise or manipulation by outside parties.

Secure deployment and access control: When deploying ML models to production environments, organizations must follow best practices for fast deployment. This includes identifying and fixing potential vulnerabilities, implementing secure communication channels (e.g., HTTPS or TLS), and enforcing strict access control mechanisms to restrict only model access to authorized users. Organizations can prevent unauthorized access and maintain model security using role-based access control and authentication protocols like OAuth or SAML.

Involving security teams like red teams in the MLOps cycle can also significantly enhance overall system security. Red teams, for instance, can simulate adversarial attacks on models and infrastructure, helping identify vulnerabilities and weaknesses that might otherwise go unnoticed. This proactive security approach enables organizations to address issues before they become threats, ensuring compliance with regulations and enhancing their ML solutions' overall reliability and trustworthiness. Collaborating with dedicated security teams during the MLOps cycle fosters a robust security culture that ultimately contributes to the success of ML projects.

MLOps Out in Industry

MLOps has been successfully implemented across various industries, driving significant improvements in efficiency, automation, and overall business performance. The following are real-world examples showcasing the potential and effectiveness of MLOps in different sectors:

Healthcare with CareSource

CareSource is one of the largest Medicaid providers in the United States focusing on triaging high-risk pregnancies and partnering with medical providers to proactively provide lifesaving obstetrics care. However, some data bottlenecks needed to be solved. CareSource’s data was siloed in different systems and was not always up to date, which made it difficult to access and analyze. When it came to model training, data was not always in a consistent format, which made it difficult to clean and prepare for analysis.

To address these challenges, CareSource implemented an MLOps framework that uses Databricks Feature Store, MLflow, and Hyperopt to develop, tune, and track ML models to predict obstetrics risk. They then used Stacks to help instantiate a production-ready template for deployment and send prediction results at a timely schedule to medical partners.

The accelerated transition between ML development and production-ready deployment enabled CareSource to directly impact patients' health and lives before it was too late. For example, CareSource identified high-risk pregnancies earlier, leading to better outcomes for mothers and babies. They also reduced the cost of care by preventing unnecessary hospitalizations.

Finance with Moody’s Analytics

Moody’s Analytics, a leader in financial modeling, encountered challenges such as limited access to tools and infrastructure, friction in model development and delivery, and knowledge silos across distributed teams. They developed and utilized ML models for various applications, including credit risk assessment and financial statement analysis. In response to these challenges, they implemented the Domino data science platform to streamline their end-to-end workflow and enable efficient collaboration among data scientists.

By leveraging Domino, Moody’s Analytics accelerated model development, reduced a nine-month project to four months, and significantly improved its model monitoring capabilities. This transformation allowed the company to efficiently develop and deliver customized, high-quality models for clients' needs, like risk evaluation and financial analysis.

Entertainment with Netflix

Netflix utilized Metaflow to streamline the development, deployment, and management of ML workloads for various applications, such as personalized content recommendations, optimizing streaming experiences, content demand forecasting, and sentiment analysis for social media engagement. By fostering efficient MLOps practices and tailoring a human-centric framework for their internal workflows, Netflix empowered its data scientists to experiment and iterate rapidly, leading to a more nimble and effective data science practice.

According to Ville Tuulos, a former manager of machine learning infrastructure at Netflix, implementing Metaflow reduced the average time from project idea to deployment from four months to just one week. This accelerated workflow highlights the transformative impact of MLOps and dedicated ML infrastructure, enabling ML teams to operate more quickly and efficiently. By integrating machine learning into various aspects of their business, Netflix showcases the value and potential of MLOps practices to revolutionize industries and improve overall business operations, providing a substantial advantage to fast-paced companies.

MLOps Lessons Learned

As we’ve seen in the aforementioned cases, the successful implementation of MLOps showcased how effective MLOps practices can drive substantial improvements in different aspects of the business. Thanks to the lessons learned from real-world experiences like this, we can derive key insights into the importance of MLOps for organizations:

Standardization, unified APIs, and abstractions to simplify the ML lifecycle.

Integration of multiple ML tools into a single coherent framework to streamline processes and reduce complexity.

Addressing critical issues like reproducibility, versioning, and experiment tracking to improve efficiency and collaboration.

Developing a human-centric framework that caters to the specific needs of data scientists, reducing friction and fostering rapid experimentation and iteration.

Monitoring models in production and maintaining proper feedback loops to ensure models remain relevant, accurate, and effective.

The lessons from Netflix and other real-world MLOps implementations can provide valuable insights to organizations looking to enhance their own ML capabilities. They emphasize the importance of having a well-thought-out strategy and investing in robust MLOps practices to develop, deploy, and maintain high-quality ML models that drive value while scaling and adapting to evolving business needs.

Future Trends and Challenges in MLOps

As MLOps continues to evolve and mature, organizations must stay aware of the emerging trends and challenges they may face when implementing MLOps practices. A few notable trends and potential obstacles include:

Edge Computing: The rise of edge computing presents opportunities for organizations to deploy ML models on edge devices, enabling faster and localized decision-making, reducing latency, and lowering bandwidth costs. Implementing MLOps in edge computing environments requires new strategies for model training, deployment, and monitoring to account for limited device resources, security, and connectivity constraints.

Explainable AI: As AI systems play a more significant role in everyday processes and decision-making, organizations must ensure that their ML models are explainable, transparent, and unbiased. This requires integrating tools for model interpretability, visualization, and techniques to mitigate bias. Incorporating explainable and responsible AI principles into MLOps practices helps increase stakeholder trust, comply with regulatory requirements, and uphold ethical standards.

Sophisticated Monitoring and Alerting: As the complexity and scale of ML models increase, organizations may require more advanced monitoring and alerting systems to maintain adequate performance. Anomaly detection, real-time feedback, and adaptive alert thresholds are some of the techniques that can help quickly identify and diagnose issues like model drift, performance degradation, or data quality problems. Integrating these advanced monitoring and alerting techniques into MLOps practices can ensure that organizations can proactively address issues as they arise and maintain consistently high levels of accuracy and reliability in their ML models.

Federated Learning: This approach enables training ML models on decentralized data sources while maintaining data privacy. Organizations can benefit from federated learning by implementing MLOps practices for distributed training and collaboration among multiple stakeholders without exposing sensitive data.

Human-in-the-loop Processes: There is a growing interest in incorporating human expertise in many ML applications, especially those that involve subjective decision-making or complex contexts that cannot be fully encoded. Integrating human-in-the-loop processes within MLOps workflows demands effective collaboration tools and strategies for seamlessly combining human and machine intelligence.

Quantum ML: Quantum computing is an emerging field that shows potential in solving complex problems and speeding up specific ML processes. As this technology matures, MLOps frameworks and tools may need to evolve to accommodate quantum-based ML models and handle new data management, training, and deployment challenges.

Robustness and Resilience: Ensuring the robustness and resilience of ML models in the face of adversarial circumstances, such as noisy inputs or malicious attacks, is a growing concern. Organizations will need to incorporate strategies and techniques for robust ML into their MLOps practices to guarantee the safety and stability of their models. This may involve adversarial training, input validation, or deploying monitoring systems to identify and alert when models encounter unexpected inputs or behaviors.

Conclusion

In today’s world, implementing MLOps has become crucial for organizations looking to unleash the full potential of ML, streamline workflows, and maintain high-performing models throughout their lifecycles. This article has explored MLOps practices and tools, use cases across various industries, the importance of data security, and the opportunities and challenges ahead as the field continues to evolve.

To recap, we have discussed the following:

The stages of the MLOps lifecycle.

Popular open-source MLOps tools that can be deployed to your infrastructure of choice.

Best practices for MLOps implementations.

MLOps use cases in different industries and valuable MLOps lessons learned.

Future trends and challenges, such as edge computing, explainable and responsible AI, and human-in-the-loop processes.

As the landscape of MLOps keeps evolving, organizations and practitioners must stay up-to-date with the latest practices, tools, and research. Emphasizing continued learning and adaptation will enable businesses to stay ahead of the curve, refine their MLOps strategies, and effectively address emerging trends and challenges.

The dynamic nature of ML and the rapid pace of technology means that organizations must be prepared to iterate and evolve with their MLOps solutions. This entails adopting new techniques and tools, fostering a collaborative learning culture within the team, sharing knowledge, and seeking insights from the broader MLOps community.

Organizations that embrace MLOps best practices, maintain a strong focus on data security and ethical AI, and remain agile in response to emerging trends will be better positioned to maximize the value of their ML investments. As businesses across industries leverage ML, MLOps will be increasingly vital in ensuring the successful, responsible, and sustainable deployment of AI-driven solutions. By adopting a robust and future-proof MLOps strategy, organizations can unlock the true potential of ML and drive transformative change in their respective fields.

About Saturn Cloud

Saturn Cloud is your all-in-one solution for data science & ML development, deployment, and data pipelines in the cloud. Spin up a notebook with 4TB of RAM, add a GPU, connect to a distributed cluster of workers, and more. Request a demo today to learn more.

Saturn Cloud provides customizable, ready-to-use cloud environments for collaborative data teams.

Try Saturn Cloud and join thousands of users moving to the cloud without

having to switch tools.